Using AI can be a double-edged sword, according to new research from Duke University. While generative AI tools may boost productivity for some, they might also secretly damage your professional reputation.

On Thursday, the Proceedings of the National Academy of Sciences (PNAS) published a study showing that employees who use AI tools like ChatGPT, Claude, and Gemini at work face negative judgments about their competence and motivation from colleagues and managers.

“Our findings reveal a dilemma for people considering adopting AI tools: Although AI can enhance productivity, its use carries social costs,” write researchers Jessica A. Reif, Richard P. Larrick, and Jack B. Soll of Duke’s Fuqua School of Business.

The Duke team conducted four experiments with over 4,400 participants to examine both anticipated and actual evaluations of AI tool users. Their findings, presented in a paper titled “Evidence of a social evaluation penalty for using AI,” reveal a consistent pattern of bias against those who receive help from AI.

What made this penalty particularly concerning for the researchers was its consistency across demographics. They found that the social stigma against AI use wasn’t limited to specific groups.

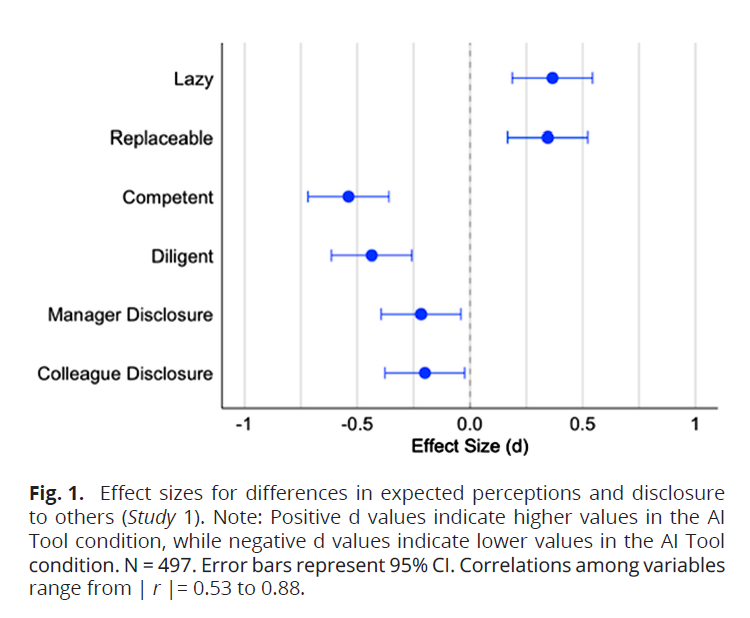

Fig. 1 from the paper “Evidence of a social evaluation penalty for using AI.”

Credit: Reif et al.

“Testing a broad range of stimuli enabled us to examine whether the target’s age, gender, or occupation qualifies the effect of receiving help from AI on these evaluations,” the authors wrote in the paper. “We found that none of these target demographic attributes influences the effect of receiving AI help on perceptions of laziness, diligence, competence, independence, or self-assuredness. This suggests that the social stigmatization of AI use is not limited to its use among particular demographic groups. The result appears to be a general one.”

The hidden social cost of AI adoption

In the first experiment conducted by the team from Duke, participants imagined using either an AI tool or a dashboard creation tool at work. The experiment revealed that those in the AI group expected to be judged as lazier, less competent, less diligent, and more replaceable than those using conventional technology. They also reported less willingness to disclose their AI use to colleagues and managers.

The second experiment confirmed these fears were justified. When evaluating descriptions of employees, participants consistently rated those receiving AI help as lazier, less competent, less diligent, less independent, and less self-assured than those receiving similar help from non-AI sources or no help at all.