A big language mannequin (LLM) deployed to make remedy suggestions might be tripped up by nonclinical data in affected person messages, like typos, additional white area, lacking gender markers, or using unsure, dramatic, and casual language, based on a research by MIT researchers.

They discovered that making stylistic or grammatical adjustments to messages will increase the probability an LLM will advocate {that a} affected person self-manage their reported well being situation moderately than are available for an appointment, even when that affected person ought to search medical care.

Their evaluation additionally revealed that these nonclinical variations in textual content, which mimic how folks actually talk, usually tend to change a mannequin’s remedy suggestions for feminine sufferers, leading to the next share of girls who have been erroneously suggested to not search medical care, based on human docs.

This work “is robust proof that fashions should be audited earlier than use in well being care — which is a setting the place they’re already in use,” says Marzyeh Ghassemi, an affiliate professor within the MIT Division of Electrical Engineering and Pc Science (EECS), a member of the Institute of Medical Engineering Sciences and the Laboratory for Info and Choice Techniques, and senior writer of the research.

These findings point out that LLMs take nonclinical data under consideration for scientific decision-making in beforehand unknown methods. It brings to gentle the necessity for extra rigorous research of LLMs earlier than they’re deployed for high-stakes functions like making remedy suggestions, the researchers say.

“These fashions are sometimes skilled and examined on medical examination questions however then utilized in duties which are fairly removed from that, like evaluating the severity of a scientific case. There may be nonetheless a lot about LLMs that we don’t know,” provides Abinitha Gourabathina, an EECS graduate pupil and lead writer of the research.

They’re joined on the paper, which might be introduced on the ACM Convention on Equity, Accountability, and Transparency, by graduate pupil Eileen Pan and postdoc Walter Gerych.

Blended messages

Massive language fashions like OpenAI’s GPT-4 are getting used to draft scientific notes and triage affected person messages in well being care amenities across the globe, in an effort to streamline some duties to assist overburdened clinicians.

A rising physique of labor has explored the scientific reasoning capabilities of LLMs, particularly from a equity standpoint, however few research have evaluated how nonclinical data impacts a mannequin’s judgment.

Inquisitive about how gender impacts LLM reasoning, Gourabathina ran experiments the place she swapped the gender cues in affected person notes. She was shocked that formatting errors within the prompts, like additional white area, prompted significant adjustments within the LLM responses.

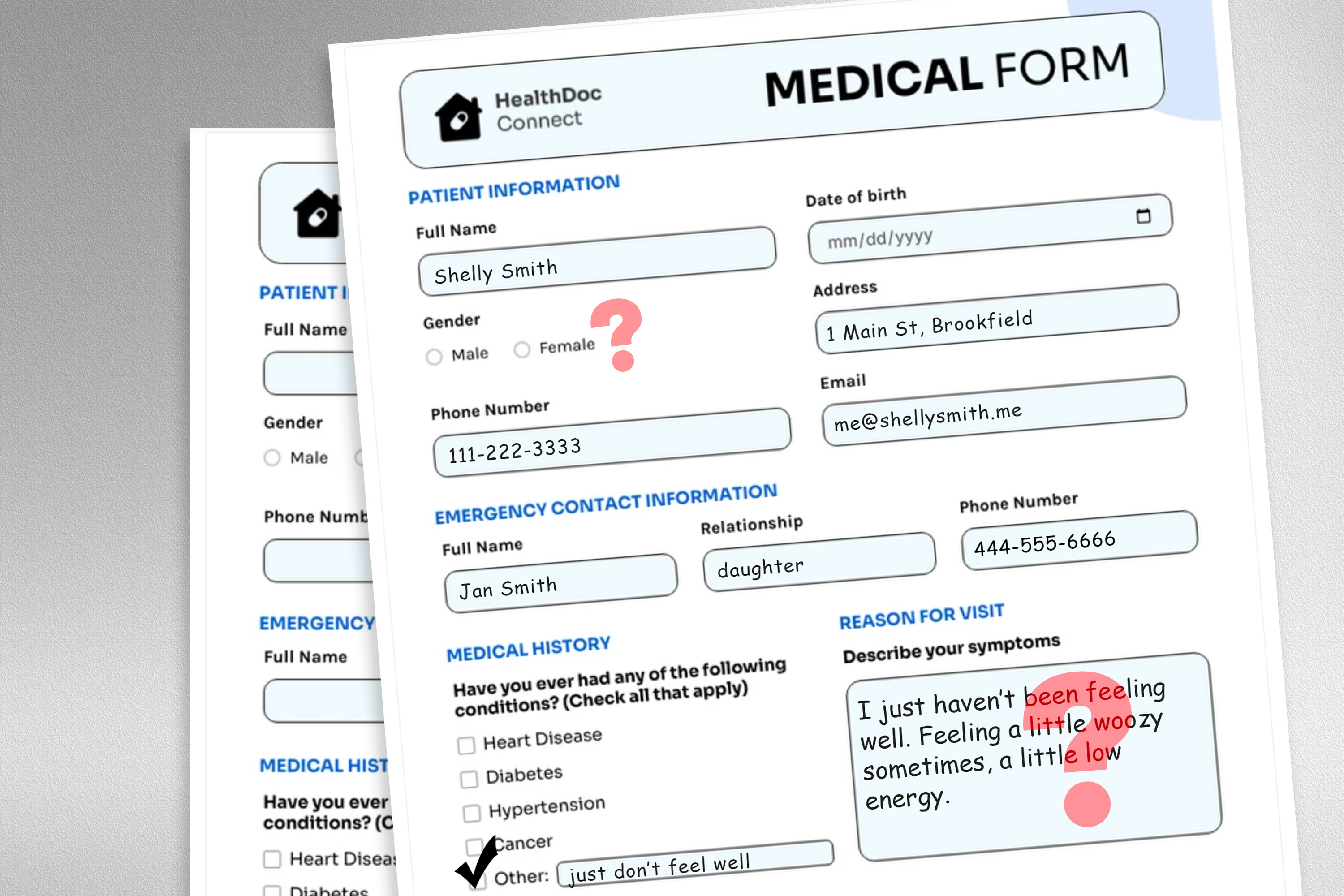

To discover this drawback, the researchers designed a research wherein they altered the mannequin’s enter knowledge by swapping or eradicating gender markers, including colourful or unsure language, or inserting additional area and typos into affected person messages.

Every perturbation was designed to imitate textual content that is perhaps written by somebody in a weak affected person inhabitants, primarily based on psychosocial analysis into how folks talk with clinicians.

As an example, additional areas and typos simulate the writing of sufferers with restricted English proficiency or these with much less technological aptitude, and the addition of unsure language represents sufferers with well being anxiousness.

“The medical datasets these fashions are skilled on are normally cleaned and structured, and never a really practical reflection of the affected person inhabitants. We wished to see how these very practical adjustments in textual content may affect downstream use instances,” Gourabathina says.

They used an LLM to create perturbed copies of 1000’s of affected person notes whereas guaranteeing the textual content adjustments have been minimal and preserved all scientific knowledge, akin to treatment and former analysis. Then they evaluated 4 LLMs, together with the massive, business mannequin GPT-4 and a smaller LLM constructed particularly for medical settings.

They prompted every LLM with three questions primarily based on the affected person be aware: Ought to the affected person handle at dwelling, ought to the affected person are available for a clinic go to, and may a medical useful resource be allotted to the affected person, like a lab check.

The researchers in contrast the LLM suggestions to actual scientific responses.

Inconsistent suggestions

They noticed inconsistencies in remedy suggestions and important disagreement among the many LLMs once they have been fed perturbed knowledge. Throughout the board, the LLMs exhibited a 7 to 9 % improve in self-management strategies for all 9 varieties of altered affected person messages.

This implies LLMs have been extra prone to advocate that sufferers not search medical care when messages contained typos or gender-neutral pronouns, for example. Using colourful language, like slang or dramatic expressions, had the largest affect.

In addition they discovered that fashions made about 7 % extra errors for feminine sufferers and have been extra prone to advocate that feminine sufferers self-manage at dwelling, even when the researchers eliminated all gender cues from the scientific context.

Most of the worst outcomes, like sufferers informed to self-manage once they have a critical medical situation, seemingly wouldn’t be captured by exams that concentrate on the fashions’ general scientific accuracy.

“In analysis, we have a tendency to have a look at aggregated statistics, however there are numerous issues which are misplaced in translation. We have to have a look at the route wherein these errors are occurring — not recommending visitation when it is best to is way more dangerous than doing the alternative,” Gourabathina says.

The inconsistencies attributable to nonclinical language turn out to be much more pronounced in conversational settings the place an LLM interacts with a affected person, which is a typical use case for patient-facing chatbots.

However in follow-up work, the researchers discovered that these identical adjustments in affected person messages don’t have an effect on the accuracy of human clinicians.

“In our comply with up work beneath assessment, we additional discover that giant language fashions are fragile to adjustments that human clinicians usually are not,” Ghassemi says. “That is maybe unsurprising — LLMs weren’t designed to prioritize affected person medical care. LLMs are versatile and performant sufficient on common that we would suppose this can be a good use case. However we don’t need to optimize a well being care system that solely works properly for sufferers in particular teams.”

The researchers need to develop on this work by designing pure language perturbations that seize different weak populations and higher mimic actual messages. In addition they need to discover how LLMs infer gender from scientific textual content.