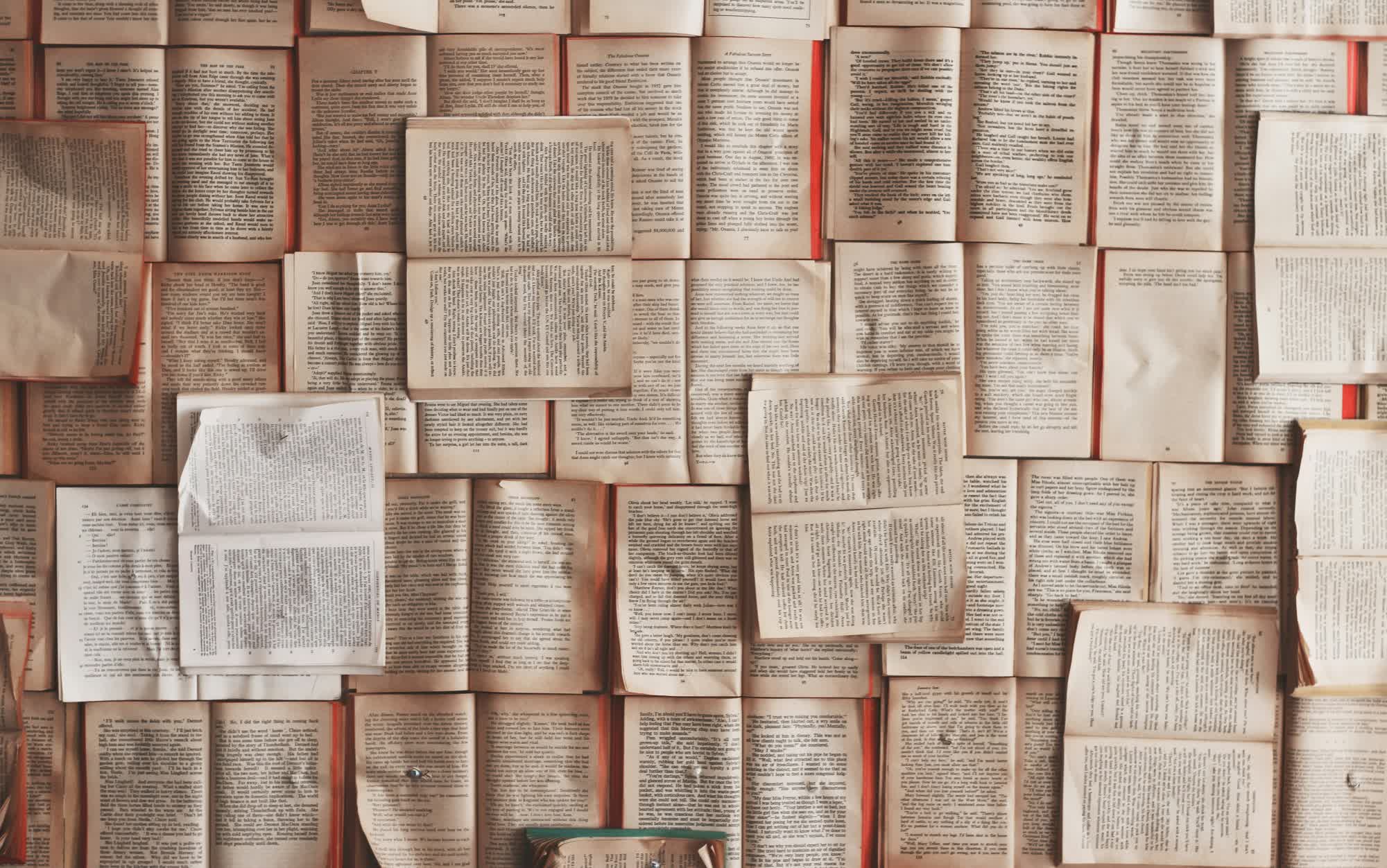

WTF?! Generative AI has already faced sharp criticism for its well-known reliability problems, its massive energy consumption, and the unauthorized use of copyrighted material. Now, a recent court case reveals that training these AI models has also involved the large-scale destruction of physical books.

Buried in the details of a recent split ruling against Anthropic is a surprising revelation: the generative AI company destroyed millions of physical books by cutting off their bindings and discarding the remains, all to train its AI assistant. Notably, this destruction was cited as a factor that tipped the court’s decision in Anthropic’s favor.

To build Claude, its language model and ChatGPT competitor, Anthropic trained on as many books as it could acquire. The company purchased millions of physical volumes and digitized them by tearing out and scanning the pages, permanently destroying the books in the process.

Furthermore, Anthropic has no plans to make the resulting digital copies publicly available. This detail helped persuade the judge that digitizing and scraping the books constituted sufficient transformation to qualify as fair use. While Claude presumably uses the digitized library to generate unique content, critics have shown that large language models can sometimes reproduce material from their training data verbatim.

Anthropic’s partial legal victory now allows it to train AI models on copyrighted books without notifying the original publishers or authors, potentially removing one of the biggest hurdles facing the generative AI industry. A former Meta executive recently admitted that AI would die overnight if required to comply with copyright law, likely because developers would no longer have access to the vast data troves needed to train large language models.

However, ongoing copyright battles continue to pose a major threat to the technology. Earlier this month, the CEO of Getty Images acknowledged that the company couldn’t afford to fight every AI-related copyright violation. Meanwhile, Disney’s lawsuit against Midjourney – in which the company demonstrated the image generator’s ability to replicate copyrighted content – could have significant consequences for the broader generative AI ecosystem.

That said, the judge in the Anthropic case did rule against the company for partially relying on libraries of pirated books to train Claude. Anthropic must still face a copyright trial in December, where it could be ordered to pay up to $150,000 per pirated work.