AI image generation, which relies on neural networks to create new images from a variety of inputs, including text prompts, is projected to become a billion-dollar industry by the end of this decade. Even with today’s technology, if you wanted to make a fanciful picture of, say, a friend planting a flag on Mars or heedlessly flying into a black hole, it could take less than a second. However, before they can perform tasks like that, image generators are commonly trained on massive datasets containing millions of images that are often paired with associated text. Training these generative models can be an arduous chore that takes weeks or months, consuming vast computational resources in the process.

But what if it were possible to generate images through AI methods without using a generator at all? That real possibility, along with other intriguing ideas, was described in a research paper presented at the International Conference on Machine Learning (ICML 2025), which was held in Vancouver, British Columbia, earlier this summer. The paper, describing novel techniques for manipulating and generating images, was written by Lukas Lao Beyer, a graduate student researcher in MIT’s Laboratory for Information and Decision Systems (LIDS); Tianhong Li, a postdoc at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL); Xinlei Chen of Facebook AI Research; Sertac Karaman, an MIT professor of aeronautics and astronautics and the director of LIDS; and Kaiming He, an MIT associate professor of electrical engineering and computer science.

This group effort had its origins in a class project for a graduate seminar on deep generative models that Lao Beyer took last fall. In conversations during the semester, it became apparent to both Lao Beyer and He, who taught the seminar, that this research had real potential, which went far beyond the confines of a typical homework assignment. Other collaborators were soon brought into the endeavor.

The starting point for Lao Beyer’s inquiry was a June 2024 paper, written by researchers from the Technical University of Munich and the Chinese company ByteDance, which introduced a new way of representing visual information called a one-dimensional tokenizer. With this tool, which is also a kind of neural network, a 256×256-pixel image can be translated into a sequence of just 32 numbers, called tokens. “I wanted to understand how such a high level of compression could be achieved, and what the tokens themselves actually represented,” says Lao Beyer.

The previous generation of tokenizers would typically break up the same image into an array of 16×16 tokens, with each token encapsulating information, in highly condensed form, that corresponds to a specific portion of the original image. The new 1D tokenizers can encode an image more efficiently, using far fewer tokens overall (32 instead of 256), and these tokens are able to capture information about the entire image, not just one small patch of it. Each of these tokens, moreover, is a 12-digit number consisting of 1s and 0s, allowing for 2¹² = 4,096 (roughly 4,000) possible values altogether. “It’s like a vocabulary of 4,000 words that makes up an abstract, hidden language spoken by the computer,” He explains. “It’s not like a human language, but we can still try to find out what it means.”
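To make the numbers above concrete, here is a back-of-the-envelope calculation. It is a sketch under stated assumptions. The article does not give the raw image format, so 8-bit RGB pixels are assumed. For the comparison, the older 16×16 grid is also assumed to use the same 12-bit vocabulary, although real 2D tokenizers vary. The helper names are illustrative only.

```python
def raw_image_bits(side: int = 256, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Bits in an uncompressed square image (8-bit RGB is an assumption)."""
    return side * side * channels * bits_per_channel


def sequence_bits(num_tokens: int, bits_per_token: int = 12) -> int:
    """Bits needed to store a token sequence, at 12 bits per token as in the article."""
    return num_tokens * bits_per_token


raw = raw_image_bits()          # 256 * 256 * 3 * 8 bits of raw pixel data
vocab_size = 2 ** 12            # distinct values a 12-digit binary token can take
patch_side = 256 // 16          # each cell of a 16x16 grid covers a 16x16-pixel patch
grid = sequence_bits(16 * 16)   # older 2D tokenizer: 256 tokens (same 12-bit vocabulary assumed)
oned = sequence_bits(32)        # 1D tokenizer: 32 tokens for the whole image

print(f"vocabulary size: {vocab_size}")
print(f"16x16 grid: {grid} bits ({raw // grid}x smaller than raw), one token per {patch_side}x{patch_side} patch")
print(f"1D sequence: {oned} bits ({raw // oned}x smaller than raw)")
```

Under these assumptions, the 32-token sequence occupies 384 bits. That is about 4,096 times smaller than the raw pixels and eight times smaller than the 16×16 grid.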

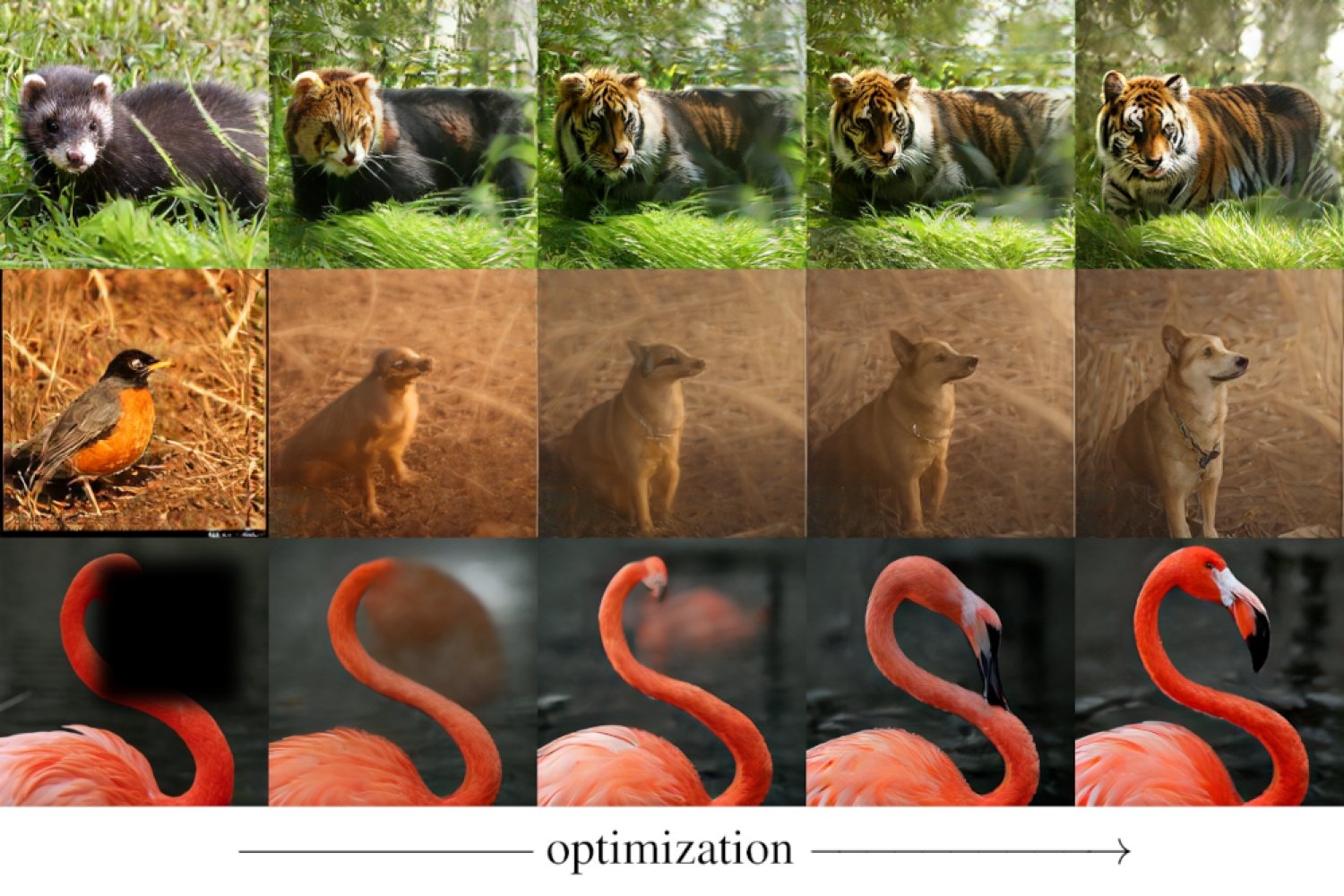

That’s exactly what Lao Beyer had initially set out to explore, work that provided the seed for the ICML 2025 paper. The approach he took was pretty straightforward. If you want to find out what a particular token does, Lao Beyer says, “you can just take it out, swap in some random value, and see if there is a recognizable change in the output.” Replacing one token, he found, changes the image quality, turning a low-resolution image into a high-resolution image or vice versa. Another token affected the blurriness in the background, while yet another influenced the brightness. He also found a token that’s related to the “pose,” meaning that, in the image of a robin, for instance, the bird’s head might shift from right to left.
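The swap-and-compare probe described above can be sketched in a few lines. This is a minimal illustration, not the researchers’ code. Both `toy_decoder` and `token_embedding` are hypothetical stand-ins for a real detokenizer: each (position, token value) pair deterministically contributes a fixed vector to a tiny 64-value “image”. The sketch replaces one position at a time with a random value and reports how much the decoded output moves. A single number cannot distinguish blur from brightness or pose. In the study, that judgment came from looking at the decoded images.

```python
import random
from functools import lru_cache

NUM_TOKENS = 32          # 32 tokens per image, as in the article
VOCAB_SIZE = 2 ** 12     # 12-bit tokens
IMAGE_PIXELS = 64        # hypothetical tiny flattened "image" so the sketch runs instantly


@lru_cache(maxsize=None)
def token_embedding(position: int, token: int) -> tuple[float, ...]:
    """Hypothetical fixed contribution of one token value at one sequence position."""
    rng = random.Random(position * VOCAB_SIZE + token)
    return tuple(rng.uniform(-1.0, 1.0) for _ in range(IMAGE_PIXELS))


def toy_decoder(tokens: list[int]) -> list[float]:
    """Stand-in for a real detokenizer: sums the embeddings of all tokens into pixels."""
    pixels = [0.0] * IMAGE_PIXELS
    for position, token in enumerate(tokens):
        for i, value in enumerate(token_embedding(position, token)):
            pixels[i] += value
    return pixels


def mean_abs_change(a: list[float], b: list[float]) -> float:
    """Average per-pixel difference between two decoded outputs."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def probe_tokens(tokens: list[int], rng: random.Random) -> list[float]:
    """Swap each position for a random value, one at a time, and measure the output change."""
    baseline = toy_decoder(tokens)
    effects = []
    for position in range(len(tokens)):
        perturbed = list(tokens)
        new_value = rng.randrange(VOCAB_SIZE)
        while new_value == tokens[position]:  # ensure the token really changes
            new_value = rng.randrange(VOCAB_SIZE)
        perturbed[position] = new_value
        effects.append(mean_abs_change(baseline, toy_decoder(perturbed)))
    return effects


rng = random.Random(0)
tokens = [rng.randrange(VOCAB_SIZE) for _ in range(NUM_TOKENS)]
effects = probe_tokens(tokens, rng)
most_influential = max(range(NUM_TOKENS), key=lambda p: effects[p])
print(f"largest change when swapping position {most_influential}: {effects[most_influential]:.3f}")
```

With a real tokenizer, you would save the decoded image for each swap and inspect it rather than rank positions by a scalar.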

“This was a never-before-seen result, as no one had observed visually identifiable changes from manipulating tokens,” Lao Beyer says. The finding raised the possibility of a new approach to editing images. And the MIT group has shown, in fact, how this process can be streamlined and automated, so that tokens don’t have to be modified by hand, one at a time.

Lao Beyer and his colleagues achieved an even more consequential result involving image generation. A system capable of generating images normally requires a tokenizer, which compresses and encodes visual data, along with a generator that can combine and arrange these compact representations in order to create novel images. The MIT researchers found a way to create images without using a generator at all. Their new approach makes use of a 1D tokenizer and a so-called detokenizer (also known as a decoder), which can reconstruct an image from a string of tokens. With guidance provided by an off-the-shelf neural network called CLIP, which cannot generate images on its own but can measure how well a given image matches a certain text prompt, the team was able to convert an image of a red panda, for example, into a tiger. In addition, they could create images of a tiger, or any other desired form, starting completely from scratch: all the tokens are initially assigned random values and then iteratively tweaked so that the reconstructed image increasingly matches the desired text prompt.
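The loop described above can be sketched as follows: decode the tokens, score the result against a prompt, adjust the tokens, and repeat. The article does not say how the researchers performed the optimization, so this sketch substitutes a simple greedy random search over token values purely for illustration. `toy_decoder` and `toy_clip_score` are hypothetical stand-ins, not the real detokenizer or the CLIP model. The “score” here is just cosine similarity to a made-up prompt vector. The key point survives the simplification: only the tokens change, while the decoder and the scorer stay frozen and no generator is trained.

```python
import math
import random
from functools import lru_cache

NUM_TOKENS = 32
VOCAB_SIZE = 2 ** 12
IMAGE_PIXELS = 64  # hypothetical tiny flattened "image"


@lru_cache(maxsize=None)
def token_embedding(position: int, token: int) -> tuple[float, ...]:
    """Hypothetical fixed contribution of one token value at one position."""
    rng = random.Random(position * VOCAB_SIZE + token)
    return tuple(rng.uniform(-1.0, 1.0) for _ in range(IMAGE_PIXELS))


def toy_decoder(tokens: list[int]) -> list[float]:
    """Stand-in for a frozen detokenizer: tokens in, pixels out."""
    pixels = [0.0] * IMAGE_PIXELS
    for position, token in enumerate(tokens):
        for i, value in enumerate(token_embedding(position, token)):
            pixels[i] += value
    return pixels


def toy_clip_score(image: list[float], text_embedding: list[float]) -> float:
    """Stand-in for CLIP: cosine similarity between an image vector and a 'prompt' vector."""
    dot = sum(a * b for a, b in zip(image, text_embedding))
    norms = math.sqrt(sum(a * a for a in image)) * math.sqrt(sum(b * b for b in text_embedding))
    return dot / norms if norms else 0.0


def optimize_tokens(tokens: list[int], text_embedding: list[float],
                    rounds: int = 2, candidates: int = 8, seed: int = 0) -> tuple[list[int], float]:
    """Greedy search: try random replacements at each position, keep any that raise the score."""
    rng = random.Random(seed)
    tokens = list(tokens)
    best = toy_clip_score(toy_decoder(tokens), text_embedding)
    for _ in range(rounds):
        for position in range(len(tokens)):
            for _ in range(candidates):
                trial = list(tokens)
                trial[position] = rng.randrange(VOCAB_SIZE)
                score = toy_clip_score(toy_decoder(trial), text_embedding)
                if score > best:
                    tokens, best = trial, score
    return tokens, best


prompt_rng = random.Random(42)
tiger_prompt = [prompt_rng.uniform(-1.0, 1.0) for _ in range(IMAGE_PIXELS)]  # hypothetical "a tiger" embedding

init_rng = random.Random(7)
start = [init_rng.randrange(VOCAB_SIZE) for _ in range(NUM_TOKENS)]  # from scratch: random tokens
start_score = toy_clip_score(toy_decoder(start), tiger_prompt)
final_tokens, final_score = optimize_tokens(start, tiger_prompt)
print(f"match before: {start_score:.3f}, after: {final_score:.3f}")
```

For the editing case (red panda to tiger), you would pass the existing image’s tokens as `start` instead of random ones, so the search begins from that picture.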

The group demonstrated that with this same setup, relying on a tokenizer and detokenizer but no generator, they could also do “inpainting,” which means filling in parts of images that had somehow been blotted out. Avoiding the use of a generator for certain tasks could lead to a significant reduction in computational costs because generators, as mentioned, normally require extensive training.
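One way to express inpainting in this tokenizer-only setting is as a search. You look for tokens whose decoded image matches the surviving pixels, then you let the decoder supply the missing ones. The sketch below assumes that framing. It uses a hypothetical toy decoder and the same stand-in greedy random search, not the authors’ procedure. With a toy decoder the filled-in values carry no real meaning. In a trained tokenizer, the decoder’s learned knowledge of images is what would make the fill look plausible.

```python
import random
from functools import lru_cache

NUM_TOKENS = 32
VOCAB_SIZE = 2 ** 12
IMAGE_PIXELS = 64  # hypothetical tiny flattened "image"


@lru_cache(maxsize=None)
def token_embedding(position: int, token: int) -> tuple[float, ...]:
    """Hypothetical fixed contribution of one token value at one position."""
    rng = random.Random(position * VOCAB_SIZE + token)
    return tuple(rng.uniform(-1.0, 1.0) for _ in range(IMAGE_PIXELS))


def toy_decoder(tokens: list[int]) -> list[float]:
    """Stand-in for a frozen detokenizer: tokens in, pixels out."""
    pixels = [0.0] * IMAGE_PIXELS
    for position, token in enumerate(tokens):
        for i, value in enumerate(token_embedding(position, token)):
            pixels[i] += value
    return pixels


def masked_error(image: list[float], target: list[float], known: list[bool]) -> float:
    """Squared error over surviving pixels only; blotted-out pixels (known False) are ignored."""
    return sum((a - b) ** 2 for a, b, k in zip(image, target, known) if k)


def inpaint(damaged: list[float], known: list[bool], rounds: int = 2, candidates: int = 8,
            seed: int = 0) -> tuple[list[float], list[int], float]:
    """Fit tokens to the visible pixels, then let the decoder fill the hole."""
    rng = random.Random(seed)
    tokens = [rng.randrange(VOCAB_SIZE) for _ in range(NUM_TOKENS)]
    best = masked_error(toy_decoder(tokens), damaged, known)
    for _ in range(rounds):
        for position in range(NUM_TOKENS):
            for _ in range(candidates):
                trial = list(tokens)
                trial[position] = rng.randrange(VOCAB_SIZE)
                error = masked_error(toy_decoder(trial), damaged, known)
                if error < best:
                    tokens, best = trial, error
    filled = toy_decoder(tokens)
    # Keep the surviving pixels as-is; use the decoder's output only inside the hole.
    repaired = [d if k else f for d, f, k in zip(damaged, filled, known)]
    return repaired, tokens, best


truth_rng = random.Random(3)
original = toy_decoder([truth_rng.randrange(VOCAB_SIZE) for _ in range(NUM_TOKENS)])
known = [i < 48 for i in range(IMAGE_PIXELS)]                 # last 16 pixels are blotted out
damaged = [p if k else 0.0 for p, k in zip(original, known)]
repaired, tokens, error = inpaint(damaged, known)
print(f"error on visible pixels after fitting: {error:.3f}")
```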

What might seem odd about this team’s contributions, He explains, “is that we didn’t invent anything new. We didn’t invent a 1D tokenizer, and we didn’t invent the CLIP model, either. But we did discover that new capabilities can arise when you put all these pieces together.”

“This work redefines the role of tokenizers,” comments Saining Xie, a computer scientist at New York University. “It shows that image tokenizers, tools usually used just to compress images, can actually do a lot more. The fact that a simple (but highly compressed) 1D tokenizer can handle tasks like inpainting or text-guided editing, without needing to train a full-blown generative model, is pretty surprising.”

Zhuang Liu of Princeton University agrees, saying that the work of the MIT group “shows that we can generate and manipulate the images in a way that is much easier than we previously thought. Basically, it demonstrates that image generation can be a byproduct of a very effective image compressor, potentially reducing the cost of generating images several-fold.”

There could be many applications outside the field of computer vision, Karaman suggests. “For instance, we could consider tokenizing the actions of robots or self-driving cars in the same way, which may rapidly broaden the impact of this work.”

Lao Beyer is thinking along similar lines, noting that the high degree of compression afforded by 1D tokenizers allows you to do “some amazing things,” which could be applied to other fields. For example, in the area of self-driving cars, which is one of his research interests, the tokens could represent, instead of images, the different routes that a vehicle might take.

Xie is also intrigued by the applications that may come from these innovative ideas. “There are some really cool use cases this could unlock,” he says.