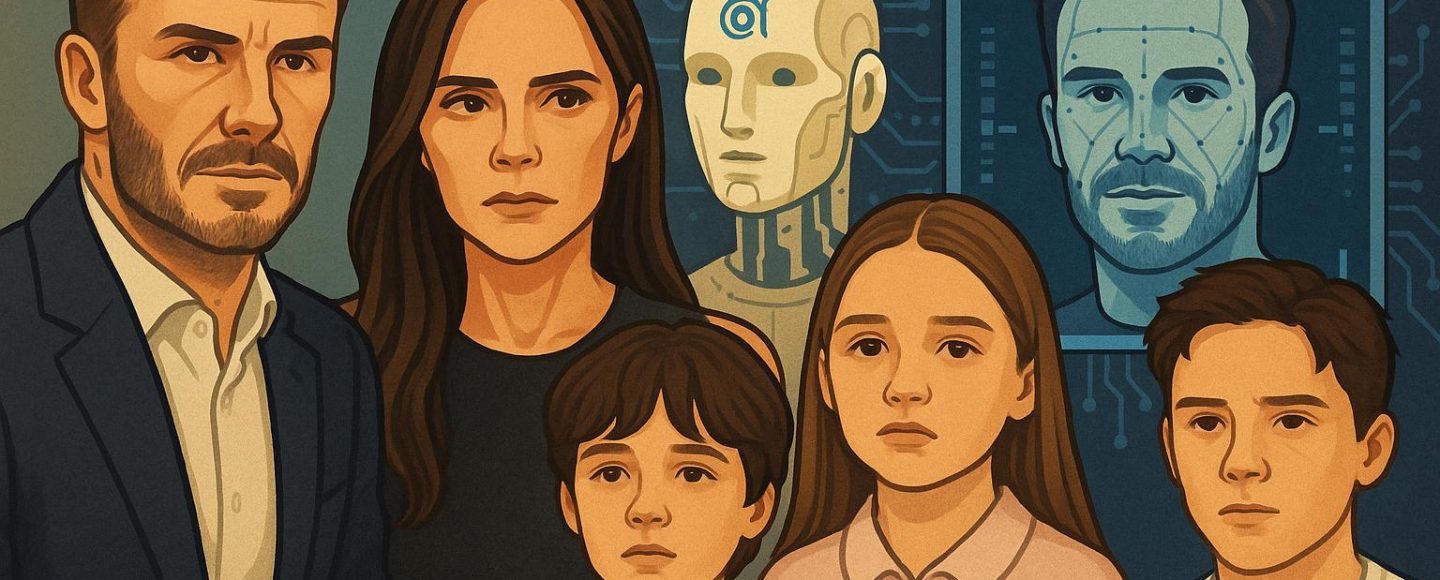

AI Deepfake Targets Beckham Household

The AI deepfake targeting the Beckham household shines a harsh spotlight on a disturbing pattern of AI-manipulated media aimed at high-profile figures. A realistic but entirely fabricated video portraying an argument between Brooklyn and Victoria Beckham has gone viral, triggering a frenzy of speculation and misinformation across social media platforms. The incident adds to the growing list of deepfake hoaxes and raises broader concerns about technology, public image, and media authenticity. As synthetic content becomes harder to distinguish from reality, we need to understand how it is created, identify it more effectively, and demand stronger legal, ethical, and digital tools to protect ourselves. This article examines the technology behind deepfakes, the legal grey areas surrounding their use, and concrete strategies for identifying and responding to misinformation in the digital age.

Key Takeaways

- AI deepfake videos depicting Brooklyn and Victoria Beckham in conflict are entirely fabricated but widely believed.

- Experts highlight serious ethical and legal concerns about the use of deepfakes to manipulate celebrity narratives.

- Deepfakes are increasingly hard to detect and are fueling misinformation in modern media culture.

- Improved public media literacy and regulation are urgently needed to prevent harm from synthetic digital media.

What Was in the Fake AI Video Featuring the Beckhams?

The AI-generated video in question shows what appears to be a heated private dispute between Brooklyn Beckham and his mother, Victoria Beckham. Neither person is clearly seen speaking, but realistic voice mimicry and facial mapping create the illusion of an intense exchange. The deepfake was posted on TikTok and quickly spread to other platforms, amassing millions of views within hours.

The fabricated video insinuates a fractured relationship within the high-profile family, suggesting disagreements over lifestyle choices and personal boundaries. Viewers, unaware of its artificial origin, largely accepted it as authentic, fueling online debates about the family’s private lives. Although no footage of such an incident exists in verified public records or media, the realistic rendering led many to believe it had been leaked from a private moment.

On platforms like Twitter, Instagram, and TikTok, commentators speculated widely about a possible rift within the Beckham family. Memes, reaction videos, and hot takes quickly emerged, often amplifying the false narrative the deepfake started. Several users expressed shock and concern, while others criticized the public intrusion into private matters.

This phenomenon shows how quickly public perception can be shaped by unverified content. A 2023 Stanford Internet Observatory report found that over 55 percent of users had difficulty confidently distinguishing AI-altered video from real media, especially when it involved recognizable faces or voices.

The Beckham family has made no official comment, which has fueled even more speculation. Experts argue that this silence reflects the inherent dilemma celebrities face in addressing fake content: a public denial may inadvertently validate or amplify the lie, while silence can appear suspicious or indifferent.

The Technology Behind Celebrity Deepfakes

Deepfakes are generated using deep learning, particularly Generative Adversarial Networks (GANs) and autoencoder-based face-swapping models. These AI systems train on thousands of real images, videos, and speech samples to build a synthetic replica. The result is highly convincing content that mimics vocal tone, facial expressions, and body movements almost flawlessly.
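To make the "adversarial" part of a GAN concrete, the sketch below computes the standard GAN losses from a discriminator's output scores. A generator tries to produce fakes the discriminator scores as real, while the discriminator is trained to score real samples near 1 and fakes near 0. This is a minimal illustration only: the neural networks themselves are omitted, the score values are made-up examples, and real deepfake tools use far larger models (often autoencoders) rather than this exact setup.

```python
import math

# Minimal illustration of the standard GAN losses (Goodfellow et al., 2014).
# "Scores" stand in for a discriminator's output probabilities that a sample
# is real; in a real system they would come from a neural network (omitted).

EPS = 1e-12  # guards against log(0)


def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: low when real samples score high and fakes score low."""
    real_term = sum(-math.log(max(s, EPS)) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(max(1.0 - s, EPS)) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return sum(-math.log(max(s, EPS)) for s in fake_scores) / len(fake_scores)


if __name__ == "__main__":
    real = [0.9, 0.8]          # hypothetical scores for genuine frames
    crude_fakes = [0.1, 0.2]   # hypothetical early-training fakes, easily caught
    better_fakes = [0.6, 0.7]  # hypothetical later fakes that fool the discriminator
    print(generator_loss(crude_fakes) > generator_loss(better_fakes))
    print(discriminator_loss(real, crude_fakes) < discriminator_loss(real, better_fakes))
```

As training alternates between the two losses, each network pushes the other to improve, which is why output quality can climb until fakes become hard for humans to spot.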

Open-source tools like DeepFaceLab and user-friendly apps such as Reface and Zao have lowered the barrier to entry. With only moderate technical skills, someone can produce fairly convincing deepfakes. The sophistication of AI models like StyleGAN and voice emulators from companies like Descript further blurs the line between reality and fiction.

This level of accessibility increases the risk of manipulation, especially as some creators aim to confuse, satirize, or even defame others. In the celebrity world, where images and reputations are high-stakes commodities, the threat is magnified.

Legal and Ethical Concerns Around Deepfakes

Laws governing deepfakes currently vary considerably by region. In the UK, content that defames a public figure or invades their privacy can be grounds for legal action, but pursuing the creators of deepfakes remains complex. In the US, legislation such as California’s AB 730 restricts the distribution of materially deceptive deepfake videos of political candidates in the run-up to elections. Regulation of entertainment-based content remains minimal.

Entertainment lawyers and digital privacy specialists argue that legal frameworks have not adapted quickly enough to this rapidly evolving issue. Professor Marina Hall, an expert in ethics and AI regulation at NYU, commented, “We’re watching the weaponization of identity for entertainment and misinformation. Without clear guidelines, victims of deepfakes have limited recourse beyond public clarification, which is rarely satisfactory.”

Ethically, deepfakes raise serious concerns about consent, manipulation, and character assassination. When celebrity identities can be repurposed without permission, it diminishes their agency and distorts public understanding of reality.

Historical Context: Not the First Celebrity Deepfake

This is not the first time a celebrity has been targeted by deepfake technology. Widely circulated AI-generated videos of Tom Cruise surfaced on TikTok in 2021, captivating audiences with their accuracy. In 2023, Taylor Swift appeared in falsified videos promoting cryptocurrency platforms, which were promptly flagged by fact-checkers and removed after public outcry.

Jamie Lee Curtis also condemned deepfake videos that misused her likeness in offensive contexts. These examples make clear that deepfakes cause commercial, reputational, and social harm. Each high-profile case adds urgency to the growing demand for technological transparency and accountability.

How to Spot a Deepfake: Tips for Digital Literacy

As deepfake technology improves, distinguishing synthetic content from real footage becomes harder. However, there are several red flags and tools viewers can use to verify authenticity:

- Audio-visual inconsistencies: Watch for unnatural blinking, misaligned shadows, or lip-sync anomalies.

- Source verification: Check whether mainstream or reputable media outlets have reported on the incident. Most professional outlets verify videos before publishing.

- Reverse search tools: Run key frames through a reverse image search such as Google’s, or use deepfake detection software such as Deepware Scanner or Microsoft’s Video Authenticator, to trace where the footage came from and check for manipulation.

- Fact-checking sites: Consult platforms such as Snopes or PolitiFact that specialize in debunking viral misinformation.
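Reverse image search works by matching a frame against visually similar images even after re-encoding or minor edits. Search engines do not publish their exact methods, so the sketch below uses a simple "average hash" (aHash), one well-known perceptual-hashing technique, to illustrate the general idea. It assumes frames have already been decoded and downscaled to small grayscale grids (that step, normally done with an imaging library, is omitted), and the `max_distance` threshold is an illustrative value, not a calibrated one.

```python
# Simplified "average hash" (aHash): a perceptual fingerprint that stays
# stable under small changes such as re-compression or brightness shifts.


def average_hash(pixels):
    """pixels: 2D list of grayscale values (0-255), already downscaled (e.g. 8x8)."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    # Each bit records whether a pixel is brighter than the frame's average.
    return [1 if p > mean else 0 for p in flat]


def hamming_distance(hash_a, hash_b):
    """Number of differing bits; small distances suggest near-duplicate frames."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have equal length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def likely_same_source(frame_a, frame_b, max_distance=5):
    """Hypothetical helper; max_distance is illustrative, not a tuned threshold."""
    return hamming_distance(average_hash(frame_a), average_hash(frame_b)) <= max_distance


if __name__ == "__main__":
    original = [[10, 20, 200, 210], [15, 25, 205, 215],
                [12, 22, 198, 220], [11, 30, 190, 230]]
    brightened = [[p + 20 for p in row] for row in original]  # uniform brightness edit
    mirrored = [list(reversed(row)) for row in original]      # visually different frame
    print(likely_same_source(original, brightened))
    print(likely_same_source(original, mirrored))
```

A match like this only shows that a clip reuses existing footage; it cannot by itself prove that a face or voice was synthesized, which is why the tips above pair search with source checks and dedicated detectors.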

Building public awareness is key to reducing the damage deepfakes can cause.

Expert Opinions on the Growing Impact of AI Deepfakes

Experts from various fields warn of mounting challenges as synthetic media becomes more prevalent. Dr. Ellis Park, a cybersecurity researcher at the London School of Digital Trust, believes that “we’re entering an era where video evidence will no longer automatically equate to truth.”

Digital imaging specialist Zara Flynn adds that synthetic content is now easier to generate than ever before. When malicious actors combine emotional narratives with advanced AI tools, public sentiment can be hijacked quickly.

Media psychologist Dr. Karen Zhou advises viewers to recalibrate their internal trust filters. She explains that the more familiar or emotionally charged content appears, the more skepticism it warrants. Virality alone is not proof of truth.

Recent backlash over AI-generated content featuring David Attenborough also highlighted growing public discomfort. This underscores the importance of critical thinking and content verification in today’s digital environment.

What Can Celebrities and the Public Do to Protect Themselves?

Although legal protections are still catching up with these rapid changes, there are practical steps both celebrities and the general public can take to mitigate harm from deepfakes:

- Monitoring tools: Celebrities should work with digital security specialists who use AI detection software to monitor misuse of their likeness. Proactive monitoring enables faster takedown requests and limits the viral spread of manipulated content.

- Content watermarking: Embedding authenticity markers into media can help platforms and viewers identify manipulated content. Cryptographic watermarking and provenance standards strengthen trust by allowing verification at the point of distribution.

- Platform engagement: Public figures and creators should maintain direct escalation channels with major platforms to streamline reporting and removal. Clear documentation of identity and prior content ownership speeds up enforcement.

- Legal preparedness: Establishing legal response frameworks in advance, including intellectual property and defamation strategies, reduces response time when violations occur. Early consultation with counsel also strengthens deterrence.

- Public education: Contributing to media literacy campaigns helps equip audiences with the skills to recognize altered content. A better-informed public is less likely to amplify manipulated media.

- Push for regulation: Influencers and advocacy groups can lobby for stronger legislative measures against deepfake-related threats. Coordinated advocacy can accelerate the development of clearer liability standards and platform accountability.
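The provenance idea behind content authentication can be illustrated with a keyed signature: a publisher computes a tag over the exact bytes of a clip, and a platform later recomputes it, so any alteration breaks the match. The sketch below uses Python’s standard `hmac` module with a hypothetical shared key as a simplified stand-in. It is not how invisible watermarks are embedded, and real provenance standards such as C2PA rely on public-key certificates and signed manifests rather than a shared secret.

```python
import hashlib
import hmac

# Hypothetical shared secret held by a publisher and a verifying platform.
# Real provenance systems use public-key signatures instead of a shared key.
PUBLISHER_KEY = b"example-secret-key"


def sign_media(media_bytes: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Return a hex tag that binds the key holder to these exact bytes."""
    return hmac.new(key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """True only if the bytes are unchanged since signing with the same key."""
    expected = sign_media(media_bytes, key)
    return hmac.compare_digest(expected, tag)  # constant-time comparison


if __name__ == "__main__":
    clip = b"placeholder video bytes"  # stands in for a real video file's contents
    tag = sign_media(clip)
    print(verify_media(clip, tag))              # unchanged clip verifies
    print(verify_media(clip + b"edited", tag))  # any edit breaks verification
```

The design trade-off is that a detached tag proves a file is unaltered but is lost if the file is re-encoded or screen-recorded, which is one reason provenance metadata is often paired with watermarks embedded in the content itself.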

For the general public, practical habits matter. Limiting the sharing of high-resolution images, adjusting privacy settings, and being cautious about unfamiliar platforms all reduce exposure. Individuals should also document incidents thoroughly to support platform or legal action if necessary.

Conclusion

Deepfakes represent a structural shift in how digital identity can be manipulated at scale. While regulatory approaches continue to evolve, harm reduction depends on a combination of technological safeguards, legal preparedness, institutional accountability, and public awareness. No single solution will eliminate the threat. Sustained collaboration between creators, platforms, policymakers, and the public is essential to preserve trust and protect digital identity in an era of synthetic media.