Introduction

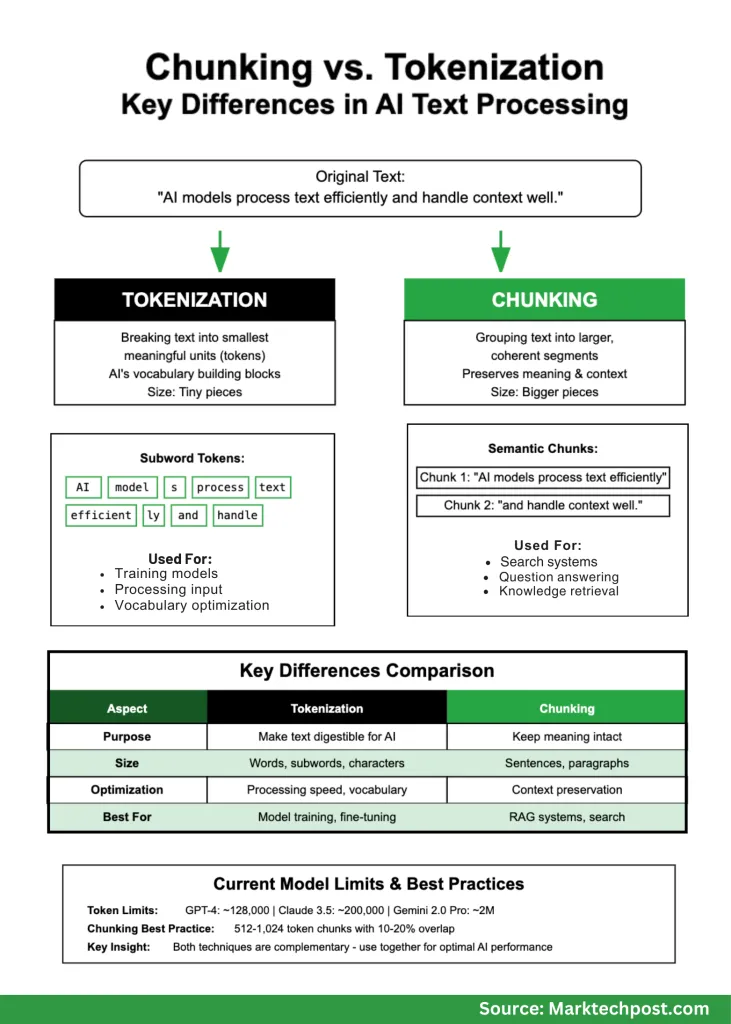

When you’re working with AI and natural language processing, you’ll quickly encounter two fundamental concepts that often get confused: tokenization and chunking. While both involve breaking text into smaller pieces, they serve completely different purposes and work at different scales. If you’re building AI applications, understanding these differences isn’t just academic; it’s crucial for creating systems that actually work well.

Think of it this way: if you’re making a sandwich, tokenization is like cutting your ingredients into bite-sized pieces, while chunking is like organizing those pieces into logical groups that make sense to eat together. Both are necessary, but they solve different problems.

What’s Tokenization?

Tokenization is the process of breaking text into the smallest meaningful units that AI models can understand. These units, called tokens, are the basic building blocks that language models work with. You can think of tokens as the “words” in an AI’s vocabulary, although they’re often smaller than actual words.

There are several ways to create tokens:

Word-level tokenization splits text at spaces and punctuation. It’s simple but struggles with rare words that the model has never seen before.

Subword tokenization is more sophisticated and is the most widely used approach today. Algorithms like Byte Pair Encoding (BPE) and WordPiece, and libraries like SentencePiece, break words into smaller pieces based on how frequently character combinations appear in training data. This approach handles new or rare words much better.

Character-level tokenization treats each character as a token. It’s simple but produces very long sequences that are harder for models to process efficiently.
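A minimal sketch of the two simpler strategies, using only Python’s standard `re` module, makes the sequence-length trade-off concrete. The splitting rule here (runs of word characters, with each punctuation mark as its own token) is an assumption for illustration; production word tokenizers handle contractions, hyphens, and Unicode far more carefully.

```python
import re

def word_tokenize(text: str) -> list[str]:
    # Runs of letters/digits become tokens; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text: str) -> list[str]:
    # Every character, including spaces, becomes a token.
    return list(text)

text = "AI models process text efficiently."
words = word_tokenize(text)
chars = char_tokenize(text)
print(words)  # ['AI', 'models', 'process', 'text', 'efficiently', '.']
print(len(words), "word tokens vs", len(chars), "character tokens")  # 6 word tokens vs 35 character tokens
```

The same sentence is almost six times longer at the character level, which is the cost the paragraph above describes.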

Here’s a practical example:

- Original text: “AI models process text efficiently.”

- Word tokens: [“AI”, “models”, “process”, “text”, “efficiently”, “.”]

- Subword tokens (illustrative; actual splits depend on the tokenizer’s learned vocabulary): [“AI”, “model”, “s”, “process”, “text”, “efficient”, “ly”, “.”]

Notice how subword tokenization splits “models” into “model” and “s” because these patterns appear frequently in training data. This helps the model relate words like “modeling” or “modeled” to “model” even if it hasn’t seen them before.
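Why do frequent patterns end up as tokens? Byte Pair Encoding builds its vocabulary by repeatedly merging the most frequent adjacent pair of symbols in the training data. The toy sketch below assumes a tiny hypothetical word-frequency corpus and omits details real implementations add (end-of-word markers, byte-level alphabets, pre-tokenization rules), so treat it as an illustration of the idea rather than a drop-in implementation.

```python
from collections import Counter

def merge_symbols(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def learn_bpe(word_freqs: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    """Learn merge rules by repeatedly fusing the most frequent adjacent pair."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}  # start from characters
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # ties: first pair seen wins
        merges.append(best)
        vocab = {merge_symbols(s, best): f for s, f in vocab.items()}
    return merges

def segment(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Apply the learned merges, in the order they were learned, to any word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return list(symbols)

# Hypothetical toy corpus: word -> frequency.
corpus = {"model": 10, "models": 8, "modeled": 4, "modeling": 3}
merges = learn_bpe(corpus, num_merges=4)
print(merges)                      # [('m', 'o'), ('mo', 'd'), ('mod', 'e'), ('mode', 'l')]
print(segment("models", merges))   # ['model', 's']
print(segment("modeler", merges))  # ['model', 'e', 'r']  (not in the corpus)
```

Because “model” is the most frequent shared pattern, it becomes a single token after four merges, and even the unseen word “modeler” is segmented around it.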

What’s Chunking?

Chunking takes a completely different approach. Instead of breaking text into tiny pieces, it groups text into larger, coherent segments that preserve meaning and context. When you’re building applications like chatbots or search systems, you need these larger chunks to maintain the flow of ideas.

Think about reading a research paper. You wouldn’t want every sentence scattered randomly; you’d want related sentences grouped together so the ideas make sense. That’s exactly what chunking does for AI systems.

Here’s how it works in practice:

- Original text: “AI models process text efficiently. They rely on tokens to capture meaning and context. Chunking enables better retrieval.”

- Chunk 1: “AI models process text efficiently.”

- Chunk 2: “They rely on tokens to capture meaning and context.”

- Chunk 3: “Chunking enables better retrieval.”
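A naive sentence splitter is enough to reproduce the three chunks in the example above. This sketch assumes a sentence ends at `.`, `!`, or `?` followed by whitespace, which will mis-split abbreviations like “e.g.”, so real pipelines usually rely on a proper sentence segmenter.

```python
import re

def sentence_chunks(text: str) -> list[str]:
    # Split after sentence-ending punctuation that is followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

text = ("AI models process text efficiently. They rely on tokens to capture "
        "meaning and context. Chunking enables better retrieval.")
for i, chunk in enumerate(sentence_chunks(text), start=1):
    print(f"Chunk {i}: {chunk}")
```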

Modern chunking strategies have become quite sophisticated:

Fixed-length chunking creates chunks of a set size (such as 500 words or 1,000 characters). It’s predictable but sometimes splits related ideas awkwardly.
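Fixed-length chunking takes only a few lines. This sketch counts words (an assumption; many systems count characters or tokens instead) and uses a deliberately tiny limit so the awkward break is easy to see:

```python
def fixed_length_chunks(text: str, max_words: int) -> list[str]:
    """Cut the text every `max_words` words, ignoring sentence boundaries."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

text = "AI models process text efficiently. They rely on tokens to capture meaning and context."
for chunk in fixed_length_chunks(text, max_words=5):
    print(chunk)
```

The second chunk ends mid-phrase (“They rely on tokens to”) and the third resumes with “capture meaning and context.”, exactly the kind of split described above.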

Semantic chunking is smarter: it looks for natural breakpoints where topics change, using AI models to detect when the text shifts from one idea to another.
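Semantic chunking typically compares consecutive sentences and starts a new chunk when their similarity drops. Real systems compare sentence embeddings from a model; to keep this sketch self-contained, it substitutes a bag-of-words cosine similarity, and both that stand-in and the `threshold` value are assumptions you would tune, not recommendations.

```python
import math
import re
from collections import Counter

def bow_vector(sentence: str) -> Counter:
    """Stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def semantic_chunks(sentences: list[str], threshold: float = 0.1) -> list[list[str]]:
    """Group consecutive sentences; open a new chunk when similarity falls below threshold."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(bow_vector(prev), bow_vector(cur)) < threshold:
            chunks.append([cur])    # likely topic shift: start a new chunk
        else:
            chunks[-1].append(cur)  # same topic: extend the current chunk
    return chunks

sentences = [
    "Tokenizers split text into tokens.",
    "Subword tokens help with rare words.",
    "Our office moved to a new building.",
    "The new building has a large cafeteria.",
]
for chunk in semantic_chunks(sentences):
    print(chunk)
```

The two tokenization sentences share vocabulary and stay together; the switch to the office move scores zero similarity and opens a second chunk.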

Recursive chunking works hierarchically, first trying to split at paragraph breaks, then at sentences, then at smaller units if needed.
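Here is a minimal recursive splitter, assuming a character budget (`max_chars`) and a separator hierarchy of blank lines, then sentence ends, then whitespace. Pieces that are still too long are split with the next separator down, and small neighboring pieces are greedily re-packed up to the budget. It is one reasonable variant, not a reference implementation.

```python
import re

# Separator hierarchy: blank lines (paragraphs), then sentence ends, then whitespace.
SEPARATORS = [r"\n\s*\n", r"(?<=[.!?])\s+", r"\s+"]

def recursive_chunks(text: str, max_chars: int, level: int = 0) -> list[str]:
    text = text.strip()
    if not text:
        return []
    # Small enough, or no finer separator left: keep as a single piece.
    if len(text) <= max_chars or level == len(SEPARATORS):
        return [text]
    pieces: list[str] = []
    for part in re.split(SEPARATORS[level], text):
        pieces.extend(recursive_chunks(part, max_chars, level + 1))
    # Greedily re-pack neighboring pieces so chunks stay close to max_chars.
    packed: list[str] = []
    for piece in pieces:
        if packed and len(packed[-1]) + 1 + len(piece) <= max_chars:
            packed[-1] += " " + piece
        else:
            packed.append(piece)
    return packed

doc = "First paragraph has one short sentence.\n\nSecond paragraph is longer. It has two sentences."
print(recursive_chunks(doc, max_chars=50))  # paragraphs fit, so they stay whole
print(recursive_chunks(doc, max_chars=40))  # second paragraph falls back to sentences
```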

Sliding window chunking creates overlapping chunks so that important context isn’t lost at chunk boundaries.
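A sliding window can be built on top of any unit. This sketch slides over sentences using hypothetical `window` and `stride` parameters; consecutive chunks share `window - stride` sentences, so any stride smaller than the window produces overlap.

```python
def sliding_window_chunks(sentences: list[str], window: int = 2, stride: int = 1) -> list[str]:
    """Join `window` consecutive sentences per chunk, advancing `stride` sentences each step."""
    if window < 1 or not 1 <= stride <= window:
        raise ValueError("need window >= 1 and 1 <= stride <= window (no gaps)")
    chunks = []
    for start in range(0, len(sentences), stride):
        chunks.append(" ".join(sentences[start:start + window]))
        if start + window >= len(sentences):  # this window already reaches the end
            break
    return chunks

sentences = [
    "AI models process text efficiently.",
    "They rely on tokens to capture meaning and context.",
    "Chunking enables better retrieval.",
]
for chunk in sliding_window_chunks(sentences, window=2, stride=1):
    print(chunk)
```

The middle sentence appears in both chunks, so a question that depends on it can be answered from either one.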

The Key Differences That Matter

Understanding when to use each approach makes all the difference in your AI applications:

| Aspect | Tokenization | Chunking |
|---|---|---|
| Size | Tiny pieces (words, parts of words) | Bigger pieces (sentences, paragraphs) |
| Goal | Make text digestible for AI models | Keep meaning intact for humans and AI |
| When You Use It | Training models, processing input | Search systems, question answering |
| What You Optimize For | Processing speed, vocabulary size | Context preservation, retrieval accuracy |

Why This Matters for Real Applications

For AI Model Performance

When you’re working with language models, tokenization directly affects how much you pay and how fast your system runs. Models like GPT-4 are billed by the token, so efficient tokenization saves money. Token counts also determine how much text fits in a model’s context window, and current models have different limits:

- GPT-4 (Turbo and later variants): Around 128,000 tokens

- Claude 3.5: Up to 200,000 tokens

- Gemini 2.0 Pro: Up to 2 million tokens
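Before sending a long document to a model, it helps to check whether it fits. This sketch uses the common rule of thumb of roughly four characters per token for English text, which is only an approximation; exact counts require the specific model’s own tokenizer. The limits copy the figures listed above, and the `reserve_for_output` headroom is an assumption (on many APIs, generated output shares the same window).

```python
import math

# Context-window sizes from the list above; providers change these, so verify before relying on them.
CONTEXT_LIMITS = {
    "GPT-4": 128_000,
    "Claude 3.5": 200_000,
    "Gemini 2.0 Pro": 2_000_000,
}

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate for English text (a rule of thumb, not a real tokenizer)."""
    return math.ceil(len(text) / chars_per_token)

def fits_in_context(text: str, model: str, reserve_for_output: int = 4_000) -> bool:
    """Assumes output shares the context window, so leave some headroom for it."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_LIMITS[model]

document = "word " * 150_000  # hypothetical 750,000-character document
print(estimate_tokens(document))  # 187500
for model in CONTEXT_LIMITS:
    print(model, fits_in_context(document, model))
```

Under these assumptions the document overflows a 128,000-token window but fits in the larger ones; if it did not fit anywhere, that is the point where chunking takes over.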

Recent research suggests that larger models work better with larger vocabularies. For example, LLaMA-2 70B uses a vocabulary of about 32,000 tokens, but one scaling study estimates it would likely perform better with around 216,000. This matters because vocabulary size affects both model quality and efficiency.
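One concrete cost of a bigger vocabulary is the embedding table, since each token needs its own learned vector. Assuming a hidden size of 8,192 (LLaMA-2 70B’s published model dimension), the arithmetic for the two vocabulary sizes above works out as follows:

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    # One learned vector of length hidden_size per vocabulary entry.
    return vocab_size * hidden_size

HIDDEN_SIZE = 8_192  # assumption: LLaMA-2 70B model dimension
for vocab in (32_000, 216_000):
    print(f"{vocab:>7,} tokens -> {embedding_params(vocab, HIDDEN_SIZE):,} embedding parameters")
```

That is roughly 262 million versus 1.77 billion parameters for the input embeddings alone. Either figure is a small fraction of 70 billion total parameters, though models with separate input and output embedding matrices pay the cost roughly twice.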

For Search and Question-Answering Systems

Chunking strategy can make or break your RAG (Retrieval-Augmented Generation) system. If your chunks are too small, you lose context. Too large, and you overwhelm the model with irrelevant information. Get it right, and your system provides accurate, useful answers. Get it wrong, and you get hallucinations and poor results.
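The retrieval step is where chunking decisions show up, because the model only sees the chunks that come back. This sketch ranks chunks by how many words they share with the query, a deliberately simple stand-in for the embedding similarity search most RAG systems use; the `retrieve` function, its scoring, and the prompt template are assumptions for illustration.

```python
import re

def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks sharing the most words with the query (ties keep input order)."""
    query_terms = terms(query)
    ranked = sorted(chunks, key=lambda chunk: len(query_terms & terms(chunk)), reverse=True)
    return ranked[:k]

chunks = [
    "AI models process text efficiently.",
    "They rely on tokens to capture meaning and context.",
    "Chunking enables better retrieval.",
]
query = "How does chunking help retrieval?"
context = "\n".join(retrieve(query, chunks, k=1))
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
print(prompt)
```

If the relevant sentence had been split across two chunks, neither half might score well, which is how poor chunk boundaries turn into missing context in the prompt.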

Companies building enterprise AI systems have found that smart chunking strategies significantly reduce those frustrating cases where the AI makes up facts or gives nonsensical answers.

Where You’ll Use Each Technique

Tokenization Is Essential For:

Training new models – You can’t train a language model without first tokenizing your training data. The tokenization strategy shapes how well the model learns.

Fine-tuning existing models – When you adapt a pre-trained model to your specific domain (like medical or legal text), you need to carefully consider whether the existing tokenization works for your specialized vocabulary.

Cross-language applications – Subword tokenization is especially valuable when working with languages that have complex word structures or when building multilingual systems.

Chunking Is Crucial For:

Building company knowledge bases – When you want employees to ask questions and get accurate answers from your internal documents, proper chunking helps ensure the AI retrieves relevant, complete information.

Document analysis at scale – Whether you’re processing legal contracts, research papers, or customer feedback, chunking helps preserve document structure and meaning.

Search systems – Modern search goes beyond keyword matching. Semantic chunking helps systems understand what users really want and retrieve the most relevant information.

Current Best Practices (What Actually Works)

After watching many real-world implementations, here’s what tends to work:

For Chunking:

- Start with chunks of 512 to 1,024 tokens for most applications

- Add 10-20% overlap between chunks to preserve context

- Use semantic boundaries when possible (ends of sentences, paragraphs)

- Test with your actual use cases and adjust based on the results

- Monitor for hallucinations and tweak your approach accordingly
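Putting those starting points into code: given any list of token IDs, a chunk size and an overlap ratio determine the stride between chunk starts. The defaults below (512 tokens, 15% overlap) are picked from the ranges above and are starting points, not tuned values; the token IDs are placeholders for the output of whatever tokenizer you use.

```python
def token_chunks(token_ids: list[int], chunk_size: int = 512, overlap_ratio: float = 0.15) -> list[list[int]]:
    """Split token IDs into chunks of `chunk_size`, each overlapping the previous one."""
    if chunk_size < 1 or not 0 <= overlap_ratio < 1:
        raise ValueError("need chunk_size >= 1 and 0 <= overlap_ratio < 1")
    overlap = int(chunk_size * overlap_ratio)  # 512 * 0.15 -> 76 tokens
    stride = chunk_size - overlap              # 436 tokens between chunk starts
    chunks = []
    for start in range(0, len(token_ids), stride):
        chunks.append(token_ids[start:start + chunk_size])
        if start + chunk_size >= len(token_ids):  # this chunk already reaches the end
            break
    return chunks

tokens = list(range(2_000))  # hypothetical token IDs for a 2,000-token document
chunks = token_chunks(tokens)
print(len(chunks), [chunk[0] for chunk in chunks])  # 5 [0, 436, 872, 1308, 1744]
```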

For Tokenization:

- Use established methods (BPE, WordPiece, SentencePiece) rather than building your own

- Consider your domain, since medical or legal text might need specialized approaches

- Monitor out-of-vocabulary rates in production

- Balance compression (fewer tokens) against meaning preservation
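Measuring an out-of-vocabulary rate is straightforward once you fix a vocabulary. This sketch assumes a word-level vocabulary and a tiny hypothetical medical example; with subword tokenizers, true out-of-vocabulary tokens are rare, so the analogous signal there is how often domain words get split into many pieces.

```python
import re

def oov_rate(texts: list[str], vocab: set[str]) -> float:
    """Fraction of lowercase word occurrences that are not in the vocabulary."""
    words = [w for text in texts for w in re.findall(r"[a-z]+", text.lower())]
    if not words:
        return 0.0
    return sum(w not in vocab for w in words) / len(words)

# Hypothetical general-purpose vocabulary and hypothetical clinical notes.
vocab = {"the", "patient", "has", "a", "fever", "and", "cough"}
notes = ["The patient has a fever.", "The patient has tachycardia and dyspnea."]
print(f"{oov_rate(notes, vocab):.1%}")  # 18.2%
```

A rate that climbs over time in production is a sign that incoming text has drifted toward vocabulary the tokenizer handles poorly.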

Summary

Tokenization and chunking aren’t competing techniques; they’re complementary tools that solve different problems. Tokenization makes text digestible for AI models, while chunking preserves meaning for practical applications.

As AI systems become more sophisticated, both techniques continue to evolve. Context windows are getting larger, vocabularies are becoming more efficient, and chunking strategies are getting smarter about preserving semantic meaning.

The key is understanding what you’re trying to accomplish. Building a chatbot? Focus on chunking strategies that preserve conversational context. Training a model? Optimize your tokenization for efficiency and coverage. Building an enterprise search system? You’ll need both: smart tokenization for efficiency and intelligent chunking for accuracy.