Aether 1 started as an internal experiment at OFF+BRAND: could we craft a product-launch website so immersive that visitors would actually feel the sound?

The earbuds themselves are fictional, but every pixel of the experience is real: an end-to-end sandbox where our brand, 3D, and engineering teams pushed WebGL, AI-assisted tooling, and narrative design far beyond a typical product page.

This technical case study is the living playbook of that exploration. Inside you'll find:

- 3D creation workflow: how we sculpted, animated, and optimised the earphones and their charging case.

- Interactive WebGL architecture: the particle flow fields, infinite scroll, audio-reactive shaders, and custom controllers that make the site feel alive.

- Performance tricks: GPU-friendly materials, fake depth of field, selective bloom, and other techniques that kept the project running at 60 FPS on mobile hardware.

- Tool stack & takeaways: what worked, what didn't, and why every lesson here can translate to your own projects.

Whether you're a developer, designer, or producer, the next sections unpack the decisions, experiments, and hard-won optimizations that helped us prove that “sound without boundaries” can exist on the web.

1. 3D Creation Workflow

By Celia Lopez

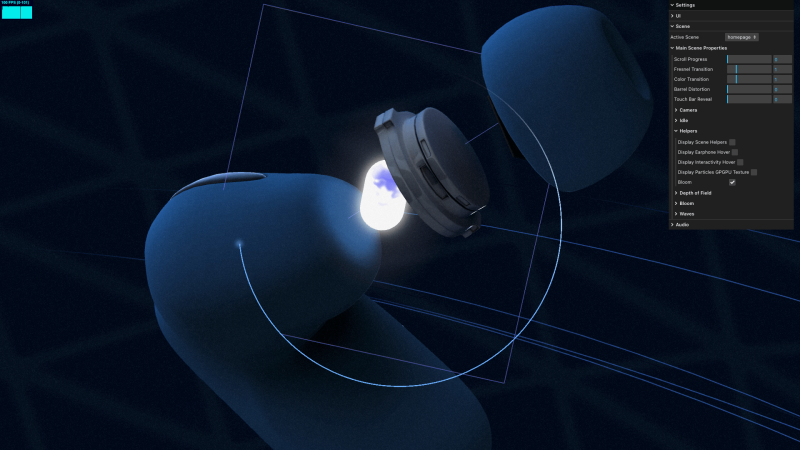

3D creation of the headphone and case

For the headphone shape, we needed to create one from scratch. To quickly sketch out the ideas we had in mind, we used Midjourney. Thanks to references from the internet and the help of AI, we agreed on an artistic direction.

Size reference and headphone creation

To make sure the proportions matched a real-life reference, we used Apple headphones and iterated until we found something interesting. We used Figma to present all the iterations to the team, exporting three images (front, side, and back) each time to help them better visualize the object.

Same for the case.

Storyboard

For the storyboard, we first sketched our ideas and tried to match each specific scene with a 3D visualization.

We iterated for a while before finalizing the still frames for each part. Some parts were too complicated to represent in 3D, so we adjusted the workflow accordingly.

Motion

To make sure everyone agreed on the flow, look, and feel, we created a full-motion version of it.

Unwrapping and renaming

To prepare the scene for a developer, we needed to spend some time unwrapping the UVs, cleaning the file, and renaming the elements. We used C4D exclusively for unwrapping since the shapes weren't too complex. It's also very important to rename all parts and organize the file so the developer can easily recognize which object is which. (In the example below, we show the technique, not the full workflow or a perfect unwrap.)

Baked fluid flow

Almost all of the animations were baked from C4D to Blender and exported as .glb files.

Timing

We decided to start with an infinite scroll and a looped experience. When the user releases the scroll, seven anchors subtly and automatically guide the progression. To make it easier for the developer to divide the baked animation, we used a fixed timing for each step: 200 keyframes between each pair of anchors.
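A fixed spacing of keyframes makes the anchor arithmetic trivial on the code side. The sketch below assumes the seven anchors sit at the start of a single linear clip and every 200 frames after that (so the clip spans 6 × 200 = 1200 frames); the function names are hypothetical and the looping between the last and first anchor is left out.

```typescript
// Assumptions for this sketch: 7 anchors, 200 keyframes between neighbouring
// anchors, anchor 0 at frame 0, one linear clip of 6 * 200 = 1200 frames.
const ANCHOR_COUNT = 7;
const FRAMES_PER_STEP = 200;
const TOTAL_FRAMES = (ANCHOR_COUNT - 1) * FRAMES_PER_STEP;

function clamp01(x: number): number {
  return Math.min(Math.max(x, 0), 1);
}

/** Frame at which anchor `index` sits in the baked animation. */
function anchorFrame(index: number): number {
  if (!Number.isInteger(index) || index < 0 || index >= ANCHOR_COUNT) {
    throw new RangeError(`anchor index out of range: ${index}`);
  }
  return index * FRAMES_PER_STEP;
}

/** Index of the anchor closest to a normalised scroll progress in [0, 1]. */
function nearestAnchor(progress: number): number {
  return Math.round(clamp01(progress) * (ANCHOR_COUNT - 1));
}

/** Progress value to ease towards once the user releases the scroll. */
function snapProgress(progress: number): number {
  return anchorFrame(nearestAnchor(progress)) / TOTAL_FRAMES;
}
```

For example, releasing the scroll at 40% of the page lands on anchor 2 (frame 400), and the scroll would then be eased to `snapProgress(0.4)`, one third of the way through the clip.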

AO baking

Because the headphones were rotating, we couldn't bake the lighting. We only baked the Ambient Occlusion shadows to enhance realism. For that, after unwrapping the objects, we combined all the different parts of the headphones into a single object, applied a single texture with the Ambient Occlusion, and baked it in Redshift. Same for the case.

Baked normal map

For the Play-Stade touchpad only, we needed a normal map, so we exported it. However, since the AO was already baked, the UVs had to remain the same.

Camera path and target

To ensure a smooth flow throughout the web experience, it was crucial to use a single camera. However, since we have different focal points, we needed two separate circular paths with different centers and sizes, along with a null object to serve as a target reference throughout the flow.
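In the project these paths were authored in C4D and baked, so no code computed them at runtime. The sketch below only illustrates the underlying geometry under our own assumptions: two horizontal circles with different centers and radii, a hypothetical `blend` factor for moving the single camera from one orbit to the other, and a look direction that always aims at the target null.

```typescript
type Vec3 = { x: number; y: number; z: number };

// Hypothetical description of one circular camera path in the XZ plane.
interface CirclePath {
  center: Vec3;
  radius: number;
}

/** Point on a horizontal circle at angle `theta` (radians). */
function pointOnCircle(path: CirclePath, theta: number): Vec3 {
  return {
    x: path.center.x + path.radius * Math.cos(theta),
    y: path.center.y,
    z: path.center.z + path.radius * Math.sin(theta),
  };
}

function lerp(a: number, b: number, t: number): number {
  return a + (b - a) * t;
}

/** Blend between the two orbits so one camera can travel from path A to path B. */
function cameraPosition(a: CirclePath, b: CirclePath, theta: number, blend: number): Vec3 {
  const pa = pointOnCircle(a, theta);
  const pb = pointOnCircle(b, theta);
  return { x: lerp(pa.x, pb.x, blend), y: lerp(pa.y, pb.y, blend), z: lerp(pa.z, pb.z, blend) };
}

/** Unit direction from the camera to the target null (what lookAt would aim at). */
function lookDirection(camera: Vec3, target: Vec3): Vec3 {
  const dx = target.x - camera.x;
  const dy = target.y - camera.y;
  const dz = target.z - camera.z;
  const len = Math.hypot(dx, dy, dz) || 1; // avoid dividing by zero
  return { x: dx / len, y: dy / len, z: dz / len };
}
```

Animating the target null separately from the camera position is what lets one camera shift its focal point without a cut.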

2. WebGL Features and Interactive Architecture

By Adrian Gubrica

GPGPU particles

Particles are a great way to add an extra layer of detail to 3D scenes, as was the case with Aether 1. To complement the calming motion of the audio waves, we used a flow-field simulation, a technique known for producing believable and natural movement in particle systems. With the right settings, the resulting motion can also be incredibly relaxing to watch.

To calculate the flow fields, we used noise algorithms, specifically Simplex4D. Since these can be highly performance-intensive on the CPU, a GPGPU technique (essentially the WebGL equivalent of a compute shader) was implemented to run the simulation efficiently on the GPU. The results were stored and updated across two textures, enabling smooth, high-performance motion.
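The "two textures" are the classic ping-pong pattern: each frame reads the previous state from one buffer, writes the new state into the other, then swaps them. The CPU sketch below mirrors that structure with two `Float32Array`s standing in for float render targets. The velocity field is a cheap trigonometric placeholder and is explicitly *not* Simplex 4D noise; `PingPong`, `flow`, and `advect` are hypothetical names.

```typescript
// CPU stand-in for the GPGPU "ping-pong" pattern: read from one buffer, write
// to the other, then swap. On the GPU each buffer would be a float render
// target and `step` a full-screen fragment-shader pass.
class PingPong {
  readonly count: number;
  private read: Float32Array;
  private write: Float32Array;

  constructor(count: number) {
    this.count = count;
    this.read = new Float32Array(count * 3); // xyz position per particle
    this.write = new Float32Array(count * 3);
  }

  /** Latest state (what the particle material would sample). */
  get current(): Float32Array {
    return this.read;
  }

  step(update: (src: Float32Array, dst: Float32Array, i: number) => void): void {
    for (let i = 0; i < this.count; i++) update(this.read, this.write, i);
    const tmp = this.read; // swap so the next step reads what was just written
    this.read = this.write;
    this.write = tmp;
  }
}

// Placeholder velocity field. The project used Simplex 4D noise (xyz + time);
// this trigonometric field is NOT simplex noise, it only fills the same role.
function flow(x: number, y: number, z: number, t: number): [number, number, number] {
  return [Math.sin(y + t), Math.sin(z + t), Math.sin(x + t)];
}

/** Move every particle along the field for one time step (explicit Euler). */
function advect(sim: PingPong, t: number, dt: number): void {
  sim.step((src, dst, i) => {
    const o = i * 3;
    const [vx, vy, vz] = flow(src[o], src[o + 1], src[o + 2], t);
    dst[o] = src[o] + vx * dt;
    dst[o + 1] = src[o + 1] + vy * dt;
    dst[o + 2] = src[o + 2] + vz * dt;
  });
}
```

Reading and writing the same texture in one pass is not allowed in WebGL, which is why the swap is needed rather than an optional optimisation.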

Smooth scene transitions

To create a seamless transition between scenes, I developed a custom controller to manage when each scene should or shouldn't render. I also implemented a manual way of controlling their scroll state, which allows me, for example, to display the last position of a scene without physically scrolling there. By combining this with a custom transition function that mainly uses GSAP to animate values, I was able to create both forward and backward animations to the target scene.

It is important to note that all scenes and transitions are displayed inside a “post-processing scene,” which consists of an orthographic camera and a full-screen plane. In its fragment shader, I merge all the renders together.

This transition technique became especially tricky at the end of each scroll in the main scene, where the transition has to create an infinite loop. To achieve this, I created two instances of the main scene (A and B) and swapped between them every time a transition occurred.
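The A/B swap can be modelled as a small state machine: while a loop transition runs, both instances render and the merge shader blends them; once the blend reaches 1, the incoming instance becomes the live one and the other stops rendering. The sketch below is our own minimal version under those assumptions (`SceneSlot`, `LoopController`, and the single `transition` uniform are hypothetical), with the GSAP tween replaced by direct calls to `setTransition`.

```typescript
// Hypothetical handle for one scene instance; in the project each would be a
// Three.js scene rendered to its own target and merged in the post pass.
interface SceneSlot {
  name: string;
  scroll: number; // manually controlled scroll state, 0..1
  active: boolean; // whether it should render this frame
}

class LoopController {
  private front: SceneSlot = { name: "A", scroll: 0, active: true };
  private back: SceneSlot = { name: "B", scroll: 0, active: false };
  /** Blend factor read by the merge shader: 0 shows `front`, 1 shows `back`. */
  transition = 0;

  get visible(): SceneSlot {
    return this.front;
  }

  /** Slots that need rendering this frame. */
  renderList(): SceneSlot[] {
    return [this.front, this.back].filter((slot) => slot.active);
  }

  /** Park the idle instance where the loop should land and start rendering it. */
  beginLoop(targetScroll: number): void {
    this.back.scroll = targetScroll;
    this.back.active = true;
  }

  /** Set the blend; a GSAP tween would normally animate this value. */
  setTransition(t: number): void {
    this.transition = Math.min(Math.max(t, 0), 1);
    if (this.transition === 1) this.finish();
  }

  private finish(): void {
    const previous = this.front;
    this.front = this.back; // the incoming instance becomes the live scene
    this.back = previous;
    this.back.active = false; // stop paying for the instance we left
    this.transition = 0;
  }
}
```

Because the outgoing instance keeps its own scroll state until the blend finishes, the end of the scroll and its beginning can be on screen at the same time, which a single scene instance cannot do.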

Custom scroll controller for infinite scrolling

As mentioned earlier, the main scene loops infinitely at both the start and the end of the scroll, triggering a transition back to the beginning or end of the scene. This behavior is enhanced with some resistance during backward movement and other subtle effects. Achieving it required careful manual tweaking of the Lenis library.

My initial idea was to use Lenis' `infinite: true` option, which at first seemed like a quick solution, especially for returning to the starting scroll position. However, this approach required manually listening to the scroll velocity and predicting whether the scroll would cross a certain threshold, so that it could be stopped at the right moment to trigger the transition. While possible, it quickly proved unreliable, often leading to unpredictable behavior such as broken scroll states, unintended transitions, or a confused browser scroll history.

Because of these issues, I decided to remove the `infinite: true` option and handle the scroll transitions manually. By combining `Lenis.scrollTo()`, `Lenis.stop()`, and `Lenis.start()`, I was able to recreate the same looping effect at the end of each scroll with greater control and reliability. An added benefit was being able to keep Lenis's default easing at the start and end of the scroll, which contributed to a smooth, polished feel.
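A minimal sketch of that manual loop follows. It types the scroller as a small interface whose members mirror what Lenis exposes (`scroll`, `limit`, `scrollTo` with an `immediate` option, `stop`, `start`); this typing, the `edge` threshold, and the `play` transition hook are assumptions for illustration rather than the project's code. Direction is derived from the previous scroll value so that landing on the opposite end after a jump does not immediately trigger another loop.

```typescript
// Members mirrored from Lenis that this sketch relies on; the interface itself
// is our assumption, not the library's full typing.
interface LoopScroller {
  scroll: number;
  limit: number;
  scrollTo(target: number, options?: { immediate?: boolean }): void;
  stop(): void;
  start(): void;
}

// Hypothetical hook: plays the visual transition and resolves when it ends.
type PlayTransition = (direction: "forward" | "backward") => Promise<void>;

function createLoopGuard(scroller: LoopScroller, play: PlayTransition, edge = 1) {
  let busy = false;
  let last = scroller.scroll;

  // Call from the scroller's scroll event.
  return async function onScroll(): Promise<void> {
    const current = scroller.scroll;
    const delta = current - last;
    last = current;
    if (busy || delta === 0) return;

    const forward = delta > 0 && current >= scroller.limit - edge;
    const backward = delta < 0 && current <= edge;
    if (!forward && !backward) return;

    busy = true;
    scroller.stop(); // freeze user input while the transition plays
    try {
      await play(forward ? "forward" : "backward");
    } finally {
      scroller.start(); // re-enable before jumping so the jump is not ignored
      // Jump to the opposite end without easing so the loop is invisible.
      scroller.scrollTo(forward ? 0 : scroller.limit, { immediate: true });
      last = scroller.scroll;
      busy = false;
    }
  };
}
```

In the browser, the returned function would be registered with something like `lenis.on("scroll", guard)`; because only the jump uses `immediate`, ordinary scrolling keeps Lenis's default easing.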

Cursor with fluid simulation pass

Fluid simulations triggered by mouse or touch movement have become a major trend on immersive websites in recent years. Beyond being fashionable, they consistently enhance the visual appeal and add a satisfying layer of interactivity to the user experience.

In my implementation, I used the fluid simulation as a blue overlay that follows the pointer. It also served as a mask for the Fresnel pass (explained in more detail below) and drove a dynamic displacement and RGB-shift effect in the final render.

Because fluid simulations can be performance-intensive, requiring multiple passes to calculate realistic behavior, I downscaled it to just 7.5% of the screen resolution. This still produced a visually compelling effect while keeping overall performance smooth.
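The saving is easy to quantify. The sketch below assumes the 7.5% applies to each axis (the text does not say whether it means per axis or total pixels) and that the device pixel ratio is folded in; `fluidSimSize` is a hypothetical helper. Under that assumption a 1920×1080 canvas yields a 144×81 simulation grid, roughly 0.56% of the pixels, for every one of the simulation's passes.

```typescript
// Assumption: 7.5% of the resolution along each axis.
const FLUID_SCALE = 0.075;

/** Size of the render targets used by the fluid simulation passes. */
function fluidSimSize(
  width: number,
  height: number,
  dpr = 1,
  scale = FLUID_SCALE,
): { width: number; height: number } {
  return {
    width: Math.max(1, Math.round(width * dpr * scale)), // never 0 px
    height: Math.max(1, Math.round(height * dpr * scale)),
  };
}
```

Because the simulation is sampled with linear filtering when it is used as an overlay, mask, and displacement source, the low resolution mostly reads as extra softness, which suits a fluid effect.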

Fresnel pass on the earphones

In the first half of the main scene's scroll progression, users can see the inner parts of the earphones when hovering over them, which adds a nice interactive touch to the scene. I achieved this effect by using the fluid simulation pass as a mask on the earphones' material.

However, implementing this wasn't straightforward at first, since the earphones and the fluid simulation use different coordinate systems. My initial idea was to create a separate render pass for the earphones and apply the fluid mask in that specific pass. But this approach would have been costly and would have added unnecessary complexity to the post-processing pipeline.

After some experimentation, I realized I could use the camera's view position as a kind of screen-space UV projection onto the material. This let me sample the fluid texture accurately, directly in the earphones' material: exactly what I needed to make the effect work without extra rendering overhead.
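The projection behind this trick is the standard perspective divide: multiply the view-space position by the projection, divide by w, and remap from [-1, 1] to [0, 1]. In a shader that is a couple of lines; the TypeScript version below spells out the same math for a symmetric perspective camera so it can be checked. It assumes the Three.js convention of the camera looking down -Z in view space; `viewToScreenUV` is a hypothetical name.

```typescript
type Vec3 = { x: number; y: number; z: number };

/**
 * Project a view-space point through a symmetric perspective camera to
 * [0, 1] screen UVs, i.e. the coordinates at which the full-screen fluid
 * texture should be sampled. Returns null for points behind the camera.
 */
function viewToScreenUV(p: Vec3, fovYDeg: number, aspect: number): [number, number] | null {
  const f = 1 / Math.tan((fovYDeg * Math.PI) / 360); // cot(fovY / 2)
  const w = -p.z; // view space looks down -Z, so depth is -z
  if (w <= 0) return null;
  const ndcX = ((f / aspect) * p.x) / w; // perspective divide
  const ndcY = (f * p.y) / w;
  return [ndcX * 0.5 + 0.5, ndcY * 0.5 + 0.5]; // NDC [-1, 1] -> UV [0, 1]
}
```

Since the fluid simulation is itself rendered in screen space, these UVs line up with what the user sees under the cursor, which is what makes the mask follow the pointer across the earphones.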

Audio reactivity

Since the project is a presentation of earphones, some scene parameters needed to become audio-reactive. I used one of the background audio's frequency channels as the input to drive various effects: the one that produced the most noticeable “jumps,” as the rest of the track had a very steady tone. This included modifying the pace and shape of the wave animations, influencing the strength of the particles' flow field, and shaping the touchpad's visualizer.
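The text does not say how the channel was chosen; it may well have been picked by ear. As one possible automated approach, the sketch below scores each analyser bin by how much its level changes from frame to frame and then turns the chosen bin into a smoothed 0..1 drive value. It assumes snapshots in the format filled by the Web Audio `AnalyserNode.getByteFrequencyData()` (one byte per bin); `jumpiestBin` and `driveValue` are hypothetical.

```typescript
/**
 * Hypothetical selection rule: the bin whose level changes most between
 * consecutive frames. `frames` are snapshots like those written by
 * AnalyserNode.getByteFrequencyData().
 */
function jumpiestBin(frames: Uint8Array[]): number {
  if (frames.length < 2) throw new Error("need at least two frames");
  const bins = frames[0].length;
  let best = 0;
  let bestScore = -1;
  for (let b = 0; b < bins; b++) {
    let score = 0;
    for (let f = 1; f < frames.length; f++) {
      score += Math.abs(frames[f][b] - frames[f - 1][b]); // frame-to-frame jump
    }
    if (score > bestScore) {
      bestScore = score;
      best = b;
    }
  }
  return best;
}

/** Normalised 0..1 drive value for one frame, smoothed with the previous value. */
function driveValue(frame: Uint8Array, bin: number, previous: number, smoothing = 0.8): number {
  const raw = frame[bin] / 255;
  return previous * smoothing + raw * (1 - smoothing);
}
```

A single scalar like this can then be fed to several consumers (wave speed, flow-field strength, touchpad visualizer), each with its own gain.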

The background audio itself was also processed using the Web Audio API, specifically with a low-pass filter. This filter was triggered when the user hovered over the earphones in the first section of the main scene, as well as during the scene transitions at the beginning and end. The low-pass effect amplified the impact of the animations, creating a subtle sensation of time slowing down.
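A low-pass "time slows down" effect usually comes down to gliding the filter's cutoff frequency rather than switching it, to avoid clicks. The sketch below uses `AudioParam.setTargetAtTime`, a real Web Audio method, through a one-method interface so it can run outside a browser. The cutoff values and time constant are hypothetical, not taken from the project.

```typescript
// The one AudioParam method this sketch needs. In the browser, pass the
// `frequency` param of a BiquadFilterNode whose type is "lowpass".
interface FrequencyParam {
  setTargetAtTime(target: number, startTime: number, timeConstant: number): unknown;
}

// Hypothetical cutoffs: "open" passes the audible range, "muffled" is the
// slowed-down state used on hover and during transitions.
const OPEN_HZ = 20000;
const MUFFLED_HZ = 600;

/** Glide the low-pass cutoff towards the open or muffled value. */
function setMuffled(frequency: FrequencyParam, muffled: boolean, now: number, timeConstant = 0.15): void {
  // Exponential approach starting at `now`; a later call simply takes over
  // from wherever the cutoff currently is.
  frequency.setTargetAtTime(muffled ? MUFFLED_HZ : OPEN_HZ, now, timeConstant);
}
```

In a browser this would be wired as `const filter = ctx.createBiquadFilter(); filter.type = "lowpass"; source.connect(filter).connect(ctx.destination);`, followed by `setMuffled(filter.frequency, true, ctx.currentTime)` on hover and `false` on leave.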

Animation and empties

Most of the animations were baked directly into the .glb file and controlled by the scroll progress using Three.js's AnimationMixer. This included the camera movement as well as the earphone animations.
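Scrubbing a baked clip with scroll usually means setting the mixer's time directly instead of advancing it with a frame delta. The sketch below depends only on `AnimationMixer.setTime` (a real Three.js method) through a tiny interface so it runs without Three.js. The end epsilon reflects our assumption that the actions use the default repeating loop mode, in which a time of exactly `duration` can wrap back to the first frame; `scrubToProgress` is a hypothetical name.

```typescript
// Only AnimationMixer.setTime is needed, so a minimal interface keeps the
// sketch runnable without Three.js.
interface MixerLike {
  setTime(timeInSeconds: number): unknown;
}

// Stay just short of the clip end so a repeating action does not wrap to 0.
const END_EPSILON = 1e-4;

/** Drive baked clips from scroll progress (0..1) instead of wall-clock time. */
function scrubToProgress(mixer: MixerLike, progress: number, clipDuration: number): number {
  const clamped = Math.min(Math.max(progress, 0), 1);
  const t = Math.min(clamped * clipDuration, Math.max(clipDuration - END_EPSILON, 0));
  mixer.setTime(t);
  return t;
}
```

With Three.js, each clip from the loaded glTF would be started once with `mixer.clipAction(clip).play()`, and `scrubToProgress` would replace the usual `mixer.update(delta)` call in the render loop.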

This workflow proved highly effective when collaborating with another 3D artist, as it gave them control over several aspects of the experience, such as timing, motion, and transitions, while allowing me to focus solely on the real-time interactions and logic.

Speaking of real-time interactions, I extended the scene with several empties whose animated position and scale values act as drivers for various interactive events, such as triggering interactive points or adjusting input strength during scroll. This approach made it easy to fine-tune these events directly in Blender's timeline and align them precisely with the other baked animations.
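Once an empty's transform is animated in the baked file, runtime code only has to read it every frame. Continuous effects can use the value directly (for example, input strength from the empty's position), while discrete events need edge detection. The helper below is a hypothetical sketch of the latter: it fires callbacks when a driver value such as an empty's `scale.x` crosses a threshold, in either direction.

```typescript
// Hypothetical helper: turns a continuously animated driver value (e.g. the
// scale.x of an empty, read from the baked animation each frame) into
// discrete enter/exit events using a threshold.
class DriverTrigger {
  private wasOn = false;
  private readonly threshold: number;
  private readonly onEnter: () => void;
  private readonly onExit: () => void;

  constructor(threshold: number, onEnter: () => void, onExit: () => void) {
    this.threshold = threshold;
    this.onEnter = onEnter;
    this.onExit = onExit;
  }

  /** Call once per frame with the driver's current value. */
  update(value: number): void {
    const on = value >= this.threshold;
    if (on && !this.wasOn) this.onEnter(); // crossed upwards
    else if (!on && this.wasOn) this.onExit(); // crossed downwards
    this.wasOn = on;
  }
}
```

Because the events fire on crossings rather than on absolute scroll positions, they work the same when scrolling backwards, and retiming them is just a matter of moving keyframes in Blender.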

3. Optimization Techniques

Visual expectations were set very high for this project, making it clear from the start that performance optimization would be a major challenge. Because of this, I closely monitored performance metrics throughout development, constantly looking for opportunities to save resources wherever possible. This often led to unexpected yet effective solutions to problems that initially seemed too demanding or impractical for our goals. Some of these optimizations have already been mentioned, such as using GPGPU techniques for the particle simulation and significantly reducing the resolution of the cursor's fluid simulation. However, several other key optimizations played a crucial role in maintaining solid performance:

Artificial depth of field

One of them was using depth of field during the close-up view of the headphones. Depth of field is usually implemented as a post-processing layer that uses some kind of convolution to simulate progressive blurring of the rendered scene. From the start, I considered it a nice-to-have in case we had some FPS to spare, but not a realistic option.

However, after implementing the particle simulation, which used a smoothstep function in the particles' fragment shader to draw the blue circle, I wondered whether simply adjusting its values might be enough to make the particles look blurred. After a few small tweaks, they did.

The only problem left was that the blur was not progressive like in a real camera, meaning the particles did not get blurrier relative to the camera's focus point. So I tried using the camera's view position to get a kind of depth value, which surprisingly did the job well.

I applied the same smoothstep technique to the rotating tube in the background, but without the progressive effect, since it stayed at an almost constant distance most of the time.

Voilà: depth of field almost for free (not perfect, but it does the job well).
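The trick reduces to widening the smoothstep edge of each round particle as it moves away from the focus distance. Below, the per-fragment shader math is written out in TypeScript so it can be checked: `distFromCenter` plays the role of `length(gl_PointCoord - 0.5)` and `viewDepth` of the particle's view-space distance (e.g. `-mvPosition.z`). The softness limits, `blurPerUnit`, and `particleAlpha` are assumptions for illustration.

```typescript
/** GLSL-style smoothstep: 0 below edge0, 1 above edge1, cubic in between. */
function smoothstep(edge0: number, edge1: number, x: number): number {
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
  return t * t * (3 - 2 * t);
}

const MIN_SOFTNESS = 0.01; // keeps a thin anti-aliased edge when in focus
const MAX_SOFTNESS = 0.5; // the whole sprite becomes a radial gradient

/**
 * Alpha of one round particle fragment. The circle has radius 0.5 in sprite
 * coordinates and its soft edge grows inwards as the particle leaves focus.
 */
function particleAlpha(distFromCenter: number, viewDepth: number, focusDepth: number, blurPerUnit = 0.15): number {
  const softness = Math.min(
    MIN_SOFTNESS + Math.abs(viewDepth - focusDepth) * blurPerUnit,
    MAX_SOFTNESS,
  );
  return 1 - smoothstep(0.5 - softness, 0.5, distFromCenter);
}
```

For the background tube described above, the same formula would simply use a constant softness instead of the depth-dependent one.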

Artificial bloom

Bloom was also part of the post-processing stack, and it is typically a costly effect because of the extra render pass it requires. It becomes even more demanding with selective bloom, which I needed to make the core of the earphones glow: the render pass is effectively doubled to isolate and blend only specific elements.

To avoid this performance hit, I replaced the bloom effect with a simple plane using a pre-generated bloom texture that matched the shape of the earphone core. The plane was set to always face the camera (a billboard technique), creating the illusion of bloom without the computational overhead.

Surprisingly, this approach worked very well. With a bit of fine-tuning, especially of the depth write settings, I was even able to avoid visible overlaps with nearby geometry and keep a clean, convincing look.
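A billboard is just a quad built from the camera's right and up axes, so it always faces the viewer. The sketch below computes the quad's world-space corners to make that explicit; `billboardCorners` is hypothetical. In Three.js the same orientation is commonly achieved by copying the camera's quaternion onto the plane each frame (`plane.quaternion.copy(camera.quaternion)`), and the depth tweak mentioned above would plausibly be `depthWrite: false` on the plane's material; both details are our assumptions about the setup.

```typescript
type Vec3 = { x: number; y: number; z: number };

/**
 * World-space corners of a square, camera-facing quad. `right` and `up` are
 * the camera's unit axes in world space (e.g. the first two columns of its
 * world matrix).
 */
function billboardCorners(center: Vec3, right: Vec3, up: Vec3, size: number): Vec3[] {
  const h = size / 2;
  const corner = (sx: number, sy: number): Vec3 => ({
    x: center.x + (right.x * sx + up.x * sy) * h,
    y: center.y + (right.y * sx + up.y * sy) * h,
    z: center.z + (right.z * sx + up.z * sy) * h,
  });
  // Counter-clockwise as seen from the camera: BL, BR, TR, TL.
  return [corner(-1, -1), corner(1, -1), corner(1, 1), corner(-1, 1)];
}
```

Because the glow texture is pre-rendered, its cost is a single textured quad per earphone core, independent of screen resolution.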

Custom performant glass material

A major part of the earphones' visual appeal came from the glossy surface on the back. However, achieving realistic reflections in WebGL is always challenging and often expensive, especially with double-sided materials.

To tackle this, I used a technique I often rely on: combining a MeshStandardMaterial for the base physical lighting model with a glass matcap texture, injected via the onBeforeCompile callback. This setup struck a good balance between realism and performance.

To enhance the effect further, I added Fresnel lighting on the edges and a slight transparency, which together created a convincing glass-like surface. The final result closely matched the visual concept presented for the project, without the heavy cost of real-time reflections or more complex materials.
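The two ingredients injected into the shader are cheap per-fragment functions of the view-space normal. The sketch below writes them in TypeScript for checking: the classic simplified matcap lookup (view-space normal xy remapped to UVs; Three.js's own MeshMatcapMaterial uses a slightly more elaborate view-corrected variant) and a power-based Fresnel edge term. The exponent and function names are assumptions.

```typescript
type Vec3 = { x: number; y: number; z: number };

function normalize(v: Vec3): Vec3 {
  const len = Math.hypot(v.x, v.y, v.z) || 1;
  return { x: v.x / len, y: v.y / len, z: v.z / len };
}

/** Simplified matcap lookup: view-space normal xy remapped to [0, 1] UVs. */
function matcapUV(viewNormal: Vec3): [number, number] {
  const n = normalize(viewNormal);
  return [n.x * 0.5 + 0.5, n.y * 0.5 + 0.5];
}

/**
 * Edge (Fresnel-style) factor: 0 where the surface faces the viewer, 1 at
 * grazing angles. abs() treats back faces like front faces, which suits a
 * double-sided glass shell.
 */
function fresnel(viewNormal: Vec3, toViewer: Vec3, power = 3): number {
  const n = normalize(viewNormal);
  const v = normalize(toViewer);
  const facing = Math.min(Math.abs(n.x * v.x + n.y * v.y + n.z * v.z), 1);
  return Math.pow(1 - facing, power);
}
```

In the material, the matcap color would be mixed into the standard lighting result and the Fresnel factor used to brighten edges, with the slight transparency applied on top; the exact blend weights are tuning choices.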

Simplified raycasting

Raycasting against high-polygon meshes can be slow and inefficient. To optimise this, I used invisible low-poly proxy meshes for the points of interest, such as the earphone shapes and their interactive areas.

This approach significantly reduced the cost of raycasting while giving me much more flexibility. I could freely adjust the size and position of the raycastable zones without affecting the visual mesh, which let me fine-tune the interactions for the best user experience.
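To show why proxies are so cheap, the sketch below uses the simplest possible proxy, an axis-aligned box, and the standard slab test: a handful of divisions per box, versus one test per triangle on the detailed mesh. The project used low-poly proxy meshes rather than boxes, so this is a simplified stand-in; `rayBoxDistance`, `ProxyZone`, and `pickProxy` are hypothetical names.

```typescript
type Vec3 = { x: number; y: number; z: number };
interface Box {
  min: Vec3;
  max: Vec3;
}
interface ProxyZone {
  name: string;
  box: Box;
}

/** Distance along the ray to the box, or null on a miss (slab method). */
function rayBoxDistance(origin: Vec3, dir: Vec3, box: Box): number | null {
  let tMin = -Infinity;
  let tMax = Infinity;
  for (const axis of ["x", "y", "z"] as const) {
    const o = origin[axis];
    const d = dir[axis];
    const lo = box.min[axis];
    const hi = box.max[axis];
    if (d === 0) {
      if (o < lo || o > hi) return null; // parallel and outside this slab
      continue;
    }
    let t1 = (lo - o) / d;
    let t2 = (hi - o) / d;
    if (t1 > t2) [t1, t2] = [t2, t1];
    tMin = Math.max(tMin, t1);
    tMax = Math.min(tMax, t2);
    if (tMin > tMax) return null; // slabs do not overlap
  }
  if (tMax < 0) return null; // box is behind the ray
  return tMin >= 0 ? tMin : 0; // 0 when the origin is inside the box
}

/** Name of the nearest proxy hit by the ray, or null. */
function pickProxy(origin: Vec3, dir: Vec3, zones: ProxyZone[]): string | null {
  let best: string | null = null;
  let bestT = Infinity;
  for (const zone of zones) {
    const t = rayBoxDistance(origin, dir, zone.box);
    if (t !== null && t < bestT) {
      bestT = t;
      best = zone.name;
    }
  }
  return best;
}
```

Enlarging a proxy's box makes an interactive area easier to hit without touching the visible geometry, which is the flexibility described above.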

Mobile performance

Thanks to the optimisation techniques mentioned above, the experience maintains a solid 60 FPS, even on older devices like the iPhone SE (2020).

4. Tool Stack

- Three.js: For a project of this scale, Three.js was the clear choice. Its built-in materials, loaders, and utilities made it ideal for building highly interactive WebGL scenes. It was especially useful when setting up the GPGPU particle simulation, which is supported through a dedicated addon in the Three.js ecosystem.

- lil-gui: Commonly used alongside Three.js, it was instrumental in creating a debug environment during development. It also allowed designers to interactively tweak and fine-tune various parameters of the experience without needing to dive into the code.

- GSAP: Most linear animations were handled with GSAP and its timeline system. It proved particularly useful for manually syncing animations to the scroll progress provided by Lenis, offering precise control over timing and transitions.

- Lenis: As mentioned earlier, Lenis provided a smooth and reliable foundation for scroll behavior. Its `syncTouch` parameter helped address DOM shifting on mobile devices, which can be a common problem in scroll-based experiences.

5. Results and Takeaways

Aether 1 successfully demonstrated how brand narrative, advanced WebGL interactions, and rigorous 3D workflows can blend into a single, performant, and emotionally engaging web experience.

By baking key animations, using empties as event triggers, and leaning on tools like Three.js, GSAP, and Lenis, the team was able to iterate quickly without sacrificing polish. Meanwhile, the 3D pipeline (from Midjourney concept sketches through C4D unwrapping to Blender export) kept the visual fidelity aligned with the brand vision.

Most importantly, every technique outlined here is transferable. Whether you are considering audio-reactive visuals, infinite-scroll adventures, or simply trying to squeeze extra frames per second out of a heavy scene, the solutions documented above show that thoughtful planning and a willingness to experiment can push WebGL far beyond typical product-page expectations.

6. Author Contributions

General – Ross Anderson

3D – Celia Lopez

WebGL – Adrian Gubrica

7. Website credits

Art Direction – Ross Anderson

Design – Gilles Tossoukpe

3D – Celia Lopez

WebGL – Adrian Gubrica

AI Integration – Federico Valla

Motion – Jason Kearley

Front End / Webflow – Youness Benammou