A language model is a mathematical model that describes a human language as a probability distribution over its vocabulary. To train a deep learning network to model a language, you need to identify the vocabulary and learn its probability distribution. You can't create the model from nothing. You need a dataset for your model to learn from.

In this article, you will learn about datasets used to train language models and how to source common datasets from public repositories.

Let's get started.
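
To make "a probability distribution over its vocabulary" concrete, here is a minimal sketch of the simplest possible case: a unigram model that estimates each word's probability from its relative frequency in a tiny made-up corpus. Whitespace splitting stands in for a real tokenizer, and the function name `unigram_distribution` is hypothetical. Deep learning language models instead learn the probability of the next token conditioned on the preceding ones, but they still need a dataset to learn it from.

```python
from collections import Counter


def unigram_distribution(corpus):
    """Estimate P(word) for every word in the vocabulary by relative frequency."""
    # Split each line on whitespace (a stand-in for a real tokenizer) and count words
    counts = Counter(word for line in corpus for word in line.split())
    total = sum(counts.values())
    # The vocabulary is the set of distinct words; probabilities sum to 1
    return {word: count / total for word, count in counts.items()}


probs = unigram_distribution(["the cat sat", "the dog sat"])
print(len(probs))    # vocabulary size: 4
print(probs["the"])  # 2 of the 6 word occurrences
```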

Datasets for Training a Language Model

Photo by Dan V. Some rights reserved.

A Good Dataset for Training a Language Model

A good language model should learn correct language usage, free of biases and errors. Unlike programming languages, human languages lack a formal grammar and syntax. They evolve continuously, making it impossible to catalog all language variations. Therefore, the model should be trained from a dataset instead of crafted from rules.

Setting up a dataset for language modeling is challenging. You need a large, diverse dataset that represents the language's nuances. At the same time, it must be high quality, presenting correct language usage. Ideally, the dataset should be manually edited and cleaned to remove noise like typos, grammatical errors, and non-language content such as symbols or HTML tags.

Creating such a dataset from scratch is costly, but several high-quality datasets are freely available. Common datasets include:

- Common Crawl. A massive, continuously updated dataset of over 9.5 petabytes with diverse content. It is used by leading models including GPT-3, Llama, and T5. However, since it is sourced from the web, it contains low-quality and duplicate content, along with biases and offensive material. Rigorous cleaning and filtering are required to make it useful.

- C4 (Colossal Clean Crawled Corpus). A 750GB dataset scraped from the web. Unlike Common Crawl, this dataset is pre-cleaned and filtered, making it easier to use. Still, expect potential biases and errors. The T5 model was trained on this dataset.

- Wikipedia. The English content alone is around 19GB. It is massive yet manageable. It is well-curated, structured, and edited to Wikipedia standards. While it covers a broad range of general knowledge with high factual accuracy, its encyclopedic style and tone are very specific. Training on this dataset alone may cause models to overfit to this style.

- WikiText. A dataset derived from verified Good and Featured Wikipedia articles. Two versions exist: WikiText-2 (2 million words from hundreds of articles) and WikiText-103 (100 million words from 28,000 articles).

- BookCorpus. A dataset of a few GB of long-form, content-rich, high-quality book texts. It is useful for learning coherent storytelling and long-range dependencies. However, it has known copyright issues and social biases.

- The Pile. An 825GB curated dataset from multiple sources, including BookCorpus. It mixes different text genres (books, articles, source code, and academic papers), providing broad topical coverage designed for multidisciplinary reasoning. However, this diversity results in variable quality, duplicate content, and inconsistent writing styles.
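
Several of these datasets are noted for containing duplicate content. As a minimal sketch of one cleaning step, assuming documents arrive as plain strings, the hypothetical `deduplicate()` generator below drops exact duplicates by hashing a lightly normalized copy of each document (lowercased, whitespace collapsed). It only catches copies that are identical after normalization; near-duplicate detection at web scale needs fuzzier techniques such as MinHash.

```python
import hashlib
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def deduplicate(docs):
    """Yield each document the first time its normalized form is seen."""
    seen = set()
    for doc in docs:
        # Store a fixed-size digest instead of the full text to save memory
        key = hashlib.sha1(normalize(doc).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            yield doc  # the original, unnormalized text is kept


docs = ["Hello  world", "hello world", "Something else"]
print(list(deduplicate(docs)))
```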

Getting the Datasets

You can search for these datasets online and download them as compressed files. However, you will need to understand each dataset's format and write custom code to read them.
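
As an illustration of such custom code, here is a minimal sketch for one common layout: a gzip-compressed JSON Lines file in which every line is a JSON object holding the text under a `"text"` key. Both the layout and the key name are assumptions; check the documentation of the dataset you actually download. The function name `read_jsonl_gz` is hypothetical.

```python
import gzip
import json


def read_jsonl_gz(path, text_key="text"):
    """Yield the text field from each record of a gzip-compressed JSON Lines file.

    `path` may be a filename or an open binary file object; gzip.open accepts both.
    """
    # "rt" decompresses and decodes to str so we can iterate line by line
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # tolerate blank lines between records
            record = json.loads(line)
            yield record[text_key]
```

Because it is a generator, it reads one record at a time, so even a large shard never has to fit in memory.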

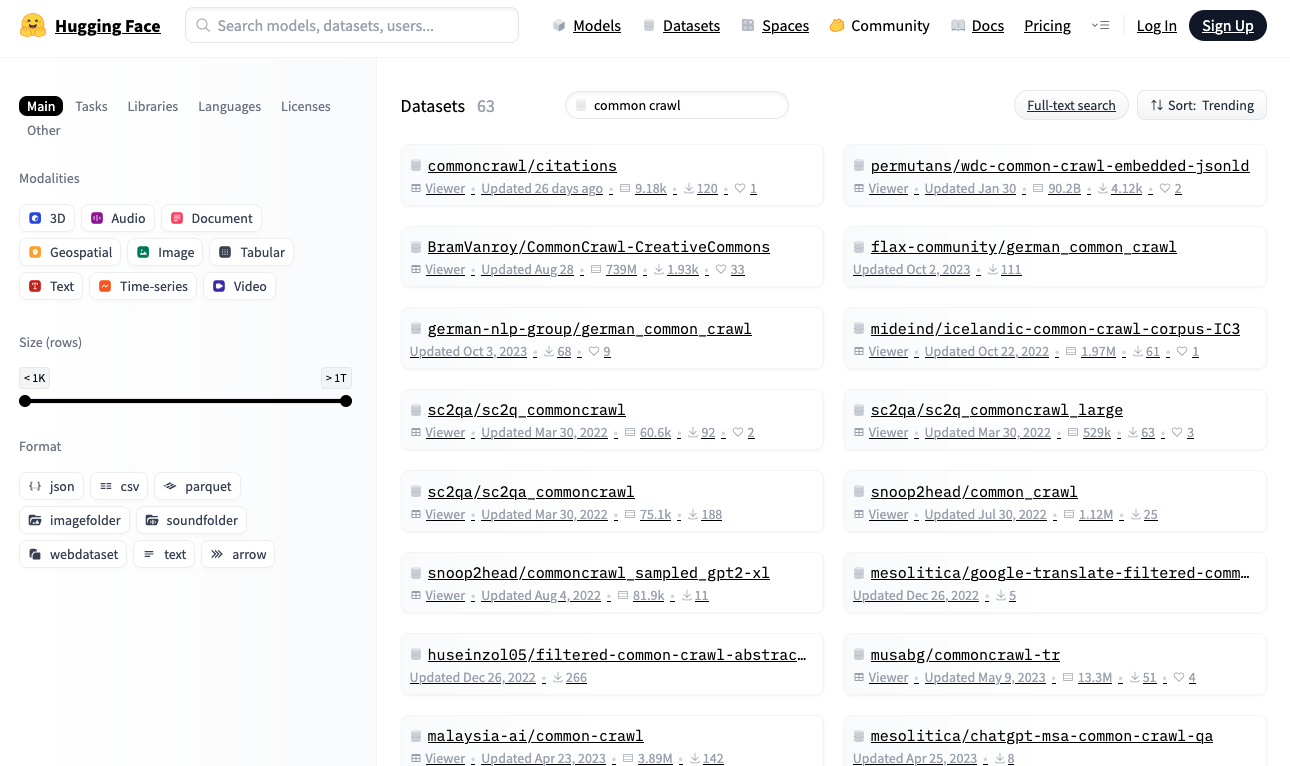

Alternatively, search for datasets in the Hugging Face repository at https://huggingface.co/datasets. This repository comes with a Python library that lets you download and read datasets on demand using a standardized format.

Hugging Face Datasets Repository

Let's download the WikiText-2 dataset from Hugging Face, one of the smallest datasets suitable for building a language model:

```python
import random
from datasets import load_dataset

# Load only the training split; without split=..., load_dataset returns a
# DatasetDict of splits, and len() would count the splits instead of the rows
dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
print(f"Size of the dataset: {len(dataset)}")

# print a few samples, skipping empty lines and section headers
n = 5
while n > 0:
    idx = random.randint(0, len(dataset) - 1)
    text = dataset[idx]["text"].strip()
    if text and not text.startswith("="):
        print(f"{idx}: {text}")
        n -= 1
```

The output may look like this:

```
Size of the dataset: 36718
31776: The Missouri 's headwaters above Three Forks extend much farther upstream than …
29504: Regional variants of the word Allah occur in both pagan and Christian pre @-@ …
19866: Pokiri ( English : Rogue ) is a 2006 Indian Telugu @-@ language action film , …
27397: The first flour mill in Minnesota was built in 1823 at Fort Snelling as a …
10523: The music industry took note of Carey 's success . She won two awards at the …
```

If you haven't already, install the Hugging Face datasets library with `pip install datasets`.

When you run this code for the first time, `load_dataset()` downloads the dataset to your local machine. Make sure you have enough disk space, especially for large datasets. By default, datasets are downloaded to `~/.cache/huggingface/datasets`.

All Hugging Face datasets follow a standard format. The dataset object is an iterable, with each item being a dictionary. For language model training, datasets typically contain text strings. In this dataset, the text is stored under the `"text"` key.

The code above samples a few elements from the dataset. You will see plain text strings of varying lengths.

Post-Processing the Datasets

Before training a language model, you may want to post-process the dataset to clean the data. This includes reformatting text (clipping long strings, replacing multiple spaces with a single space), removing non-language content (HTML tags, symbols), and removing unwanted characters (extra spaces around punctuation). The specific processing depends on the dataset and how you want to present text to the model.

For example, if you are training a small BERT-style model that handles only lowercase letters, you can reduce the vocabulary size and simplify the tokenizer. Here's a generator function that provides post-processed text:
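
As a minimal sketch of these steps, the hypothetical `clean_text()` below strips HTML tags, decodes HTML entities, collapses runs of whitespace, removes spaces before closing punctuation and after opening brackets, and clips long strings. It also rejoins WikiText's `@-@`, `@,@`, and `@.@` markers (the `@-@` tokens visible in the sample output above), which the raw WikiText files use for hyphens, thousands separators, and decimal points. The regular expressions and the 2,000-character limit are illustrative choices, not settings from any particular pipeline.

```python
import html
import re

_TAG_RE = re.compile(r"<[^>]+>")                      # HTML tags such as <p> or </b>
_SPACE_BEFORE_PUNCT_RE = re.compile(r"\s+([.,;:!?)\]])")
_SPACE_AFTER_OPEN_RE = re.compile(r"([(\[])\s+")
_MULTISPACE_RE = re.compile(r"\s+")
# WikiText encodes intra-word hyphens and number separators as spaced @x@ tokens
_WIKITEXT_JOINERS = {" @-@ ": "-", " @,@ ": ",", " @.@ ": "."}


def clean_text(text, max_chars=2000):
    """Return a normalized, clipped copy of one line of raw text."""
    text = _TAG_RE.sub(" ", text)    # remove markup, leaving a space in its place
    text = html.unescape(text)       # turn entities like &amp; into characters
    for marker, char in _WIKITEXT_JOINERS.items():
        text = text.replace(marker, char)
    text = _MULTISPACE_RE.sub(" ", text).strip()     # collapse whitespace
    text = _SPACE_BEFORE_PUNCT_RE.sub(r"\1", text)   # "film ," -> "film,"
    text = _SPACE_AFTER_OPEN_RE.sub(r"\1", text)     # "( English" -> "(English"
    return text[:max_chars]                          # clip overly long strings


raw = "<p>Pokiri ( English : Rogue ) is a 2006 Indian Telugu @-@ language action film , </p>"
print(clean_text(raw))
```

Whether to apply every step is a judgment call: rejoining hyphens suits a model meant for ordinary prose, but you might keep the markers if you want to match WikiText's own tokenization.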

```python
from datasets import load_dataset

def wikitext2_dataset():
    # Iterate over the training split; iterating a DatasetDict would yield split names
    dataset = load_dataset("wikitext", "wikitext-2-raw-v1", split="train")
    for item in dataset:
        text = item["text"].strip()
        if not text or text.startswith("="):
            continue  # skip the empty lines or header lines
        yield text.lower()  # generate lowercase version of the text
```
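
To see why lowercasing shrinks the vocabulary, the sketch below counts distinct tokens in three made-up sentences with and without lowercasing. Splitting on whitespace is a simplifying assumption standing in for a real tokenizer, and `vocab_size()` is a hypothetical helper.

```python
def vocab_size(lines, lowercase=False):
    """Count distinct whitespace-separated tokens, optionally after lowercasing."""
    vocab = set()
    for line in lines:
        if lowercase:
            line = line.lower()
        vocab.update(line.split())
    return len(vocab)


sample = ["The cat sat", "the Cat ran", "THE dog sat"]
print(vocab_size(sample))                  # 8: The, the, THE, cat, Cat, ... are all distinct
print(vocab_size(sample, lowercase=True))  # 5: the, cat, sat, ran, dog
```

The saving is paid for with lost information: the model can no longer distinguish, say, a proper noun from a common word that differs only in capitalization.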

Creating a good post-processing function is an art. It should improve the dataset's signal-to-noise ratio to help the model learn better, while preserving the model's ability to handle unexpected input formats it may encounter after training.
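
One way to raise the signal-to-noise ratio is a line-level quality filter. The hypothetical `keep_line()` below keeps a line only if it has enough words and its non-space characters are mostly letters, which drops fragments such as table debris or symbol runs. The thresholds (5 words, 60% letters) are arbitrary assumptions to tune on your own data.

```python
def keep_line(text, min_words=5, min_alpha_ratio=0.6):
    """Heuristic filter: keep lines that are long enough and mostly letters."""
    words = text.split()
    if len(words) < min_words:
        return False  # too short to carry much signal
    # len(words) >= 1 here, so there is at least one non-space character
    non_space = [c for c in text if not c.isspace()]
    alpha = sum(c.isalpha() for c in non_space)
    return alpha / len(non_space) >= min_alpha_ratio


lines = [
    "The first flour mill in Minnesota was built in 1823",
    "| 1 | 2 | 3 | 4 |",
    "Too short",
]
print([line for line in lines if keep_line(line)])
```

Set the thresholds too aggressively and the filter also removes legitimate but unusual text (lists, formulas, short dialogue), which is exactly the kind of input a trained model should still handle.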

Additional Readings

Below are some resources that you may find useful:

Summary

In this article, you learned about datasets used to train language models and how to source common datasets from public repositories. This is just a starting point for dataset exploration. Consider leveraging existing libraries and tools to optimize dataset loading speed so that it does not become a bottleneck in your training process.