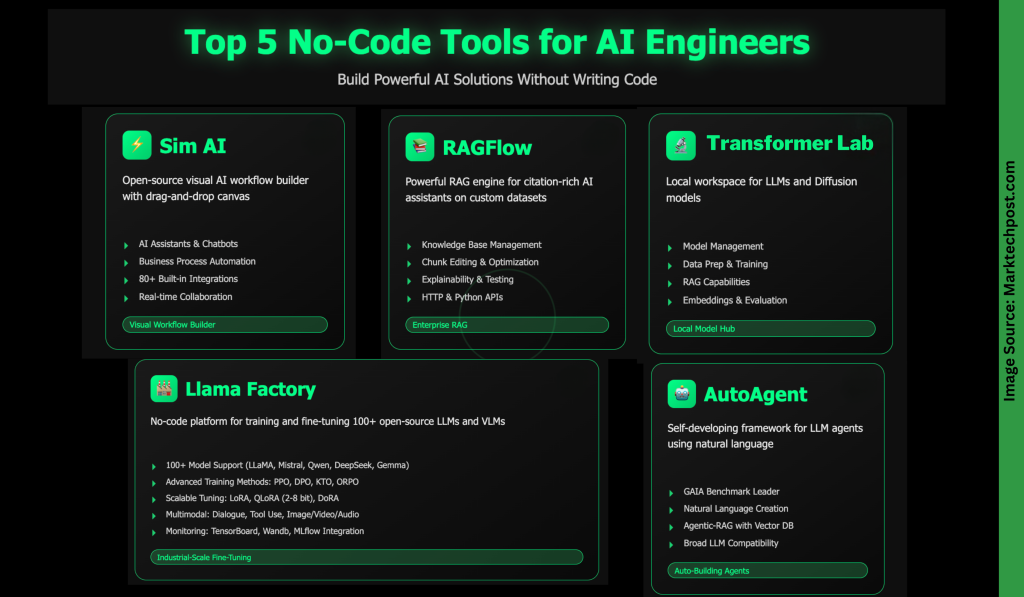

In today’s AI-driven world, no-code tools are transforming how people create and deploy intelligent applications. They empower anyone, regardless of coding expertise, to build solutions quickly and efficiently. From building enterprise-grade RAG systems to designing multi-agent workflows or fine-tuning hundreds of LLMs, these platforms dramatically reduce development time and effort. In this article, we’ll explore five powerful no-code tools that make building AI solutions faster and more accessible than ever.

Sim AI is an open-source platform for visually building and deploying AI agent workflows, with no coding required. Using its drag-and-drop canvas, you can connect AI models, APIs, databases, and business tools to create:

- AI Assistants & Chatbots: Agents that search the web, access calendars, send emails, and interact with business apps.

- Business Process Automation: Streamline tasks such as data entry, report creation, customer support, and content generation.

- Data Processing & Analysis: Extract insights, analyze datasets, create reports, and sync data across systems.

- API Integration Workflows: Orchestrate complex logic, unify services, and manage event-driven automation.

Key features:

- Visual canvas with “smart blocks” (AI, API, logic, output).

- Multiple triggers (chat, REST API, webhooks, schedulers, Slack/GitHub events).

- Real-time team collaboration with permissions control.

- 80+ built-in integrations (AI models, communication tools, productivity apps, dev platforms, search services, and databases).

- MCP support for custom integrations.

Deployment options:

- Cloud-hosted (managed infrastructure with scaling & monitoring).

- Self-hosted (via Docker, with local model support for data privacy).
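To picture what a canvas of connected blocks does once a trigger fires, the following is a minimal sketch in plain Python. It is not Sim AI's API or file format: the block functions, the `run_workflow` helper, and the ticket data are all hypothetical, and the "AI" block is a keyword rule standing in for a model call.

```python
def api_block(ctx):
    """Hypothetical API block: pretend to fetch a ticket from a help desk."""
    ctx["ticket"] = {"id": 101, "text": "My invoice is wrong, please help!"}
    return ctx


def ai_block(ctx):
    """Hypothetical AI block: a keyword rule stands in for a model call."""
    text = ctx["ticket"]["text"].lower()
    ctx["category"] = "billing" if "invoice" in text else "general"
    return ctx


def logic_block(ctx):
    """Hypothetical logic block: branch on the category chosen upstream."""
    ctx["queue"] = "finance-team" if ctx["category"] == "billing" else "support-team"
    return ctx


def output_block(ctx):
    """Hypothetical output block: format the message a workflow would send."""
    ctx["message"] = f"Ticket {ctx['ticket']['id']} routed to {ctx['queue']}"
    return ctx


def run_workflow(blocks, trigger_payload):
    """Pass a shared context through each block in order, like edges on a canvas."""
    ctx = dict(trigger_payload)
    for block in blocks:
        ctx = block(ctx)
    return ctx


# A webhook trigger starts the chain; each block reads and extends the context.
result = run_workflow(
    [api_block, ai_block, logic_block, output_block],
    {"trigger": "webhook"},
)
print(result["message"])
```

A visual builder hides this plumbing behind drag-and-drop connections, but the underlying idea of a trigger payload flowing through typed blocks is the same.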

RAGFlow is a powerful retrieval-augmented generation (RAG) engine that helps you build grounded, citation-rich AI assistants on top of your own datasets. It runs on x86 CPUs or NVIDIA GPUs (with optional ARM builds) and provides full or slim Docker images for quick deployment. After spinning up a local server, you can connect an LLM, via API or local runtimes like Ollama, to handle chat, embedding, or image-to-text tasks. RAGFlow supports most popular language models and lets you set defaults or customize models for each assistant.

Key capabilities include:

- Knowledge base management: Upload and parse files (PDF, Word, CSV, images, slides, and more) into datasets, select an embedding model, and organize content for efficient retrieval.

- Chunk editing & optimization: Inspect parsed chunks, add keywords, or manually adjust content to improve search accuracy.

- AI chat assistants: Create chats linked to one or multiple knowledge bases, configure fallback responses, and fine-tune prompts or model settings.

- Explainability & testing: Use built-in tools to validate retrieval quality, monitor performance, and view real-time citations.

- Integration & extensibility: Leverage HTTP and Python APIs for app integration, with an optional sandbox for safe code execution inside chats.
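To make the chunk, retrieve, and cite steps behind these capabilities concrete, here is a minimal sketch in plain Python. It does not use RAGFlow's API: the `chunk_text`, `embed`, `retrieve`, and `build_prompt` helpers, the bag-of-words "embedding", and the sample documents are all assumptions made for illustration. A real setup would use a learned embedding model and a proper document parser.

```python
import math
import re
from collections import Counter


def chunk_text(doc_id, text, max_words=12):
    """Split a document into fixed-size word chunks tagged with their source."""
    words = text.split()
    return [
        {"source": doc_id, "text": " ".join(words[i:i + max_words])}
        for i in range(0, len(words), max_words)
    ]


def embed(text):
    """Toy embedding: lowercase bag-of-words counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, top_k=2):
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:top_k]


def build_prompt(question, hits):
    """Number each retrieved chunk so the model can cite it as [1], [2], ..."""
    context = "\n".join(
        f"[{i + 1}] ({h['source']}) {h['text']}" for i, h in enumerate(hits)
    )
    return f"Answer using only the numbered sources.\n{context}\nQuestion: {question}"


# Hypothetical knowledge base with two tiny documents.
docs = {
    "handbook.pdf": "Employees accrue vacation days monthly. Unused vacation days expire in March.",
    "faq.docx": "The office is closed on public holidays. Parking permits are issued by reception.",
}
chunks = [c for doc_id, text in docs.items() for c in chunk_text(doc_id, text)]
question = "When do unused vacation days expire?"
hits = retrieve(question, chunks)
prompt = build_prompt(question, hits)
print(prompt)
```

The numbered sources in the prompt are what make citations possible: the assistant can point back to `[1]` and the user can inspect the exact chunk it came from.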

Transformer Lab is a free, open-source workspace for Large Language Models (LLMs) and Diffusion models, designed to run on your local machine (whether that’s a GPU, TPU, or Apple M-series Mac) or in the cloud. It lets you download, chat with, and evaluate LLMs, generate images using Diffusion models, and compute embeddings, all from one flexible environment.

Key capabilities include:

- Model management: Download and interact with LLMs, or generate images using state-of-the-art Diffusion models.

- Data preparation & training: Create datasets, fine-tune, or train models, including support for RLHF and preference tuning.

- Retrieval-augmented generation (RAG): Use your own documents to power intelligent, grounded conversations.

- Embeddings & evaluation: Calculate embeddings and assess model performance across different inference engines.

- Extensibility & community: Build plugins, contribute to the core application, and collaborate via the active Discord community.

LLaMA-Factory is a powerful no-code platform for training and fine-tuning open-source Large Language Models (LLMs) and Vision-Language Models (VLMs). It supports over 100 models, multimodal fine-tuning, advanced optimization algorithms, and scalable resource configurations. Designed for researchers and practitioners, it offers extensive tools for pre-training, supervised fine-tuning, reward modeling, and reinforcement learning methods like PPO and DPO, along with easy experiment tracking and faster inference.

Key highlights include:

- Broad model support: Works with LLaMA, Mistral, Qwen, DeepSeek, Gemma, ChatGLM, Phi, Yi, Mixtral-MoE, and many more.

- Training methods: Supports continuous pre-training, multimodal SFT, reward modeling, PPO, DPO, KTO, ORPO, and more.

- Scalable tuning options: Full-tuning, freeze-tuning, LoRA, QLoRA (2–8 bit), OFT, DoRA, and other resource-efficient techniques.

- Advanced algorithms & optimizations: Includes GaLore, BAdam, APOLLO, Muon, FlashAttention-2, RoPE scaling, NEFTune, rsLoRA, and others.

- Tasks & modalities: Handles dialogue, tool use, image/video/audio understanding, visual grounding, and more.

- Monitoring & inference: Integrates with LlamaBoard, TensorBoard, Wandb, MLflow, and SwanLab, plus offers fast inference via OpenAI-style APIs, Gradio UI, or CLI with vLLM/SGLang workers.

- Flexible infrastructure: Compatible with PyTorch, Hugging Face Transformers, DeepSpeed, BitsAndBytes, and supports both CPU/GPU setups with memory-efficient quantization.
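To see why LoRA counts as a resource-efficient option next to full-tuning, a small worked example helps. The sketch below is plain Python, not LLaMA-Factory code: the helper names, the toy matrices, and the 4096-wide layer are assumptions chosen for illustration. It uses the standard LoRA formulation, where a frozen weight `W` is adjusted by a low-rank product scaled by `alpha / r`.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * (B @ A), where r is the adapter rank."""
    r = len(a)            # A has shape (r, d_in)
    delta = matmul(b, a)  # B has shape (d_out, r), so delta is (d_out, d_in)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, rank):
    """Compare trainable parameter counts: full-tuning vs. a LoRA adapter."""
    full = d_out * d_in
    lora = rank * (d_in + d_out)
    return full, lora


# Toy layer: d_out=2, d_in=3, rank r=1. W stays frozen; only A and B would train.
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
a = [[1.0, 2.0, 3.0]]
b = [[0.5], [0.0]]
merged = lora_effective_weight(w, a, b, alpha=2.0)
print(merged)

# Assumed 4096 x 4096 projection with rank 8.
full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

For the assumed 4096 x 4096 layer, a rank-8 adapter trains 65,536 parameters instead of 16,777,216, which is under half a percent of the full matrix.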

AutoAgent is a fully automated, self-developing framework that lets you create and deploy LLM-powered agents using natural language alone. Designed to simplify complex workflows, it lets you build, customize, and run intelligent tools and assistants without writing a single line of code.

Key features include:

- High performance: Achieves top-tier results on the GAIA benchmark, rivaling advanced deep research agents.

- Effortless agent & workflow creation: Build tools, agents, and workflows through simple natural language prompts, with no coding required.

- Agentic-RAG with native vector database: Comes with a self-managing vector database, offering superior retrieval compared to traditional solutions like LangChain.

- Broad LLM compatibility: Integrates seamlessly with leading model providers and runtimes such as OpenAI, Anthropic, DeepSeek, vLLM, Grok, Hugging Face, and more.

- Versatile interaction modes: Supports both function-calling and ReAct-style reasoning for flexible use cases.

- Lightweight & extensible: A dynamic personal AI assistant that’s easy to customize and extend while remaining resource-efficient.
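For readers unfamiliar with ReAct-style reasoning, the pattern alternates a model "thought", a tool "action", and an "observation" fed back into the next step. The sketch below illustrates that loop in plain Python. It is not AutoAgent's API: `scripted_llm` is a hard-coded stand-in for a real model, and the `calculator` tool, the `TOOLS` registry, and the `Action: tool[input]` text format are assumptions made for illustration.

```python
import operator
import re

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression):
    """Hypothetical tool: evaluate 'a op b' for the four basic operators."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


TOOLS = {"calculator": calculator}


def scripted_llm(transcript):
    """Stand-in for a model: request a tool first, then answer from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I need to multiply.\nAction: calculator[6 * 7]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"


def react_loop(question, llm, tools, max_steps=5):
    """Alternate model replies and tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += "\n" + reply
        final = re.search(r"Final Answer:\s*(.+)", reply)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", reply)
        if action is None:
            break  # the model neither answered nor requested a tool
        name, arg = action.groups()
        observation = tools[name](arg) if name in tools else f"unknown tool {name}"
        transcript += f"\nObservation: {observation}"
    return None, transcript


answer, transcript = react_loop("What is 6 times 7?", scripted_llm, TOOLS)
print(transcript)
print("Answer:", answer)
```

Function-calling follows the same loop, except the model returns a structured tool request instead of free text that has to be parsed with a regular expression.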