This article discusses a new release of a multimodal Hunyuan Video world model called ‘HunyuanCustom’. The new paper’s breadth of coverage, combined with several issues in many of the supplied example videos at the project page*, constrains us to more general coverage than usual, and to limited reproduction of the huge amount of video material accompanying this release (since many of the videos require significant re-editing and processing in order to improve the readability of the layout).

Please note additionally that the paper refers to the API-based generative system Kling as ‘Keling’. For clarity, I refer to ‘Kling’ instead throughout.

Tencent is in the process of releasing a new version of its Hunyuan Video model, titled HunyuanCustom. The new release is apparently capable of making Hunyuan LoRA models redundant, by allowing the user to create ‘deepfake’-style video customization through a single image:

Click to play. Prompt: ‘A man is listening to music and cooking snail noodles in the kitchen’. The new method compared to both closed-source and open-source methods, including Kling, which is a significant opponent in this space. Source: https://hunyuancustom.github.io/ (warning: CPU/memory-intensive site!)

In the left-most column of the video above, we see the single source image supplied to HunyuanCustom, followed by the new system’s interpretation of the prompt in the second column, next to it. The remaining columns show the results from various proprietary and FOSS systems: Kling; Vidu; Pika; Hailuo; and the Wan-based SkyReels-A2.

In the video below, we see renders of three scenarios essential to this release: respectively, person + object; single-character emulation; and virtual try-on (person + clothes):

Click to play. Three examples edited from the material at the supporting site for Hunyuan Video.

We can notice a few things from these examples, mostly related to the system relying on a single source image, instead of multiple images of the same subject.

In the first clip, the man is essentially still facing the camera. He dips his head down and sideways at not much more than 20-25 degrees of rotation, but, at an inclination in excess of that, the system would really have to start guessing what he looks like in profile. This is hard, probably impossible, to gauge accurately from a sole frontal image.

In the second example, we see that the little girl is smiling in the rendered video as she is in the single static source image. Again, with this sole image as reference, HunyuanCustom would have to make a relatively uninformed guess about what her ‘resting face’ looks like. Additionally, her face does not deviate from a camera-facing stance by more than in the prior example (‘man eating crisps’).

In the last example, we see that since the source material (the woman and the clothes she is prompted into wearing) are not complete images, the render has cropped the scenario to fit, which is actually rather a good solution to a data issue!

The point is that though the new system can handle multiple images (such as person + crisps, or person + clothes), it does not apparently allow for multiple angles or alternative views of a single character, so that diverse expressions or unusual angles could be accommodated. To this extent, the system may therefore struggle to replace the growing ecosystem of LoRA models that have sprung up around HunyuanVideo since its release last December, since these can help HunyuanVideo to produce consistent characters from any angle and with any facial expression represented in the training dataset (20-60 images is typical).

Wired for Sound

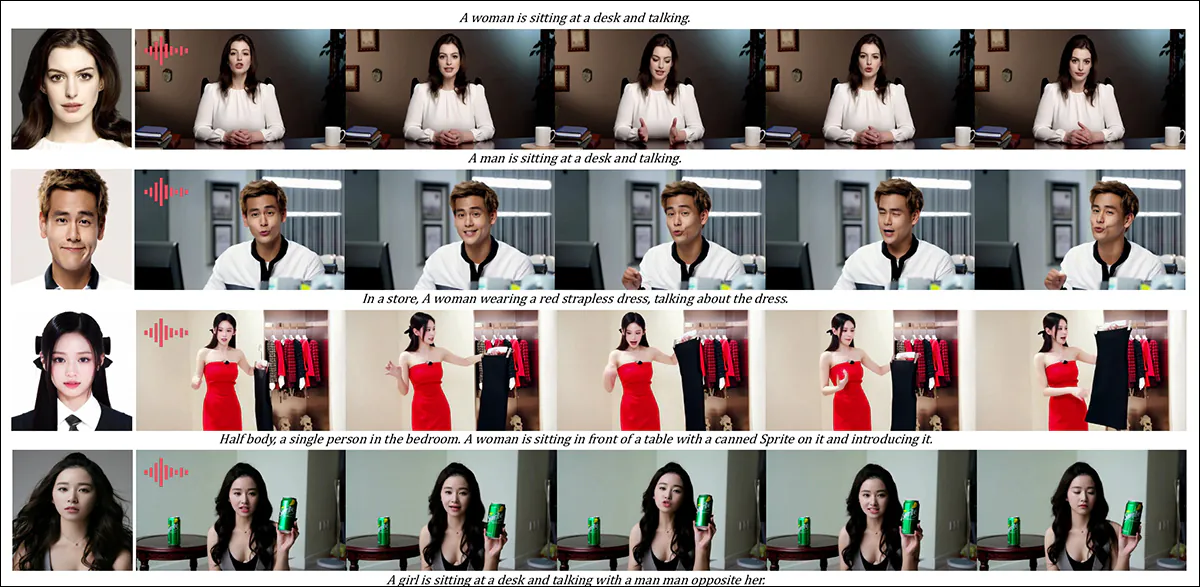

For audio, HunyuanCustom leverages the LatentSync system (notoriously hard for hobbyists to set up and get good results from) for obtaining lip movements that are matched to audio and text that the user supplies:

Features audio. Click to play. Various examples of lip-sync from the HunyuanCustom supplementary site, edited together.

At the time of writing, there are no English-language examples, but these appear to be rather good, the more so if the method of creating them is easily installable and accessible.

Editing Existing Video

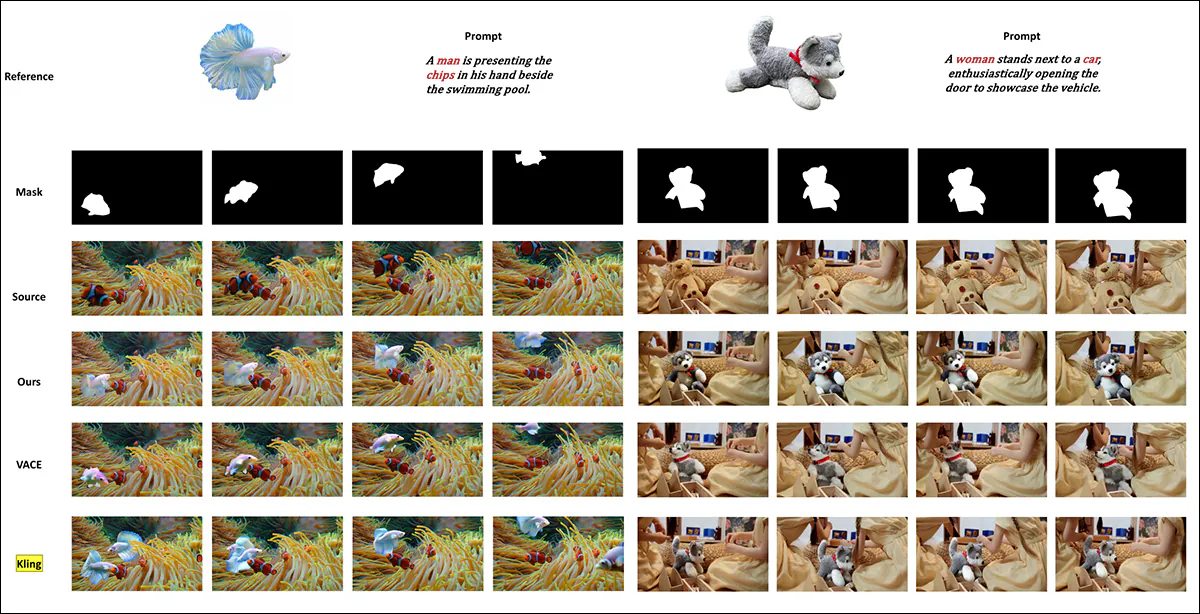

The new system offers what appear to be very impressive results for video-to-video (V2V, or Vid2Vid) editing, wherein a segment of an existing (real) video is masked off and intelligently replaced by a subject given in a single reference image. Below is an example from the supplementary materials site:

Click to play. Only the central object is targeted, but what remains around it also gets altered in a HunyuanCustom vid2vid pass.

As we can see, and as is standard in a vid2vid scenario, the entire video is to some extent altered by the process, though most altered in the targeted region, i.e., the plush toy. Presumably pipelines could be developed to create such transformations under a garbage matte approach that leaves the majority of the video content identical to the original. This is what Adobe Firefly does under the hood, and does quite well, but it is an under-studied process in the FOSS generative scene.
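The garbage-matte idea can be sketched in a few lines of plain Python: the edited frame is blended back over the original through a matte, so that pixels outside the matte stay identical to the source. The `composite` function, the grayscale list-of-rows frame format, and the sample values are all illustrative assumptions; this is not a description of how HunyuanCustom or Adobe Firefly actually implements the step.

```python
from typing import List

# A grayscale frame as rows of pixel values in [0, 1] (an assumed toy format).
Frame = List[List[float]]


def composite(original: Frame, edited: Frame, matte: Frame) -> Frame:
    """Blend edited pixels into the original only where the matte is non-zero.

    Matte value 1.0 takes the edited pixel, 0.0 keeps the original pixel,
    and values in between feather the edge.
    """
    return [
        [m * e + (1.0 - m) * o for o, e, m in zip(orow, erow, mrow)]
        for orow, erow, mrow in zip(original, edited, matte)
    ]


original = [[0.2, 0.2, 0.2], [0.2, 0.2, 0.2]]
edited = [[0.9, 0.8, 0.7], [0.6, 0.5, 0.4]]   # the vid2vid pass changed every pixel
matte = [[0.0, 1.0, 0.0], [0.0, 0.5, 0.0]]    # only the middle column is targeted
result = composite(original, edited, matte)
```

In a real pipeline the matte would typically be a dilated and feathered version of the edit mask, applied per frame and per color channel.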

That said, most of the alternative examples provided do a better job of targeting these integrations, as we can see in the assembled compilation below:

Click to play. Diverse examples of interjected content using vid2vid in HunyuanCustom, exhibiting notable respect for the untargeted material.

A New Start?

This initiative is a development of the Hunyuan Video project, not a hard pivot away from that development stream. The project’s enhancements are introduced as discrete architectural insertions rather than sweeping structural changes, aiming to allow the model to maintain identity fidelity across frames without relying on subject-specific fine-tuning, as with LoRA or textual inversion approaches.

To be clear, therefore, HunyuanCustom is not trained from scratch, but rather is a fine-tuning of the December 2024 HunyuanVideo foundation model.

Those who have developed HunyuanVideo LoRAs may wonder if they will still work with this new edition, or whether they will have to reinvent the LoRA wheel yet again if they want more customization capabilities than are built into this new release.

In general, a heavily fine-tuned release of a hyperscale model alters the model weights enough that LoRAs made for the earlier model will not work properly, or at all, with the newly-refined model.

Sometimes, however, a fine-tune’s popularity can challenge its origins: one example of a fine-tune becoming an effective fork, with a dedicated ecosystem and followers of its own, is the Pony Diffusion tuning of Stable Diffusion XL (SDXL). Pony currently has 592,000+ downloads on the ever-changing CivitAI domain, with a vast range of LoRAs that have used Pony (and not SDXL) as the base model, and which require Pony at inference time.

Releasing

The project page for the new paper (which is titled HunyuanCustom: A Multimodal-Driven Architecture for Customized Video Generation) features links to a GitHub site that, as I write, has just become functional, and appears to contain all code and necessary weights for local implementation, together with a proposed timeline (where the only important thing yet to come is ComfyUI integration).

At the time of writing, the project’s Hugging Face presence is still a 404. There is, however, an API-based version where one can apparently demo the system, so long as you can provide a WeChat scan code.

I have rarely seen such an elaborate and extensive usage of such a wide variety of projects in one assembly, as is evident in HunyuanCustom, and presumably some of the licenses would in any case oblige a full release.

Two models are announced at the GitHub page: a 720px1280px version requiring 80GB of GPU Peak Memory, and a 512px896px version requiring 60GB of GPU Peak Memory.

The repository states ‘The minimum GPU memory required is 24GB for 720px1280px129f but very slow…We recommend using a GPU with 80GB of memory for better generation quality’, and reiterates that the system has only been tested so far on Linux.

The earlier Hunyuan Video model has, since official release, been quantized down to sizes where it can be run on less than 24GB of VRAM, and it seems reasonable to assume that the new model will likewise be adapted into more consumer-friendly forms by the community, and that it will quickly be adapted for use on Windows systems too.

Due to time constraints and the overwhelming amount of information accompanying this release, we can only take a broader, rather than in-depth, look at this release. Nonetheless, let’s pop the hood on HunyuanCustom a little.

A Look at the Paper

The data pipeline for HunyuanCustom, apparently compliant with the GDPR framework, incorporates both synthesized and open-source video datasets, including OpenHumanVid, with eight core categories represented: humans, animals, plants, landscapes, vehicles, objects, architecture, and anime.

From the release paper, an overview of the diverse contributing packages in the HunyuanCustom data construction pipeline. Source: https://arxiv.org/pdf/2505.04512

Initial filtering begins with PySceneDetect, which segments videos into single-shot clips. TextBPN-Plus-Plus is then used to remove videos containing excessive on-screen text, subtitles, watermarks, or logos.

To address inconsistencies in resolution and duration, clips are standardized to five seconds in length and resized to 512 or 720 pixels on the short side. Aesthetic filtering is handled using Koala-36M, with a custom threshold of 0.06 applied for the custom dataset curated by the new paper’s researchers.
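As a rough illustration of the standardization step, the sketch below computes a short-side resize that preserves aspect ratio, and cuts a shot into five-second windows. Both helper names are hypothetical, and the details (rounding to even dimensions, splitting a shot into consecutive windows instead of trimming it) are assumptions, since the article does not say how the authors implemented this.

```python
from typing import List, Tuple


def short_side_resize(width: int, height: int, short_side: int) -> Tuple[int, int]:
    """Scale (width, height) so the shorter edge equals short_side, keeping aspect ratio."""
    scale = short_side / min(width, height)
    # Round to even numbers, which most video codecs expect (an assumption, not from the paper).
    new_w = int(round(width * scale / 2)) * 2
    new_h = int(round(height * scale / 2)) * 2
    return new_w, new_h


def five_second_windows(duration_s: float, clip_len_s: float = 5.0) -> List[Tuple[float, float]]:
    """Split a shot into consecutive, non-overlapping windows of clip_len_s seconds.

    Any remainder shorter than clip_len_s is dropped.
    """
    count = int(duration_s // clip_len_s)
    return [(i * clip_len_s, (i + 1) * clip_len_s) for i in range(count)]


landscape = short_side_resize(1920, 1080, 720)   # 16:9 source, 720 px short side
portrait = short_side_resize(1080, 1920, 512)    # 9:16 source, 512 px short side
windows = five_second_windows(12.0)              # a 12-second single-shot clip
```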

The subject extraction process combines the Qwen7B Large Language Model (LLM), the YOLO11X object recognition framework, and the popular InsightFace architecture, to identify and validate human identities.

For non-human subjects, QwenVL and Grounded SAM 2 are used to extract relevant bounding boxes, which are discarded if too small.

Examples of semantic segmentation with Grounded SAM 2, used in the Hunyuan Control project. Source: https://github.com/IDEA-Research/Grounded-SAM-2

Multi-subject extraction utilizes Florence2 for bounding box annotation, and Grounded SAM 2 for segmentation, followed by clustering and temporal segmentation of training frames.

The processed clips are further enhanced via annotation, using a proprietary structured-labeling system developed by the Hunyuan team, which furnishes layered metadata such as descriptions and camera motion cues.

Mask augmentation strategies, including conversion to bounding boxes, were applied during training to reduce overfitting and ensure that the model adapts to diverse object shapes.

Audio data was synchronized using the aforementioned LatentSync, and clips were discarded if their synchronization scores fell below a minimum threshold.

The blind image quality assessment framework HyperIQA was used to exclude videos scoring under 40 (on HyperIQA’s bespoke scale). Valid audio tracks were then processed with Whisper to extract features for downstream tasks.
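The two score-based gates described above amount to simple threshold filters. The sketch below assumes each clip already carries a lip-sync score and a HyperIQA-style quality score; the `Clip` class, the sample scores, and the sync threshold of 3.0 are hypothetical (the article gives no value for the latter), while the quality floor of 40 is the figure quoted above.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    sync_score: float  # lip-sync confidence; the scale here is hypothetical
    iqa_score: float   # HyperIQA-style image quality score


def passes_filters(clip: Clip, min_sync: float, min_iqa: float = 40.0) -> bool:
    """Keep a clip only if it clears both the sync gate and the quality gate."""
    return clip.sync_score >= min_sync and clip.iqa_score >= min_iqa


clips = [
    Clip("a.mp4", sync_score=7.2, iqa_score=55.0),  # clears both gates
    Clip("b.mp4", sync_score=2.1, iqa_score=61.0),  # fails the sync gate
    Clip("c.mp4", sync_score=8.0, iqa_score=33.5),  # fails the quality gate
]
kept = [c.name for c in clips if passes_filters(c, min_sync=3.0)]
```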

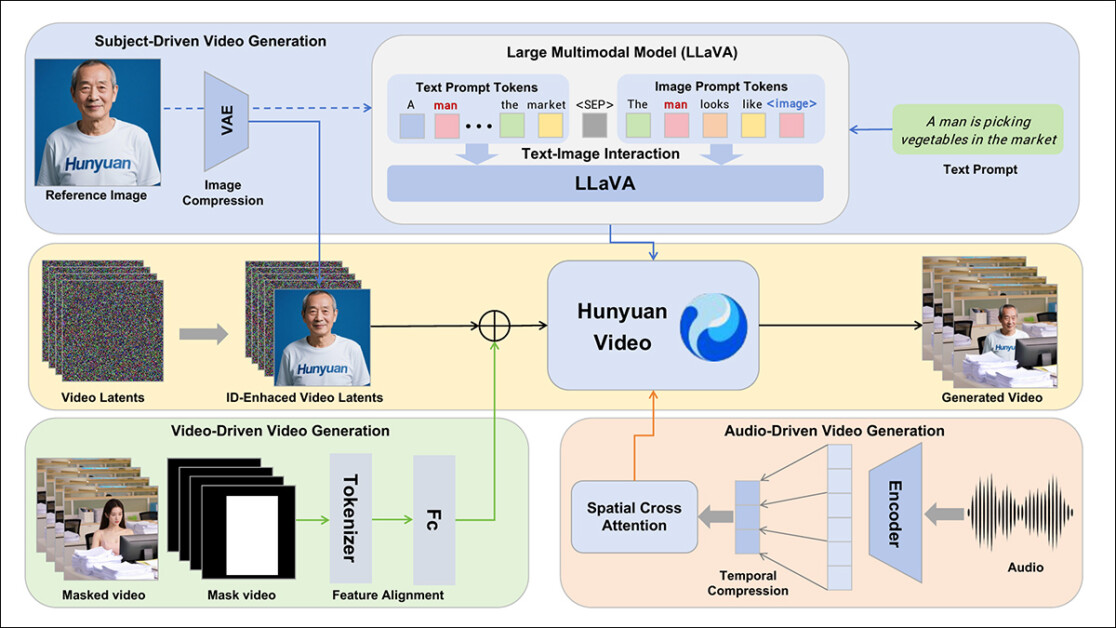

The authors incorporate the LLaVA language assistant model during the annotation phase, and they emphasize the central position that this framework has in HunyuanCustom. LLaVA is used to generate image captions and assist in aligning visual content with text prompts, supporting the construction of a coherent training signal across modalities:

The HunyuanCustom framework supports identity-consistent video generation conditioned on text, image, audio, and video inputs.

By leveraging LLaVA’s vision-language alignment capabilities, the pipeline gains an additional layer of semantic consistency between visual elements and their textual descriptions, which is especially valuable in multi-subject or complex-scene scenarios.

Custom Video

To allow video generation based on a reference image and a prompt, two modules centered around LLaVA were created, first adapting the input structure of HunyuanVideo so that it could accept an image along with text.

This involved formatting the prompt in a way that embeds the image directly or tags it with a short identity description. A separator token was used to stop the image embedding from overwhelming the prompt content.

Since LLaVA’s visual encoder tends to compress or discard fine-grained spatial details during the alignment of image and text features (particularly when translating a single reference image into a general semantic embedding), an identity enhancement module was incorporated. Since nearly all video latent diffusion models have some difficulty maintaining an identity without a LoRA, even in a five-second clip, the performance of this module in community testing may prove significant.

In any case, the reference image is then resized and encoded using the causal 3D-VAE from the original HunyuanVideo model, and its latent is inserted into the video latent across the temporal axis, with a spatial offset applied to prevent the image from being directly reproduced in the output, while still guiding generation.
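One way to picture the temporal insertion with a spatial offset is in terms of the position indices given to each latent token. In the toy sketch below, the reference-image tokens are placed in their own time slot ahead of the video and their spatial coordinates are shifted, so that no image token shares a position with any video token. The `position_ids` helper, the time index of -1, and the offset of one full frame size are illustrative assumptions, not details confirmed by the article.

```python
from typing import List, Tuple

Position = Tuple[int, int, int]  # (time, row, column) index of one latent token


def position_ids(frames: int, height: int, width: int,
                 t_start: int = 0, dh: int = 0, dw: int = 0) -> List[Position]:
    """List (t, h, w) positions for a latent of the given size, with optional offsets."""
    return [
        (t_start + t, h + dh, w + dw)
        for t in range(frames)
        for h in range(height)
        for w in range(width)
    ]


# A tiny video latent: 3 frames of 2x2 tokens.
video_pos = position_ids(frames=3, height=2, width=2)

# The reference image as one extra "frame", placed before the video in time
# and shifted spatially by one frame size (both choices are assumptions).
image_pos = position_ids(frames=1, height=2, width=2, t_start=-1, dh=2, dw=2)

# Concatenate along the temporal axis: image tokens first, then video tokens.
sequence = image_pos + video_pos
```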

The model was trained using Flow Matching, with noise samples drawn from a logit-normal distribution, and the network was trained to recover the correct video from these noisy latents. LLaVA and the video generator were both fine-tuned together so that the image and prompt could guide the output more fluently and keep the subject identity consistent.
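A minimal sketch of how one Flow Matching training example might be built, in plain Python. It assumes, as in common rectified-flow setups, that the logit-normal distribution is applied to the interpolation time `t`, and that the noisy latent is a straight-line blend of Gaussian noise and the clean latent; the function names and this particular convention are assumptions rather than details taken from the paper.

```python
import math
import random
from typing import List, Tuple


def sample_logit_normal(rng: random.Random, mean: float = 0.0, std: float = 1.0) -> float:
    """Draw t = sigmoid(z) with z ~ N(mean, std), giving a value strictly inside (0, 1)."""
    z = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-z))


def flow_matching_example(clean: List[float], rng: random.Random) -> Tuple[List[float], float, List[float]]:
    """Return (noisy latent, time, target velocity) for one training example.

    Assumed convention: x_t = (1 - t) * noise + t * clean, so the
    regression target for the network is the velocity (clean - noise).
    """
    t = sample_logit_normal(rng)
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    x_t = [(1.0 - t) * n + t * c for n, c in zip(noise, clean)]
    velocity = [c - n for n, c in zip(noise, clean)]
    return x_t, t, velocity


rng = random.Random(0)
clean_latent = [1.0, 2.0, -0.5]
x_t, t, velocity = flow_matching_example(clean_latent, rng)

# Following the target velocity for the remaining time (1 - t) lands on the clean latent.
recovered = [x + (1.0 - t) * v for x, v in zip(x_t, velocity)]
```

A training loop would compare the network's predicted velocity at `(x_t, t)` against `velocity`, typically with a mean squared error loss.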

For multi-subject prompts, each image-text pair was embedded separately and assigned a distinct temporal position, allowing identities to be distinguished, and supporting the generation of scenes involving multiple interacting subjects.

Sound and Vision

HunyuanCustom conditions audio/speech generation using both user-input audio and a text prompt, allowing characters to speak within scenes that reflect the described setting.

To support this, an Identity-disentangled AudioNet module introduces audio features without disrupting the identity signals embedded from the reference image and prompt. These features are aligned with the compressed video timeline, divided into frame-level segments, and injected using a spatial cross-attention mechanism that keeps each frame isolated, preserving subject consistency and avoiding temporal interference.
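The frame-isolated injection can be illustrated with a toy cross-attention in which the spatial tokens of each video frame attend only to the audio tokens assigned to that same frame. This sketch omits the learned query, key, and value projections that a real layer would have, and the function and variable names are hypothetical; it is meant only to show why audio from one frame cannot leak into another.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def framewise_cross_attention(video: List[List[Vector]], audio: List[List[Vector]]) -> List[List[Vector]]:
    """video[f][i] is spatial token i of frame f; audio[f][j] is audio token j for frame f.

    Each video token attends only to audio tokens of its own frame, and the
    attended audio context is added back residually.
    """
    out = []
    for v_tokens, a_tokens in zip(video, audio):
        frame_out = []
        for q in v_tokens:
            scale = math.sqrt(len(q))
            weights = softmax([dot(q, k) / scale for k in a_tokens])
            context = [sum(w * a[d] for w, a in zip(weights, a_tokens)) for d in range(len(q))]
            frame_out.append([x + c for x, c in zip(q, context)])
        out.append(frame_out)
    return out


video = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]]]  # 2 frames, 2 tokens each
audio_a = [[[1.0, 0.0]], [[0.0, 1.0]]]                        # 1 audio token per frame
audio_b = [[[1.0, 0.0]], [[3.0, 3.0]]]                        # only frame 1's audio differs

out_a = framewise_cross_attention(video, audio_a)
out_b = framewise_cross_attention(video, audio_b)
```

Changing the audio for the second frame alters only the second frame's output, which is the isolation property described above.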

A second temporal injection module provides finer control over timing and motion, working in tandem with AudioNet, mapping audio features to specific regions of the latent sequence, and using a Multi-Layer Perceptron (MLP) to convert them into token-wise motion offsets. This allows gestures and facial movement to follow the rhythm and emphasis of the spoken input with greater precision.

HunyuanCustom allows subjects in existing videos to be edited directly, replacing or inserting people or objects into a scene without needing to rebuild the entire clip from scratch. This makes it useful for tasks that involve altering appearance or motion in a targeted way.

Click to play. A further example from the supplementary site.

To facilitate efficient subject-replacement in existing videos, the new system avoids the resource-intensive approach of recent methods such as the currently-popular VACE, or those that merge entire video sequences together, favoring instead the compression of a reference video using the pretrained causal 3D-VAE, aligning it with the generation pipeline’s internal video latents, and then adding the two together. This keeps the process relatively lightweight, while still allowing external video content to guide the output.

A small neural network handles the alignment between the clean input video and the noisy latents used in generation. The system tests two ways of injecting this information: merging the two sets of features before compressing them again; and adding the features frame by frame. The second method works better, the authors found, and avoids quality loss while keeping the computational load unchanged.
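The frame-by-frame addition can be pictured as follows. In this toy sketch, a single affine map stands in for the small alignment network, and each aligned conditioning frame is simply added to the corresponding noisy latent frame. The function names, the affine stand-in, and the sample values are assumptions for illustration; the point is that addition leaves the sequence length unchanged, whereas concatenating the two sequences would double it.

```python
from typing import List

LatentFrame = List[float]


def align(frame: LatentFrame, weight: float, bias: float) -> LatentFrame:
    """Stand-in for the small alignment network: one affine map applied per value."""
    return [weight * x + bias for x in frame]


def add_framewise(noisy: List[LatentFrame], cond: List[LatentFrame],
                  weight: float = 1.0, bias: float = 0.0) -> List[LatentFrame]:
    """Add each aligned conditioning frame to the noisy latent frame at the same index."""
    return [
        [n + a for n, a in zip(noisy_frame, align(cond_frame, weight, bias))]
        for noisy_frame, cond_frame in zip(noisy, cond)
    ]


noisy = [[0.5, -0.5], [1.0, 0.0]]   # noisy generation latents, 2 frames
cond = [[1.0, 1.0], [2.0, 2.0]]     # clean latents of the video being edited

guided = add_framewise(noisy, cond, weight=0.5)

# For contrast: concatenating along the frame axis doubles the sequence length.
concatenated = noisy + cond
```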

Data and Tests

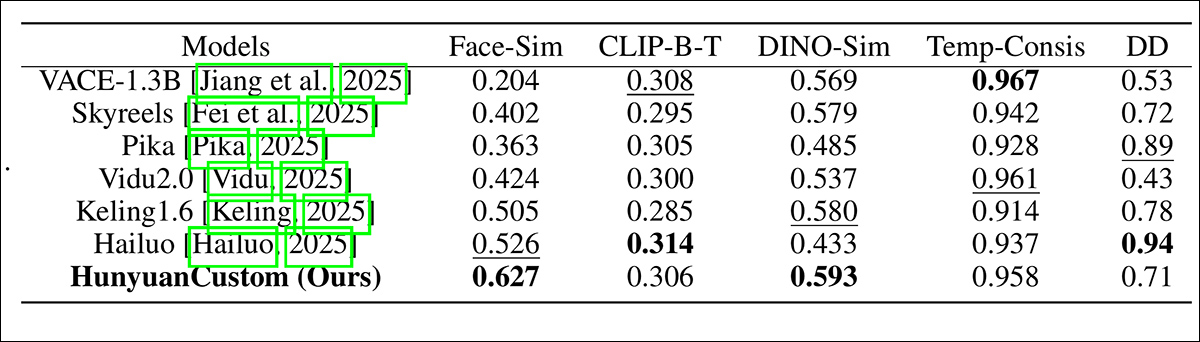

In tests, the metrics used were: identity consistency, using ArcFace to extract facial embeddings from both the reference image and each frame of the generated video, and then calculating the average cosine similarity between them; subject similarity, via sending YOLO11x segments to Dino 2 for comparison; CLIP-B text-video alignment, which measures similarity between the prompt and the generated video; CLIP-B again, for temporal consistency, calculating similarity between each frame and both its neighboring frames and the first frame; and dynamic degree, as defined by VBench.
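Two of these metrics reduce to averaged cosine similarities over embedding vectors, as the sketch below shows. It assumes the embeddings have already been extracted (by ArcFace for faces, or by CLIP for frames); the function names and the exact way that the neighbor and first-frame similarities are averaged are assumptions, since the article does not spell out the aggregation.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def face_sim(reference: Vector, frames: List[Vector]) -> float:
    """Average cosine similarity between the reference embedding and each frame embedding."""
    return sum(cosine(reference, f) for f in frames) / len(frames)


def temporal_consistency(frames: List[Vector]) -> float:
    """Average similarity of each frame to its previous frame and to the first frame."""
    scores = []
    for i in range(1, len(frames)):
        scores.append(cosine(frames[i], frames[i - 1]))  # neighboring frame
        scores.append(cosine(frames[i], frames[0]))      # first frame
    return sum(scores) / len(scores)


reference = [1.0, 0.0]
frame_embeddings = [[1.0, 0.0], [0.0, 1.0]]  # one perfect match, one orthogonal frame
identity_score = face_sim(reference, frame_embeddings)

static_clip = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
consistency_score = temporal_consistency(static_clip)
```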

As indicated earlier, the baseline closed-source competitors were Hailuo; Vidu 2.0; Kling (1.6); and Pika. The competing FOSS frameworks were VACE and SkyReels-A2.

Model performance evaluation comparing HunyuanCustom with leading video customization methods across ID consistency (Face-Sim), subject similarity (DINO-Sim), text-video alignment (CLIP-B-T), temporal consistency (Temp-Consis), and motion intensity (DD). Optimal and sub-optimal results are shown in bold and underlined, respectively.

Of these results, the authors state:

‘Our [HunyuanCustom] achieves the best ID consistency and subject consistency. It also achieves comparable results in prompt following and temporal consistency. [Hailuo] has the best clip score because it can follow text instructions well with only ID consistency, sacrificing the consistency of non-human subjects (the worst DINO-Sim). In terms of Dynamic-degree, [Vidu] and [VACE] perform poorly, which may be due to the small size of the model.’

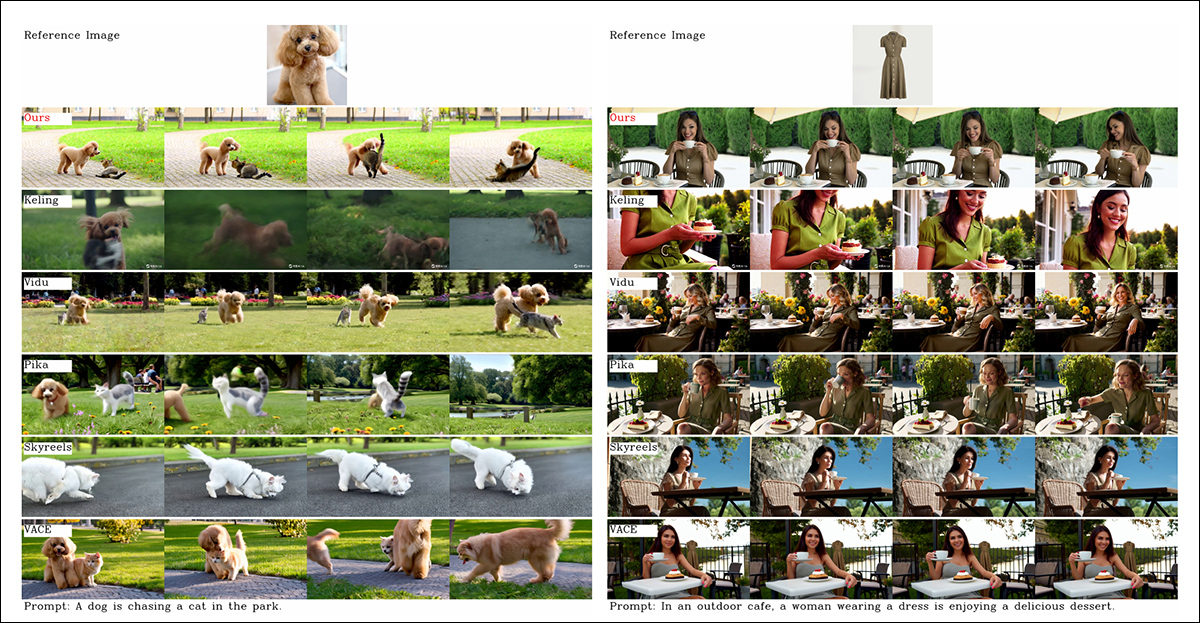

Though the project site is saturated with comparison videos (the layout of which seems to have been designed for website aesthetics rather than easy comparison), it does not currently feature a video equivalent of the static results crammed together in the PDF, in regard to the initial qualitative tests. Though I include it here, I encourage the reader to make a close examination of the videos at the project site, as they give a better impression of the outcomes:

From the paper, a comparison on object-centered video customization. Though the viewer should (as always) refer to the source PDF for better resolution, the videos at the project site might be a more illuminating resource in this case.

The authors comment here:

‘It can be seen that [Vidu], [Skyreels A2] and our method achieve relatively good results in prompt alignment and subject consistency, but our video quality is better than Vidu and Skyreels, thanks to the good video generation performance of our base model, i.e., [Hunyuanvideo-13B].

‘Among commercial products, although [Kling] has a good video quality, the first frame of the video has a copy-paste [problem], and sometimes the subject moves too fast and [blurs], leading a poor viewing experience.’

The authors further comment that Pika performs poorly in terms of temporal consistency, introducing subtitle artifacts (effects from poor data curation, where text elements in video clips have been allowed to pollute the core concepts).

Hailuo maintains facial identity, they state, but fails to preserve full-body consistency. Among open-source methods, VACE, the researchers assert, is unable to maintain identity consistency, whereas they contend that HunyuanCustom produces videos with strong identity preservation, while retaining quality and diversity.

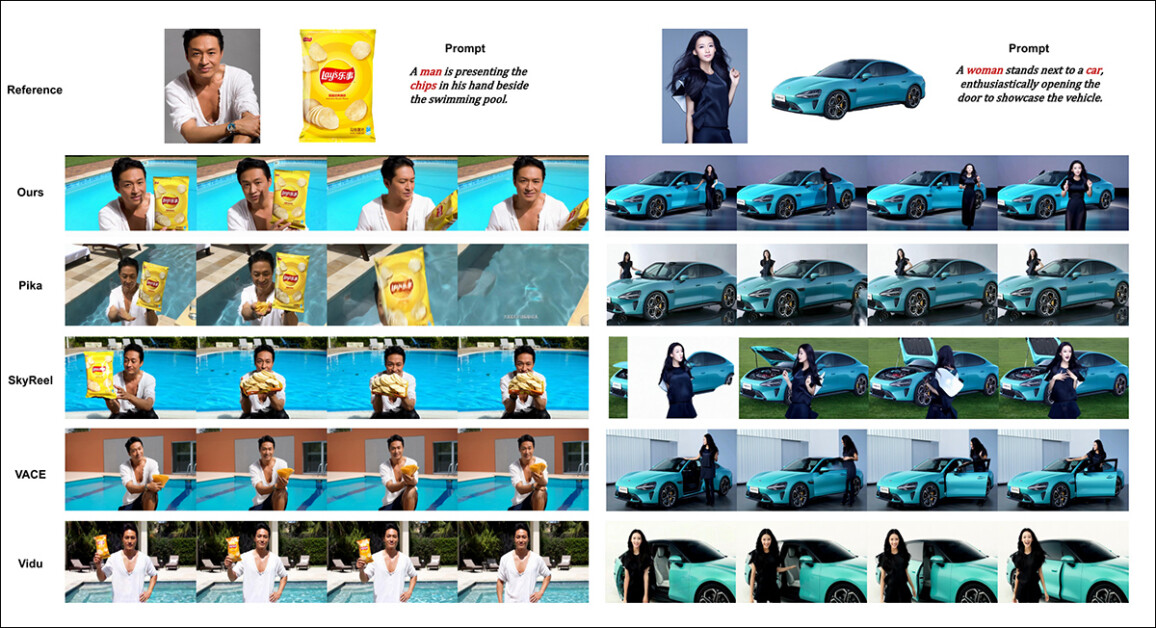

Next, tests were conducted for multi-subject video customization, against the same contenders. As in the previous example, the flattened PDF results are not print equivalents of videos available at the project site, but are unique among the results presented:

Comparisons using multi-subject video customizations. Please see PDF for better detail and resolution.

The paper states:

‘[Pika] can generate the specified subjects but exhibits instability in video frames, with instances of a man disappearing in one scenario and a woman failing to open a door as prompted. [Vidu] and [VACE] partially capture human identity but lose significant details of non-human objects, indicating a limitation in representing non-human subjects.

‘[SkyReels A2] experiences severe frame instability, with noticeable changes in chips and numerous artifacts in the right scenario.

‘In contrast, our HunyuanCustom effectively captures both human and non-human subject identities, generates videos that adhere to the given prompts, and maintains high visual quality and stability.’

A further experiment was ‘virtual human advertisement’, wherein the frameworks were tasked to integrate a product with a person:

From the qualitative testing round, examples of neural ‘product placement’. Please see PDF for better detail and resolution.

For this round, the authors state:

‘The [results] demonstrate that HunyuanCustom effectively maintains the identity of the human while preserving the details of the target product, including the text on it.

‘Furthermore, the interaction between the human and the product appears natural, and the video adheres closely to the given prompt, highlighting the substantial potential of HunyuanCustom in generating advertisement videos.’

One area where video results would have been very useful was the qualitative round for audio-driven subject customization, where the character speaks the corresponding audio from a text-described scene and posture.

Partial results given for the audio round, though video results might have been preferable in this case. Only the top half of the PDF figure is reproduced here, as it is large and hard to accommodate in this article. Please refer to source PDF for better detail and resolution.

The authors assert:

‘Previous audio-driven human animation methods input a human image and an audio, where the human posture, attire, and environment remain consistent with the given image and cannot generate videos in other gesture and environment, which may [restrict] their application.

‘…[Our] HunyuanCustom enables audio-driven human customization, where the character speaks the corresponding audio in a text-described scene and posture, allowing for more flexible and controllable audio-driven human animation.’

Further tests (please see PDF for all details) included a round pitting the new system against VACE and Kling 1.6 for video subject replacement:

Testing subject replacement in video-to-video mode. Please refer to source PDF for better detail and resolution.

Of these, the final tests presented in the new paper, the researchers opine:

‘VACE suffers from boundary artifacts due to strict adherence to the input masks, resulting in unnatural subject shapes and disrupted motion continuity. [Kling], in contrast, exhibits a copy-paste effect, where subjects are directly overlaid onto the video, leading to poor integration with the background.

‘In comparison, HunyuanCustom effectively avoids boundary artifacts, achieves seamless integration with the video background, and maintains strong identity preservation – demonstrating its superior performance in video editing tasks.’

Conclusion

This is a fascinating release, not least because it addresses something that the ever-discontent hobbyist scene has been complaining about more lately: the lack of lip-sync, so that the increased realism possible in systems such as Hunyuan Video and Wan 2.1 might be given a new dimension of authenticity.

Though the layout of nearly all the comparative video examples at the project site makes it rather difficult to compare HunyuanCustom’s capabilities against prior contenders, it must be noted that very, very few projects in the video synthesis space have the courage to pit themselves in tests against Kling, the commercial video diffusion API which is always hovering at or near the top of the leader-boards; Tencent appears to have made headway against this incumbent in a rather impressive manner.

* The issue being that some of the videos are so wide, short, and high-resolution that they will not play in standard video players such as VLC or Windows Media Player, showing black screens.

First published Thursday, May 8, 2025