Say a person takes their French Bulldog, Bowser, to the dog park. Identifying Bowser as he plays among the other dogs is easy for the dog owner to do while on-site.

But if someone wants to use a generative AI model like GPT-5 to monitor their pet while they are at work, the model could fail at this basic task. Vision-language models like GPT-5 often excel at recognizing general objects, like a dog, but they perform poorly at locating personalized objects, like Bowser the French Bulldog.

To address this shortcoming, researchers from MIT, the MIT-IBM Watson AI Lab, the Weizmann Institute of Science, and elsewhere have introduced a new training method that teaches vision-language models to localize personalized objects in a scene.

Their method uses carefully prepared video-tracking data in which the same object is tracked across multiple frames. They designed the dataset so the model must focus on contextual clues to identify the personalized object, rather than relying on knowledge it previously memorized.

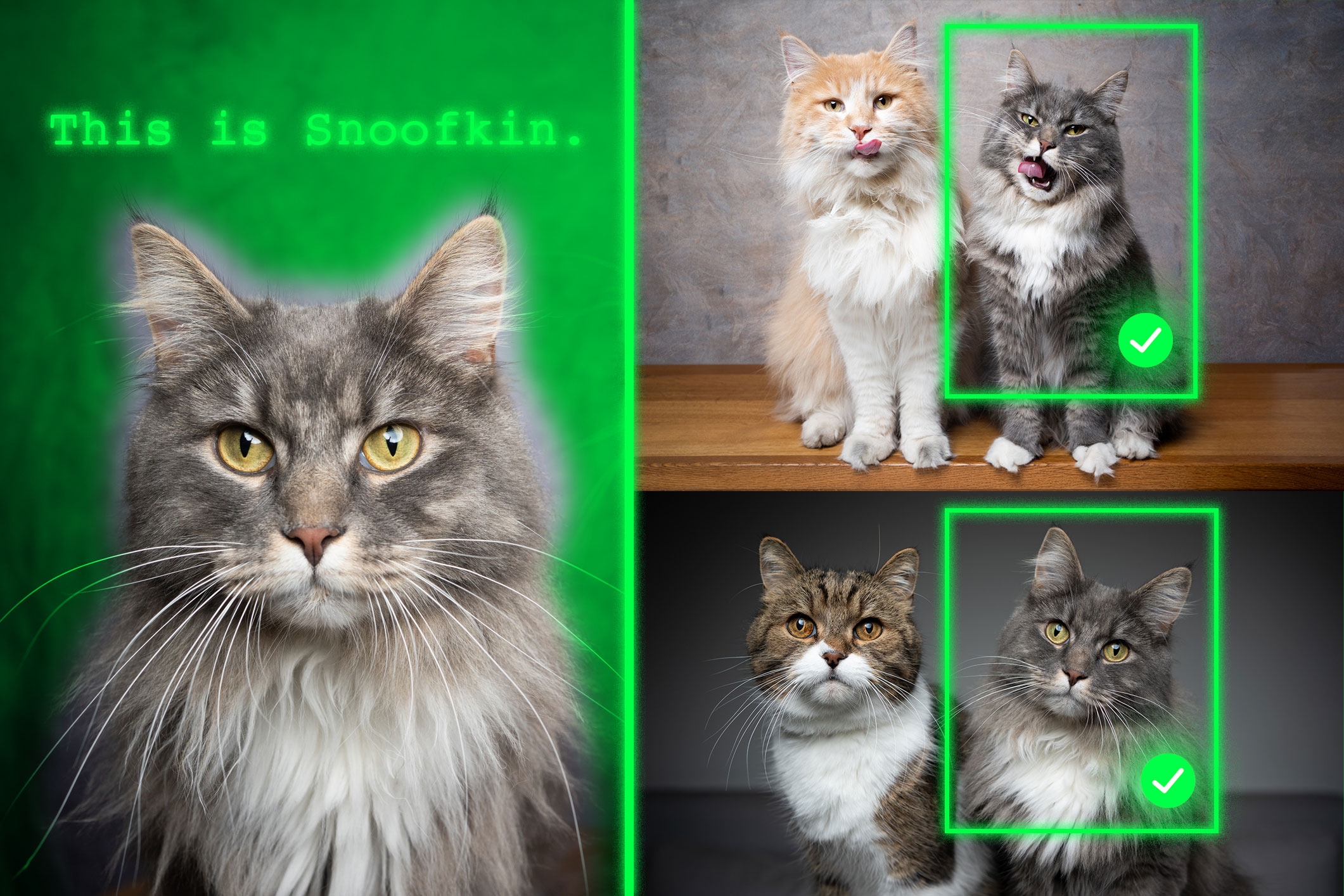

When given a few example images showing a personalized object, like someone’s pet, the retrained model is better able to identify the location of that same pet in a new image.

Models retrained with their method outperformed state-of-the-art systems at this task. Importantly, their technique leaves the rest of the model’s general abilities intact.

This new approach could help future AI systems track specific objects across time, like a child’s backpack, or localize objects of interest, such as a species of animal in ecological monitoring. It could also aid in the development of AI-driven assistive technologies that help visually impaired users find certain items in a room.

“Ultimately, we want these models to be able to learn from context, just like humans do. If a model can do this well, rather than retraining it for each new task, we could just provide a few examples and it would infer how to perform the task from that context. This is a very powerful ability,” says Jehanzeb Mirza, an MIT postdoc and senior author of a paper on this technique.

Mirza is joined on the paper by co-lead authors Sivan Doveh, a postdoc at Stanford University who was a graduate student at the Weizmann Institute of Science when this research was conducted, and Nimrod Shabtay, a researcher at IBM Research; James Glass, a senior research scientist and the head of the Spoken Language Systems Group in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL); and others. The work will be presented at the International Conference on Computer Vision.

An unexpected shortcoming

Researchers have found that large language models (LLMs) can excel at learning from context. If they feed an LLM a few examples of a task, like addition problems, it can learn to answer new addition problems based on the context that has been provided.
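As a rough illustration of the addition example, a few-shot prompt simply places solved examples ahead of a new question, and the model’s weights are never updated. The helper `build_few_shot_prompt` and its “Q:/A:” layout below are hypothetical and are not taken from the study.

```python
def build_few_shot_prompt(examples, query):
    """Concatenate solved (question, answer) pairs and one unsolved query.

    Hypothetical helper: the model is meant to infer the task from the
    examples alone, with no fine-tuning involved.
    """
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]  # solved demonstrations
    blocks.append(f"Q: {query}\nA:")                     # the new problem, answer left blank
    return "\n\n".join(blocks)


demos = [("2 + 3", "5"), ("10 + 7", "17"), ("41 + 1", "42")]
prompt = build_few_shot_prompt(demos, "8 + 6")
print(prompt)
```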

A vision-language model (VLM) is essentially an LLM with a visual component connected to it, so the MIT researchers thought it would inherit the LLM’s in-context learning capabilities. But this is not the case.

“The research community has not been able to find a black-and-white answer to this particular problem yet. The bottleneck could arise from the fact that some visual information is lost in the process of merging the two components together, but we just don’t know,” Mirza says.

The researchers set out to improve VLMs’ ability to do in-context localization, which involves finding a specific object in a new image. They focused on the data used to retrain existing VLMs for a new task, a process called fine-tuning.

Typical fine-tuning data are gathered from random sources and depict collections of everyday objects. One image might contain cars parked on a street, while another includes a bouquet of flowers.

“There is no real coherence in these data, so the model never learns to recognize the same object in multiple images,” he says.

To fix this problem, the researchers developed a new dataset by curating samples from existing video-tracking data. These data are video clips showing the same object moving through a scene, like a tiger walking across a grassland.

They cut frames from these videos and structured the dataset so each input would consist of multiple images showing the same object in different contexts, with example questions and answers about its location.

“By using multiple images of the same object in different contexts, we encourage the model to consistently localize that object of interest by focusing on the context,” Mirza explains.
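A minimal sketch of how one video track might be turned into such a training sample follows. It assumes the frames have already been extracted and that each carries a bounding box for the tracked object; the `Frame` type, the `build_episode` helper, the box format, and the question wording are all hypothetical and are not the researchers’ actual data pipeline.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    image: str                      # path to an extracted frame (hypothetical layout)
    box: tuple[int, int, int, int]  # assumed (x_min, y_min, x_max, y_max) of the tracked object


def build_episode(frames, name, num_context=3):
    """Turn one object track into a hypothetical in-context localization sample.

    The first `num_context` frames become solved examples (question plus box);
    the next frame is the query, and its box is the training target.
    """
    if len(frames) < num_context + 1:
        raise ValueError("track is too short for the requested number of context frames")
    question = f"Where is {name} in this image?"  # assumed prompt wording
    context = [
        {"image": f.image, "question": question, "answer": f.box}
        for f in frames[:num_context]
    ]
    query = frames[num_context]
    return {
        "context": context,
        "query": {"image": query.image, "question": question},
        "target": query.box,
    }


# Usage: a toy four-frame track of an object drifting to the right.
track = [Frame(f"tiger_{i:03d}.jpg", (10 * i, 20, 10 * i + 50, 80)) for i in range(4)]
episode = build_episode(track, "the tiger")
```

Because every context frame and the query come from the same track, the only reliable way to answer is to match the object across images, which is the coherence that randomly gathered fine-tuning data lacks.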

Forcing the focus

But the researchers found that VLMs tend to cheat. Instead of answering based on context clues, they will identify the object using knowledge gained during pretraining.

For instance, since the model already learned that an image of a tiger and the label “tiger” are correlated, it could identify the tiger crossing the grassland based on this pretrained knowledge, instead of inferring from context.

To solve this problem, the researchers used pseudo-names rather than actual object category names in the dataset. In this case, they changed the name of the tiger to “Charlie.”

“It took us a while to figure out how to prevent the model from cheating. But we changed the game for the model. The model does not know that ‘Charlie’ can be a tiger, so it is forced to look at the context,” he says.
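The pseudo-name trick can be sketched as a text substitution applied to every question and answer in a sample. This is a hypothetical illustration: the name pool, the `anonymize` helper, and the regular expression (which also drops a leading “the,” “a,” or “an” so the result reads naturally) are assumptions, since the article only says category names were replaced with names like “Charlie.”

```python
import random
import re

PSEUDO_NAMES = ["Charlie", "Milo", "Luna", "Pip"]  # hypothetical pool of neutral names


def anonymize(texts, category, rng):
    """Replace each mention of `category` (plus an optional article) with one pseudo-name.

    The same pseudo-name is reused across all texts of a sample so the examples
    still refer to one object, but the name no longer reveals its category.
    """
    pseudo = rng.choice(PSEUDO_NAMES)
    pattern = re.compile(
        rf"\b(?:the\s+|a\s+|an\s+)?{re.escape(category)}\b", flags=re.IGNORECASE
    )
    return [pattern.sub(pseudo, t) for t in texts], pseudo


rng = random.Random(0)
texts = ["Where is the tiger in this image?", "The tiger is in the lower left."]
new_texts, name = anonymize(texts, "tiger", rng)
print(new_texts)
```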

The researchers also faced challenges in finding the best way to prepare the data. If the frames are too close together, the background does not change enough to provide data diversity.
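The article does not specify how far apart the chosen frames were. One simple, hypothetical way to express the constraint is to spread picks evenly across a clip and reject clips too short to keep them at least `min_gap` frames apart; `sample_spaced_frames` and the even-spacing rule are assumptions for illustration only.

```python
def sample_spaced_frames(num_frames, k, min_gap):
    """Pick k evenly spread frame indices that are at least `min_gap` frames apart.

    Returns None when the clip is too short to honour the spacing constraint.
    The even-spacing rule is an illustrative assumption.
    """
    if k < 1 or min_gap < 1:
        raise ValueError("k and min_gap must both be at least 1")
    if num_frames < 1:
        return None
    if k == 1:
        return [0]
    step = (num_frames - 1) // (k - 1)  # widest even spacing that fits in the clip
    if step < min_gap:
        return None  # frames would sit too close together for the background to vary
    return [i * step for i in range(k)]


print(sample_spaced_frames(100, 4, 30))  # [0, 33, 66, 99]
print(sample_spaced_frames(50, 4, 30))   # None (a step of 16 is below min_gap)
```

Raising `min_gap` buys more background variety per sample, presumably at the cost of discarding shorter clips.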

In the end, fine-tuning VLMs with this new dataset improved accuracy at personalized localization by about 12 percent on average. When they included the dataset with pseudo-names, the performance gains reached 21 percent.

As model size increases, their technique leads to greater performance gains.

In the future, the researchers want to study possible reasons VLMs don’t inherit in-context learning capabilities from their base LLMs. In addition, they plan to explore additional mechanisms to improve the performance of a VLM without the need to retrain it with new data.

“This work reframes few-shot personalized object localization (adapting on the fly to the same object across new scenes) as an instruction-tuning problem and uses video-tracking sequences to teach VLMs to localize based on visual context rather than class priors. It also introduces the first benchmark for this setting, with solid gains across open and proprietary VLMs. Given the immense significance of quick, instance-specific grounding, often without fine-tuning, for users of real-world workflows (such as robotics, augmented reality assistants, creative tools, etc.), the practical, data-centric recipe offered by this work can help enhance the widespread adoption of vision-language foundation models,” says Saurav Jha, a postdoc at the Mila-Quebec Artificial Intelligence Institute, who was not involved with this work.

Additional co-authors are Wei Lin, a research associate at Johannes Kepler University; Eli Schwartz, a research scientist at IBM Research; Hilde Kuehne, professor of computer science at the Tuebingen AI Center and an affiliated professor at the MIT-IBM Watson AI Lab; Raja Giryes, an associate professor at Tel Aviv University; Rogerio Feris, a principal scientist and manager at the MIT-IBM Watson AI Lab; Leonid Karlinsky, a principal research scientist at IBM Research; Assaf Arbelle, a senior research scientist at IBM Research; and Shimon Ullman, the Samy and Ruth Cohn Professor of Computer Science at the Weizmann Institute of Science.

This research was funded, in part, by the MIT-IBM Watson AI Lab.