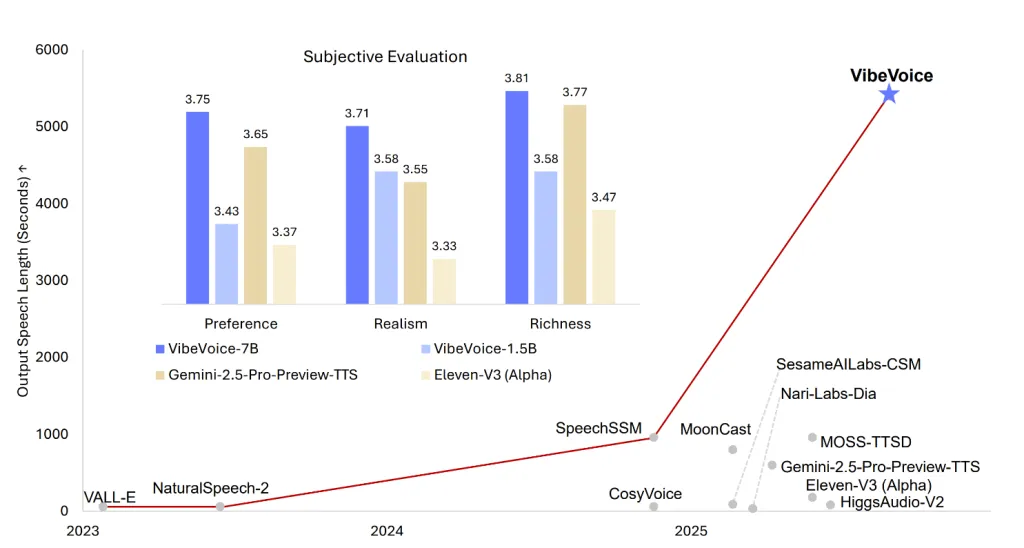

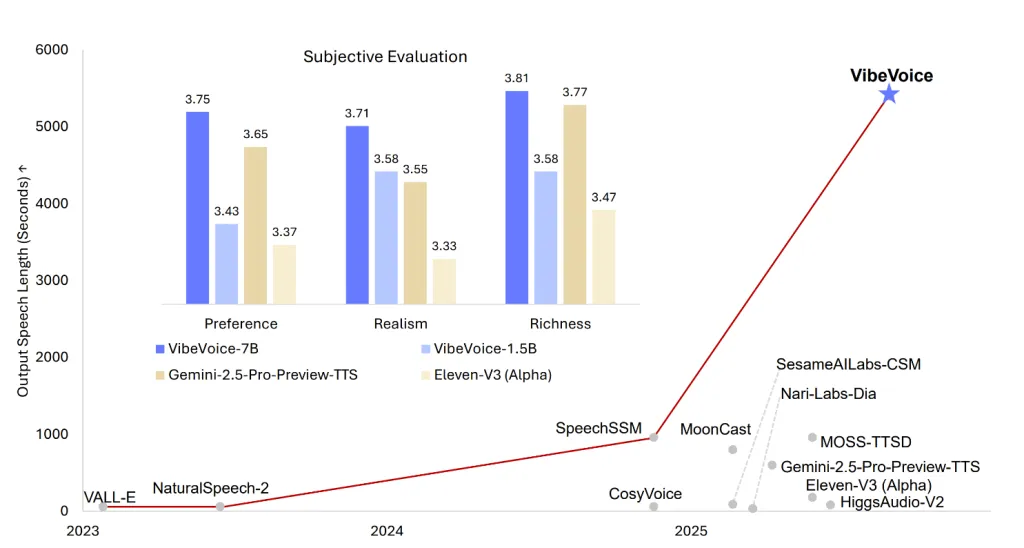

Microsoft’s newest open supply launch, VibeVoice-1.5B, redefines the boundaries of text-to-speech (TTS) know-how—delivering expressive, long-form, multi-speaker generated audio that’s MIT licensed, scalable, and extremely versatile for analysis use. This mannequin isn’t simply one other TTS engine; it’s a framework designed to generate as much as 90 minutes of uninterrupted, natural-sounding audio, help simultaneous era of as much as 4 distinct audio system, and even deal with cross-lingual and singing synthesis eventualities. With a streaming structure and a bigger 7B mannequin introduced for the close to future, VibeVoice-1.5B positions itself as a significant advance for AI-powered conversational audio, podcasting, and artificial voice analysis.

Key Options

- Huge Context and Multi-Speaker Help: VibeVoice-1.5B can synthesize as much as 90 minutes of speech with as much as 4 distinct audio system in a single session—far surpassing the standard 1-2 speaker restrict of conventional TTS fashions.

- Simultaneous Era: The mannequin isn’t simply stitching collectively single-voice clips; it’s designed to help parallel audio streams for a number of audio system, mimicking pure dialog and turn-taking.

- Cross-Lingual and Singing Synthesis: Whereas primarily educated on English and Chinese language, the mannequin is able to cross-lingual synthesis and may even generate singing—options not often demonstrated in earlier open supply TTS fashions.

- MIT License: Absolutely open supply and commercially pleasant, with a give attention to analysis, transparency, and reproducibility.

- Scalable for Streaming and Lengthy-Kind Audio: The structure is designed for environment friendly long-duration synthesis and anticipates a forthcoming 7B streaming-capable mannequin, additional increasing prospects for real-time and high-fidelity TTS.

- Emotion and Expressiveness: The mannequin is touted for its emotion management and pure expressiveness, making it appropriate for functions like podcasts or conversational eventualities.

Structure and Technical Deep Dive

VibeVoice’s basis is a 1.5B-parameter LLM (Qwen2.5-1.5B) that integrates with two novel tokenizers—Acoustic and Semantic—each designed to function at a low body charge (7.5Hz) for computational effectivity and consistency throughout lengthy sequences.

- Acoustic Tokenizer: A σ-VAE variant with a mirrored encoder-decoder construction (every ~340M parameters), reaching 3200x downsampling from uncooked audio at 24kHz.

- Semantic Tokenizer: Educated through an ASR proxy process, this encoder-only structure mirrors the acoustic tokenizer’s design (minus the VAE parts).

- Diffusion Decoder Head: A light-weight (~123M parameter) conditional diffusion module predicts acoustic options, leveraging Classifier-Free Steerage (CFG) and DPM-Solver for perceptual high quality.

- Context Size Curriculum: Coaching begins at 4k tokens and scales as much as 65k tokens—enabling the mannequin to generate very lengthy, coherent audio segments.

- Sequence Modeling: The LLM understands dialogue circulation for turn-taking, whereas the diffusion head generates fine-grained acoustic particulars—separating semantics and synthesis whereas preserving speaker identification over lengthy durations.

Mannequin Limitations and Accountable Use

- English and Chinese language Solely: The mannequin is educated solely on these languages; different languages could produce unintelligible or offensive outputs.

- No Overlapping Speech: Whereas it helps turn-taking, VibeVoice-1.5B does not mannequin overlapping speech between audio system.

- Speech-Solely: The mannequin doesn’t generate background sounds, Foley, or music—audio output is strictly speech.

- Authorized and Moral Dangers: Microsoft explicitly prohibits use for voice impersonation, disinformation, or authentication bypass. Customers should adjust to legal guidelines and disclose AI-generated content material.

- Not for Skilled Actual-Time Functions: Whereas environment friendly, this launch is not optimized for low-latency, interactive, or live-streaming eventualities; that’s the goal for the soon-to-come 7B variant.

Conclusion

Microsoft’s VibeVoice-1.5B is a breakthrough in open TTS: scalable, expressive, and multi-speaker, with a light-weight diffusion-based structure that unlocks long-form, conversational audio synthesis for researchers and open supply builders. Whereas use is at present research-focused and restricted to English/Chinese language, the mannequin’s capabilities—and the promise of upcoming variations—sign a paradigm shift in how AI can generate and work together with artificial speech.

For technical groups, content material creators, and AI lovers, VibeVoice-1.5B is a must-explore device for the following era of artificial voice functions—accessible now on Hugging Face and GitHub, with clear documentation and an open license. As the sphere pivots towards extra expressive, interactive, and ethically clear TTS, Microsoft’s newest providing is a landmark for open supply AI speech synthesis.

FAQs

What makes VibeVoice-1.5B totally different from different text-to-speech fashions?

VibeVoice-1.5B can generate as much as 90 minutes of expressive, multi-speaker audio (as much as 4 audio system), helps cross-lingual and singing synthesis, and is totally open supply below the MIT license—pushing the boundaries of long-form conversational AI audio era

What {hardware} is really useful for operating the mannequin domestically?

Neighborhood assessments present that producing a multi-speaker dialog with the 1.5 B checkpoint consumes ≈ 7 GB of GPU VRAM, so an 8 GB client card (e.g., RTX 3060) is usually ample for inference.

Which languages and audio types does the mannequin help as we speak?

VibeVoice-1.5B is educated solely on English and Chinese language and may carry out cross-lingual narration (e.g., English immediate → Chinese language speech) in addition to fundamental singing synthesis. It produces speech solely—no background sounds—and doesn’t mannequin overlapping audio system; turn-taking is sequential.

Try the Technical Report, Mannequin on Hugging Face and Codes. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be at liberty to observe us on Twitter and don’t neglect to affix our 100k+ ML SubReddit and Subscribe to our E-newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its recognition amongst audiences.