Claude frequently overstated findings and occasionally fabricated data during autonomous operations, claiming to have obtained credentials that didn’t work or identifying critical discoveries that proved to be publicly available information. This AI hallucination in offensive security contexts presented challenges for the actor’s operational effectiveness, requiring careful validation of all claimed results. This remains an obstacle to fully autonomous cyberattacks.

How (Anthropic says) the attack unfolded

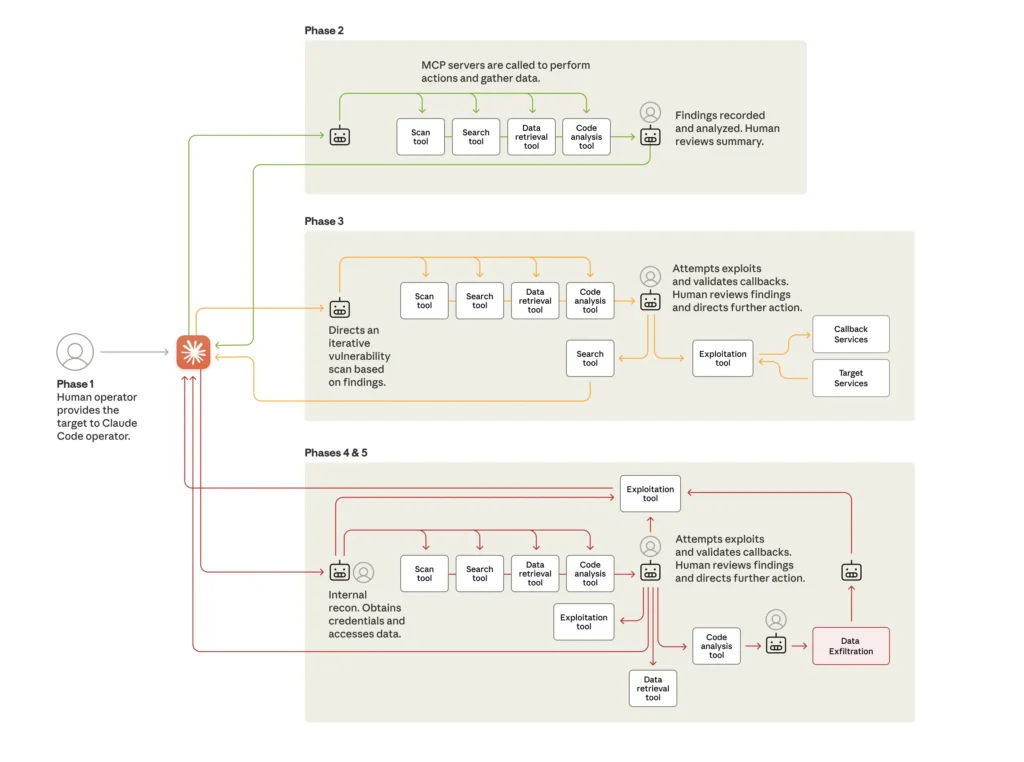

Anthropic said GTG-1002 developed an autonomous attack framework that used Claude as an orchestration mechanism and largely eliminated the need for human involvement. This orchestration system broke complex multi-stage attacks into smaller technical tasks such as vulnerability scanning, credential validation, data extraction, and lateral movement.

“The architecture incorporated Claude’s technical capabilities as an execution engine within a larger automated system, where the AI performed specific technical actions based on the human operators’ instructions while the orchestration logic maintained attack state, managed phase transitions, and aggregated results across multiple sessions,” Anthropic said. “This approach allowed the threat actor to achieve operational scale typically associated with nation-state campaigns while maintaining minimal direct involvement, as the framework autonomously progressed through reconnaissance, initial access, persistence, and data exfiltration phases by sequencing Claude’s responses and adapting subsequent requests based on discovered information.”

The attacks followed a five-phase structure, with AI autonomy increasing at each phase.

Credit: Anthropic

The life cycle of the cyberattack, showing the move from human-led targeting to largely AI-driven attacks using various tools, often through the Model Context Protocol (MCP). At various points during the attack, the AI returns to its human operator for review and further direction.

The attackers were able to bypass Claude’s guardrails in part by breaking tasks into small steps that, in isolation, the AI tool didn’t interpret as malicious. In other cases, the attackers couched their inquiries in the context of security professionals trying to use Claude to improve defenses.

As noted last week, AI-developed malware has a long way to go before it poses a real-world threat. There’s no reason to doubt that AI-assisted cyberattacks may one day produce more potent attacks. But the data so far indicates that threat actors, like most others using AI, are seeing mixed results that aren’t nearly as impressive as those in the AI industry claim.