ChatGPT’s means to disregard copyright and customary sense whereas creating photos and deepfakes is the speak of the city proper now. The picture generator mannequin that OpenAI launched final week is so extensively used that it’s ruining ChatGPT’s fundamental performance and uptime for everybody.

Nevertheless it’s not simply developments in AI-generated photos that we’ve witnessed just lately. The Runway Gen-4 video mannequin enables you to create unimaginable clips from a single textual content immediate and a photograph, sustaining character and scene continuity, not like something we have now seen earlier than.

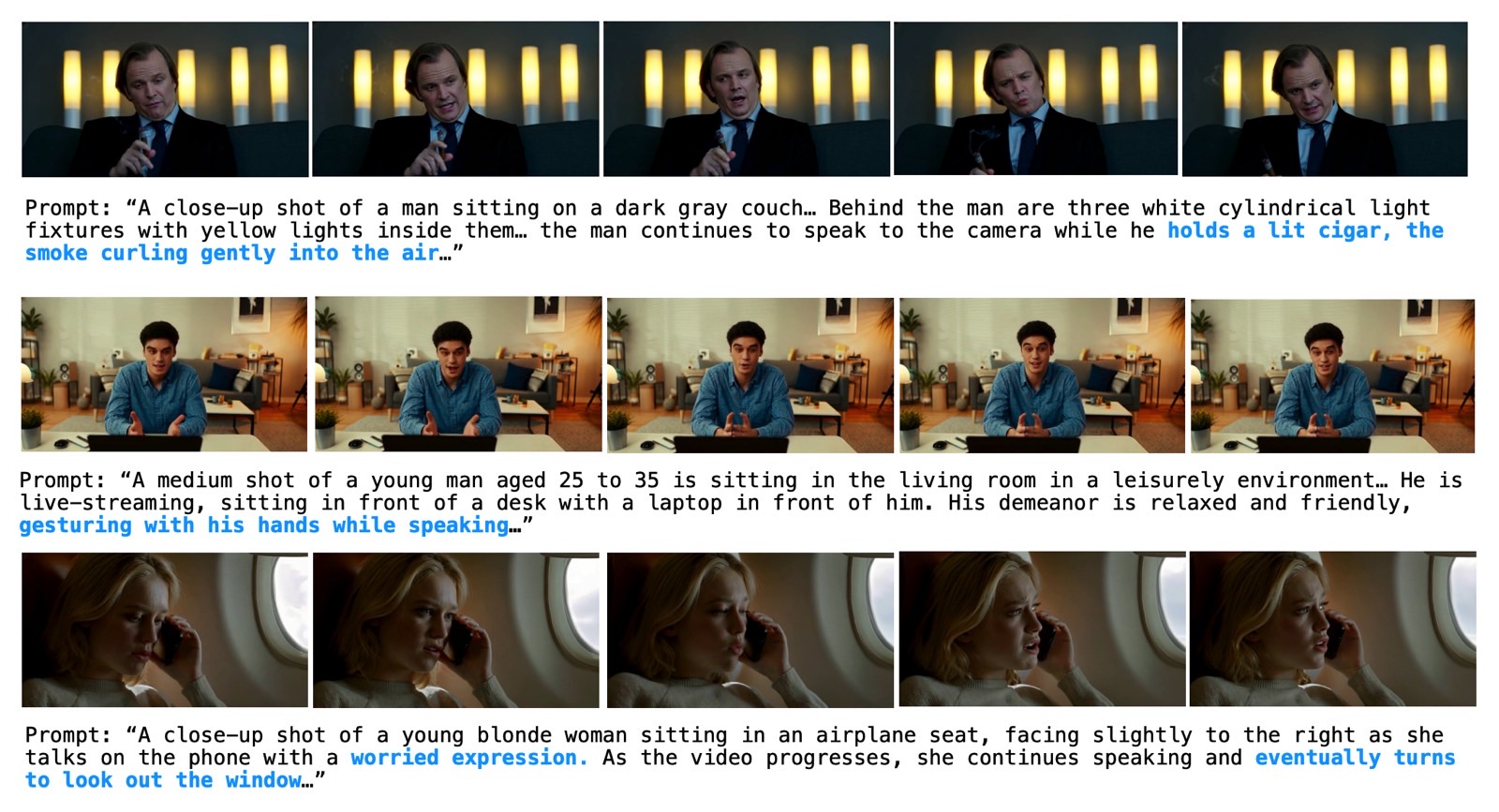

The movies the corporate supplied ought to put Hollywood on discover. Anybody could make movie-grade clips with instruments like Ruway’s, assuming they work as meant. On the very least, AI might help scale back the price of particular results for sure films.

It’s not simply Runway’s new AI video instrument that’s turning heads. Meta has a MoCha AI product of its personal that can be utilized to create speaking AI characters in movies that is likely to be adequate to idiot you.

MoCha isn’t a sort of espresso spelled mistaken. It’s brief for Film Character Animator, a analysis venture from Meta and the College of Waterloo. The essential concept of the MoCha AI mannequin is fairly easy. You present the AI with a textual content immediate that describes the video and a speech pattern. The AI then places collectively a video that ensures the characters “communicate” the traces within the audio pattern nearly completely.

The researchers supplied loads of samples that present MoCha’s superior capabilities, and the outcomes are spectacular. Now we have all types of clips displaying live-action and animated protagonists talking the traces from the audio pattern. Mocha takes under consideration feelings, and the AI also can assist a number of characters in the identical scene.

The outcomes are nearly excellent, however not fairly. There are some seen imperfections within the clips. The attention and face actions are giveaways that we’re AI-generated video. Additionally, whereas the lip motion seems to be completely synchronized to the audio pattern, the motion of your complete mouth is exaggerated in comparison with actual folks.

I say that as somebody who has seen loads of related AI modes from different firms by now, together with some extremely convincing ones.

First, there’s the Runway Gen-4 that we talked about a number of days in the past. The Gen-4 demo clips are higher than MoCha. However that’s a product you should utilize, MoCha can definitely be improved by the point it turns into a business AI mannequin.

Talking of AI fashions you may’t use, I at all times evaluate new merchandise that may sync AI-generated characters to audio samples to Microsoft’s VASA-1 AI analysis venture, which we noticed final April.

VASA-1 enables you to flip static pictures of actual folks into movies of talking characters so long as you present an audio pattern of any variety. Understandably, Microsoft by no means made the VASA-1 mannequin accessible to shoppers, as such tech opens the door to abuse.

Lastly, there’s TikTok’s mother or father firm, ByteDance, which confirmed a VASA-1-like AI a few months in the past that does the identical factor. It turns a single photograph into a totally animated video.

OmniHuman-1 additionally animates physique half actions, one thing I noticed in Meta’s MoCha demo as properly. That’s how we bought to see Taylor Swift sing the Naruto theme tune in Japanese. Sure, it’s a deepfake clip; I’m attending to that.

Merchandise like VASA-1, OmniHuman-1, MoCha, and doubtless Runway Gen-4 is likely to be used to create deepfakes that may mislead.

Meta researchers engaged on MoCha and related tasks ought to handle this publicly if and when the mannequin turns into accessible commercially.

You may spot inconsistencies within the MoCha samples accessible on-line, however watch these movies on a smartphone show, and they may not be so evident. Take away your familiarity with AI video era; you may assume a few of these MoCha clips had been shot with actual cameras.

Additionally vital can be the disclosure of the info Meta used to coach this AI. The paper stated MoCha employed some 500,000 samples, amounting to 300 hours of high-quality speech video samples, with out saying the place they bought that knowledge. Sadly, that’s a theme within the trade, not acknowledging the supply of the info used to coach the AI, and it’s nonetheless a regarding one.

You’ll discover the complete MoCha analysis paper at this hyperlink.