Traditional search tracking is built on a simple promise: type a query, get a result, and track your ranking. AI doesn’t work that way.

Assistants like ChatGPT, Gemini, and Perplexity don’t show fixed results; they generate answers that vary with every run, every model, and every user.

“AI rank tracking” is a misnomer: you can’t track AI the way you track traditional search.

But that doesn’t mean you shouldn’t track it at all.

You just need to adjust the questions you’re asking, and the way you measure your brand’s visibility.

In SEO rank tracking, you can rely on stable, repeatable rules:

- Deterministic results: The same query generally returns similar SERPs for everyone.

- Fixed positions: You can measure exact ranks (#1, #5, #20).

- Known volumes: You know how popular each keyword is, so you know what to prioritize.

AI breaks all three.

- Probabilistic answers: The same prompt can return different brands, citations, or response formats each time.

- No fixed positions: Mentions appear in passing, in varying order, not as numbered ranks.

- Hidden demand: Prompt volume data is locked away. We don’t know what people actually ask at scale.

And it gets messier:

- Models don’t agree. Even different versions of the same assistant generate different responses to an identical prompt.

- Personalization skews results. Many AIs tailor their outputs to factors like location, context, and memory of previous conversations.

This is why you can’t treat AI prompts like keywords.

That doesn’t mean AI can’t be tracked, but tracking individual prompts isn’t enough.

Instead of asking “Did my brand appear for this exact query?”, the better question to ask is: “Across thousands of prompts, how often does AI connect my brand with this topic or category?”

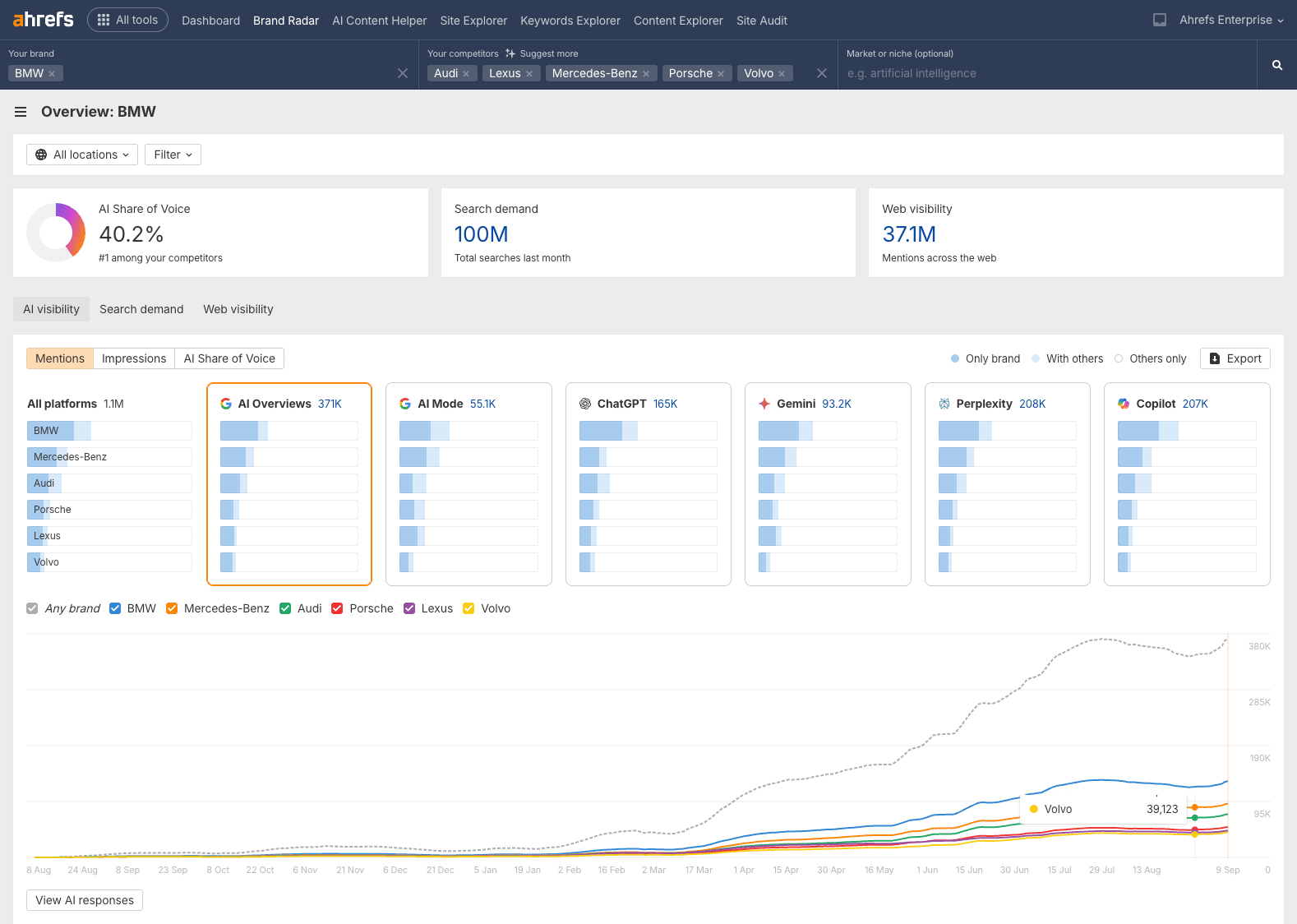

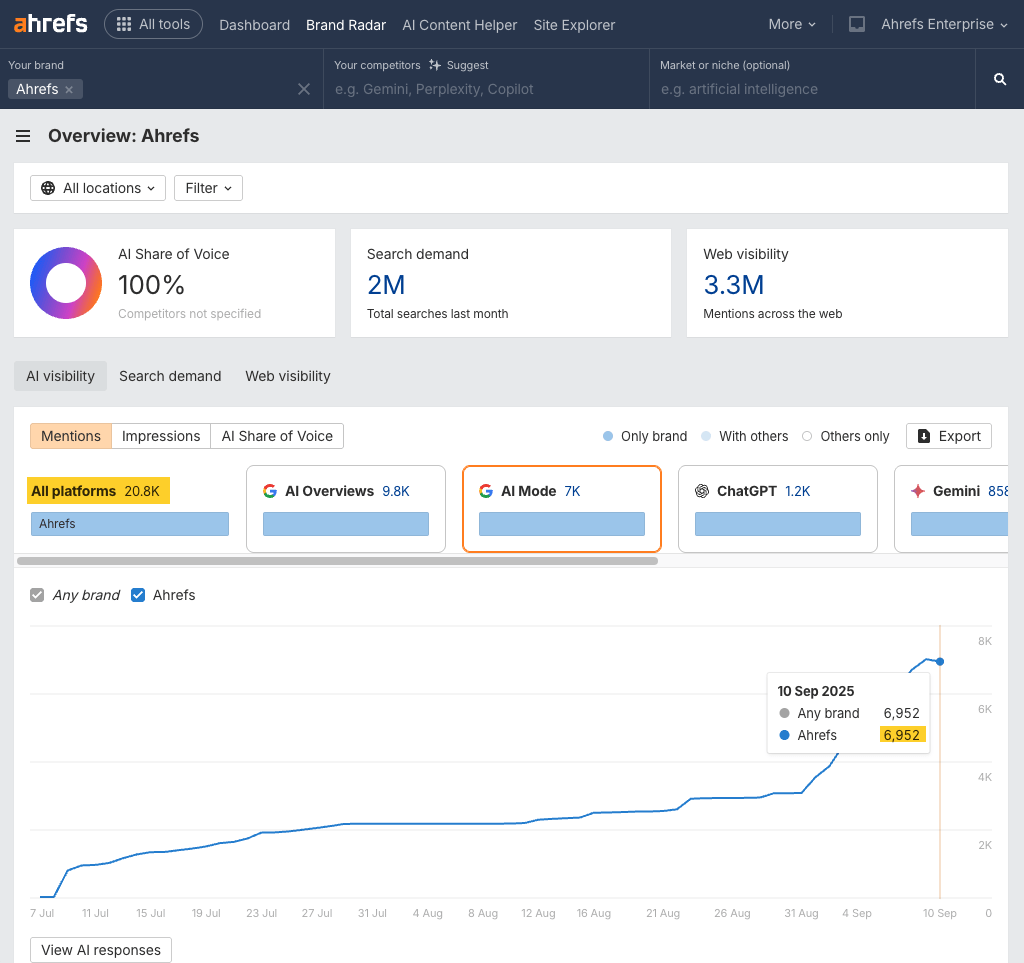

That’s the philosophy behind Ahrefs Brand Radar: our database of millions of AI prompts and responses that helps you track directionally.

A major stumbling block when it comes to AI search tracking is that none of us know what people are actually searching en masse.

Unlike search engines, which publish keyword volumes, AI companies keep prompt logs private; that data never leaves their servers.

That makes prioritization difficult, and means it’s hard to know where to start when it comes to optimizing for AI visibility.

To move past this, we seed Brand Radar’s database with real search data: questions from our keyword database and People Also Ask queries, paired with search volume.

These are still “synthetic” prompts, but they reflect real-world demand.

Our goal isn’t to tell you whether you appear for a single AI query; it’s to show you how visible your brand is across entire topics.

If you can see that you have great visibility for a topic, you don’t need to track hundreds of specific prompts within that topic, because you already understand the underlying likelihood that you’ll be mentioned.

By focusing on aggregated visibility, you can move past noisy outputs:

- See if AI consistently ties you to a category, not just whether you appeared once.

- Track trends over time, not just snapshots.

- Learn how your brand is positioned against competitors, not just whether it’s mentioned.

Think of AI tracking less like rank tracking and more like polling.

You don’t care about one answer; you care about the direction of the trend across a statistically significant amount of data.

You can’t track your AI visibility the way you track your search visibility. But, even with flaws, AI tracking has clear value.

Individual brand mentions in AI fluctuate a lot, but aggregating that data gives you a more stable view.

For example, if you run the same prompt three times, you’ll likely see three different answers.

In one your brand is mentioned, in another it’s missing, and in a third a competitor gets the spotlight.

But aggregate thousands of prompts, and the variability evens out.

Suddenly it’s clear: your brand appears in ~60% of AI answers.
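To see why aggregation steadies the number, here is a minimal simulation. It assumes, purely for illustration, that each AI answer independently mentions the brand with a fixed 60% probability; real assistants are messier than that, and the brand names and function names are hypothetical.

```python
import random

def mention_rate(responses: list[str], brand: str) -> float:
    """Share of responses that mention the brand (case-insensitive substring match)."""
    if not responses:
        return 0.0
    hits = sum(brand.lower() in r.lower() for r in responses)
    return hits / len(responses)

def simulate_responses(n: int, p_mention: float, seed: int = 42) -> list[str]:
    """Hypothetical stand-in for n AI answers: each mentions 'Acme' with probability p_mention."""
    rng = random.Random(seed)
    return ["Try Acme or Globex." if rng.random() < p_mention else "Try Globex." for _ in range(n)]

# Three runs: each answer is effectively a coin flip, so the rate can land almost anywhere.
print(mention_rate(simulate_responses(3, 0.6), "Acme"))

# Ten thousand runs: the rate settles close to the underlying 60%.
print(round(mention_rate(simulate_responses(10_000, 0.6), "Acme"), 2))
```

With three answers the only possible rates are 0%, 33%, 67%, or 100%, which is why a single small batch says little about the underlying likelihood.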

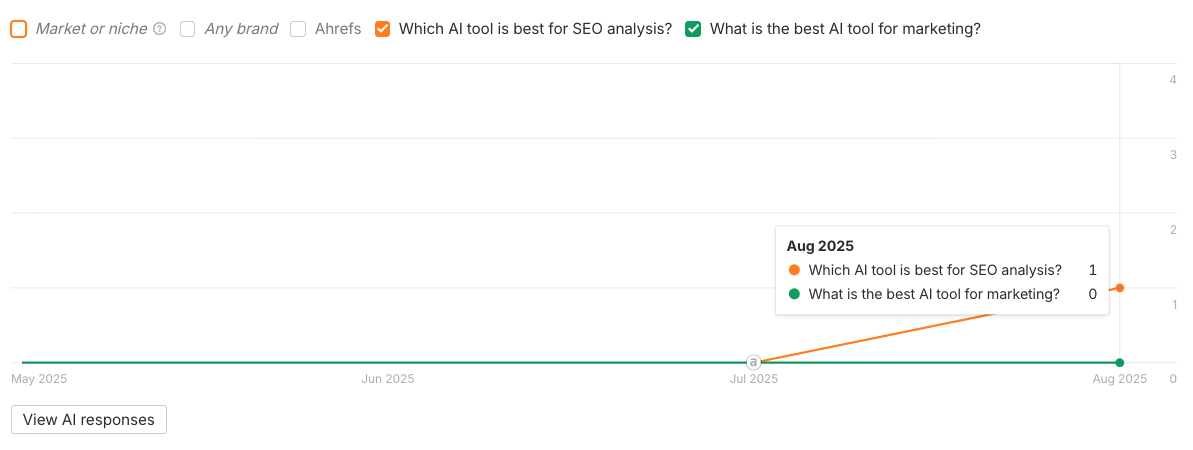

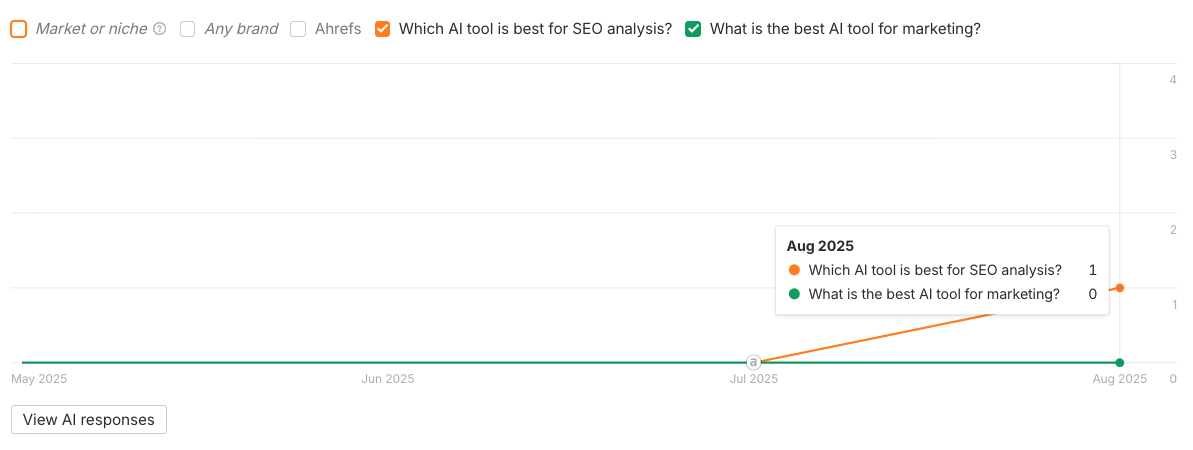


Aggregation smooths out the randomness: outlier answers get averaged into the bigger trend, and you get a better idea of how much of the market you actually own.

These are the same principles used in surveys: individual answers vary, but aggregate trends are reliable enough to act on.

They show you consistent signals you’d miss if you only focused on a handful of prompts.

The problem is, most AI tracking tools cap you at 50–100 queries, mainly because running prompts at scale gets expensive.

That’s not enough data to tell you anything meaningful.

With such a small sample, you can’t get a clear sense of your brand’s actual AI visibility.
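The sample-size problem can be made concrete with the standard margin of error for a proportion. This is a rough sketch that treats each prompt as an independent yes/no observation, which AI responses only approximate; the 1.96 multiplier corresponds to a 95% confidence level under the normal approximation.

```python
import math

def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed mention rate p over n prompts.

    Uses the normal approximation and assumes prompts are independent samples.
    """
    return z * math.sqrt(p * (1 - p) / n)

for n in (50, 100, 1_000, 10_000):
    moe = margin_of_error(0.5, n)
    print(f"n={n:>6}: 50% ± {moe * 100:.1f} percentage points")
```

At 100 prompts, an observed 50% mention rate is only pinned down to roughly ±10 percentage points; at 10,000 prompts the band narrows to about ±1 point.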

That’s why we’ve built our AI database of ~100M prompts: to support the kind of aggregate analysis that makes sense for AI search tracking.

Studying how your brand shows up across thousands of AI prompts can help you see patterns in demand, and test how your efforts on one channel impact visibility on the others.

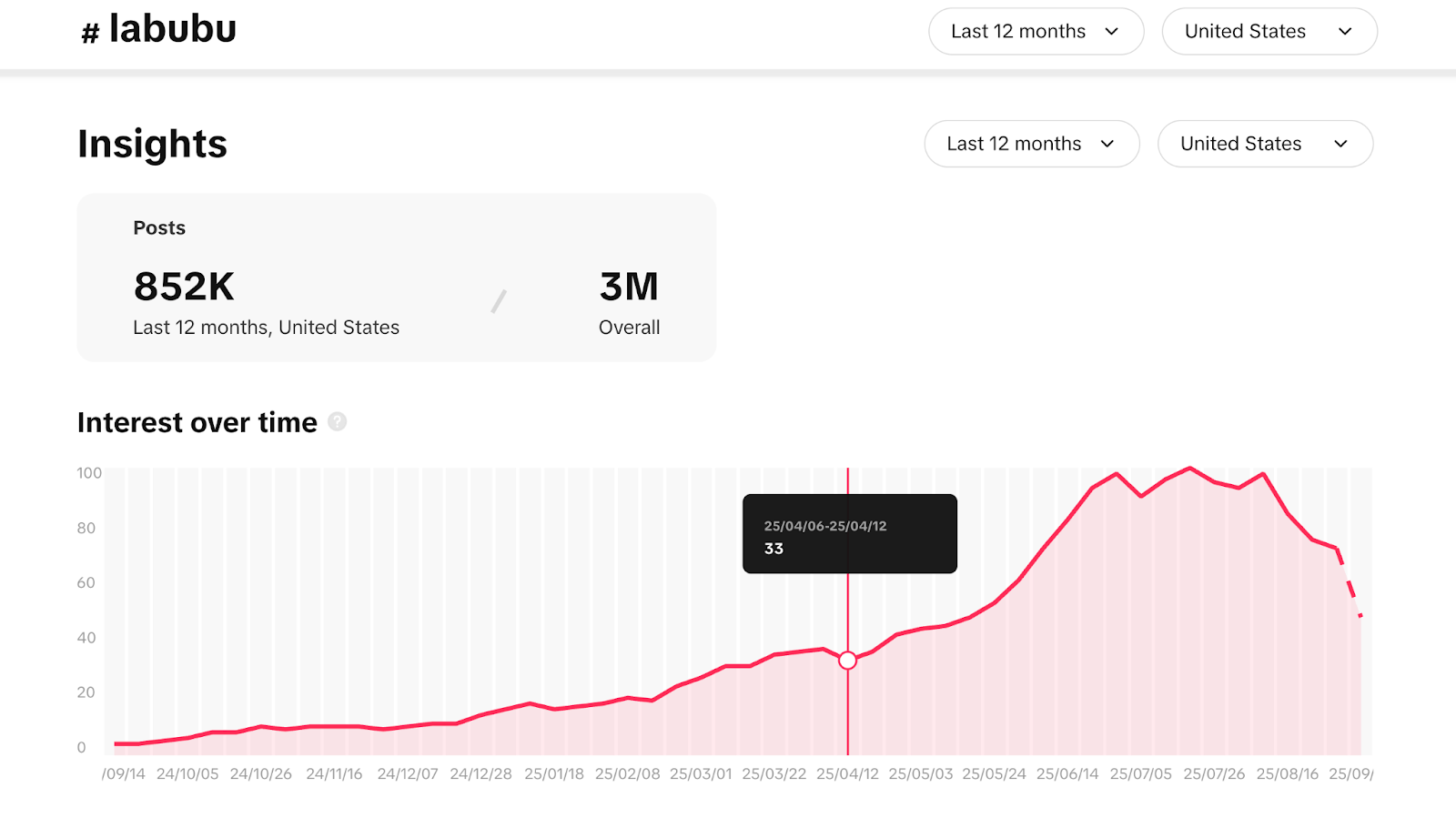

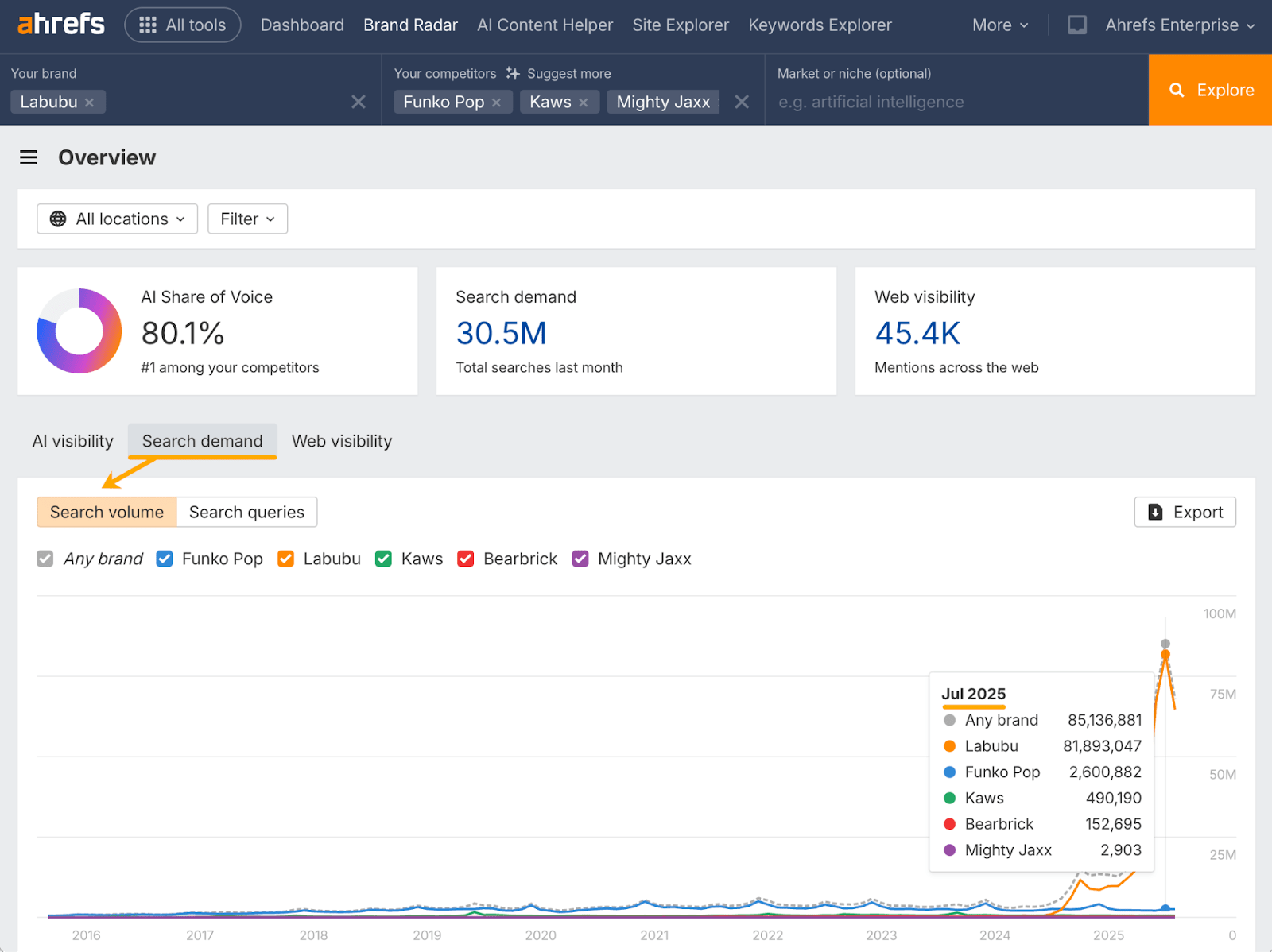

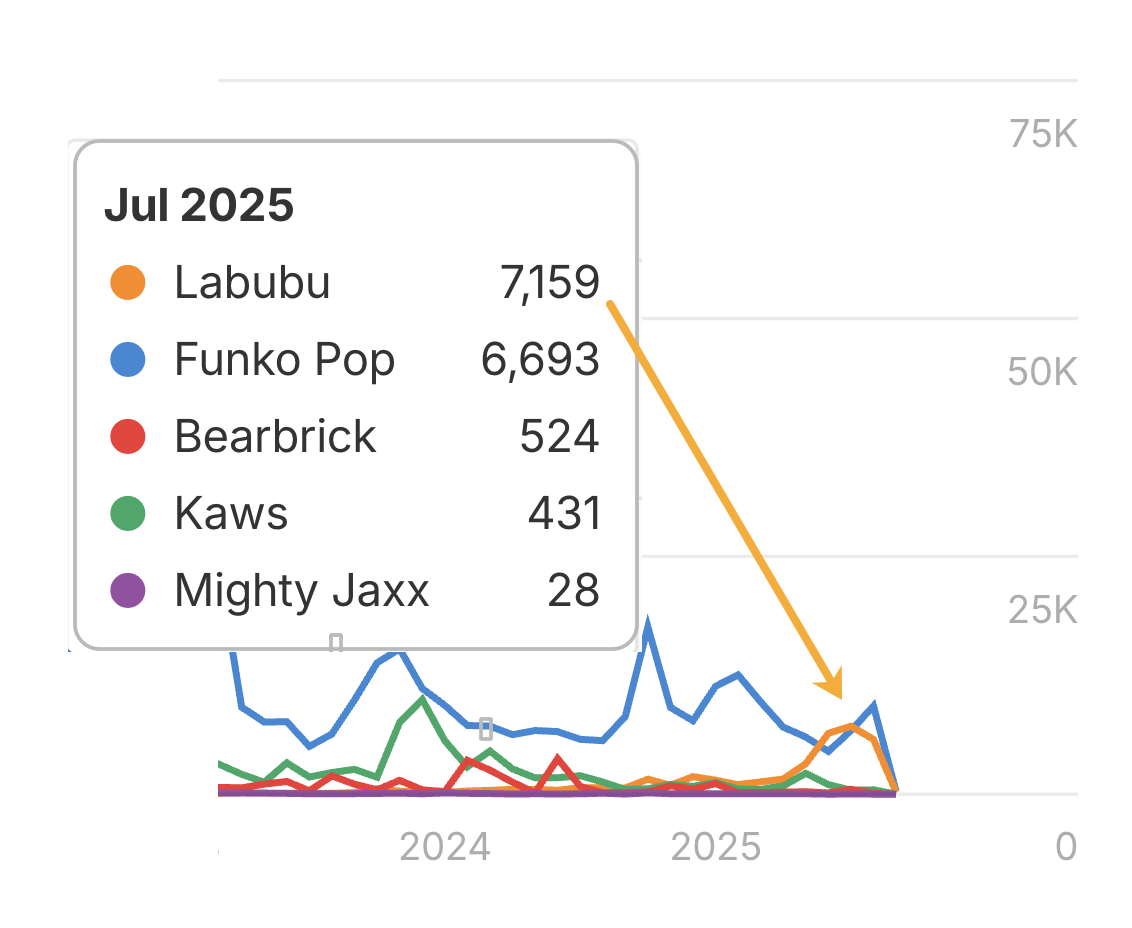

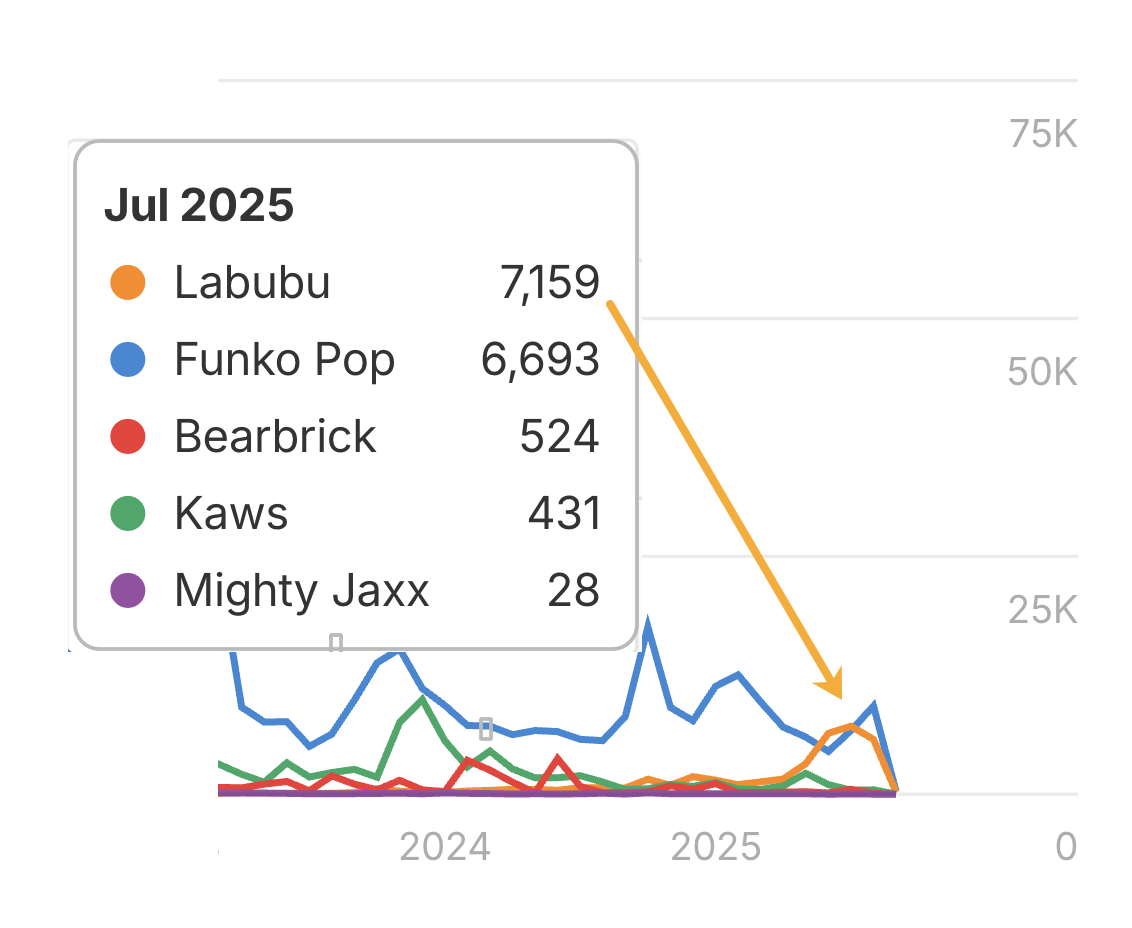

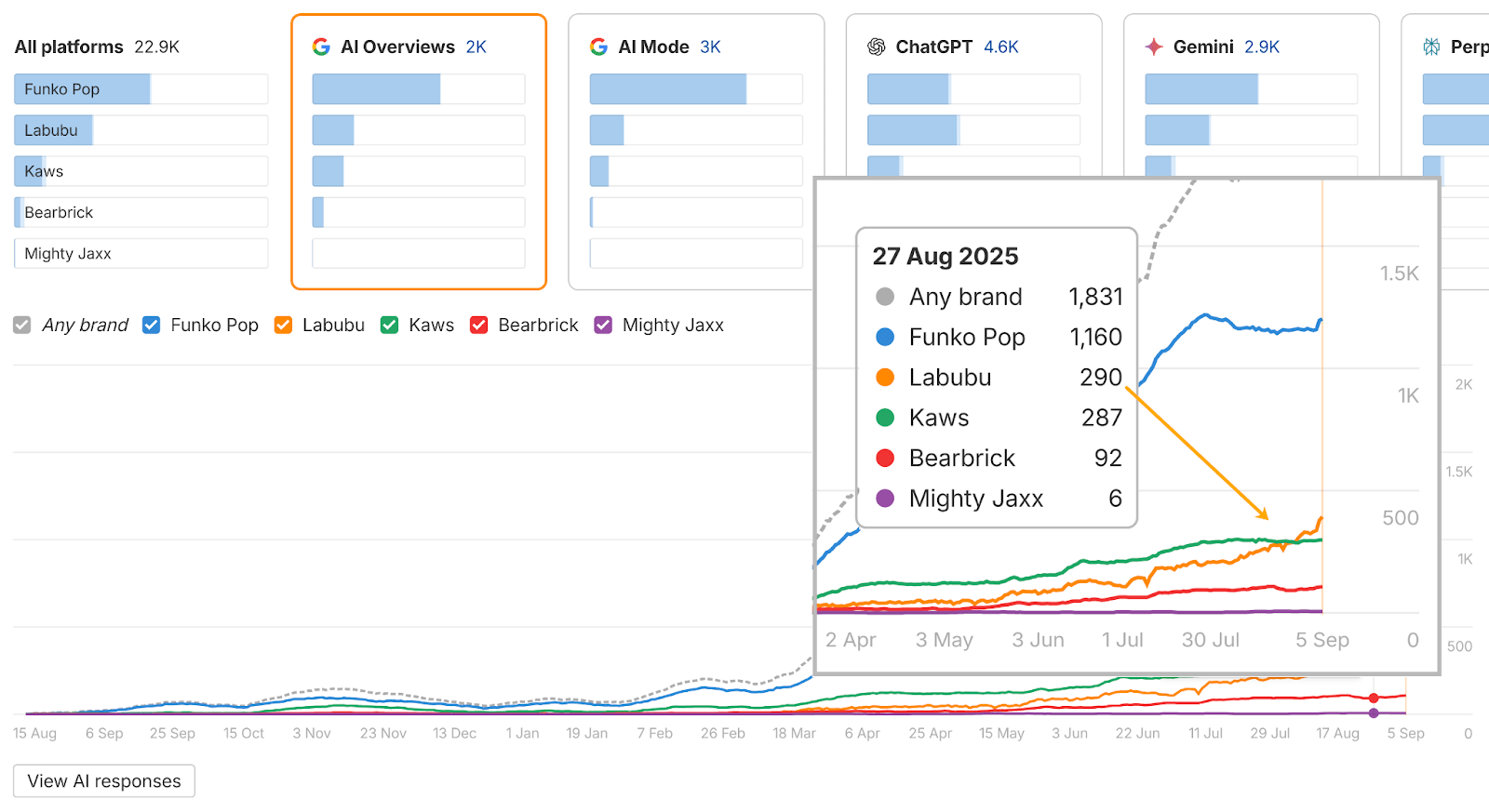

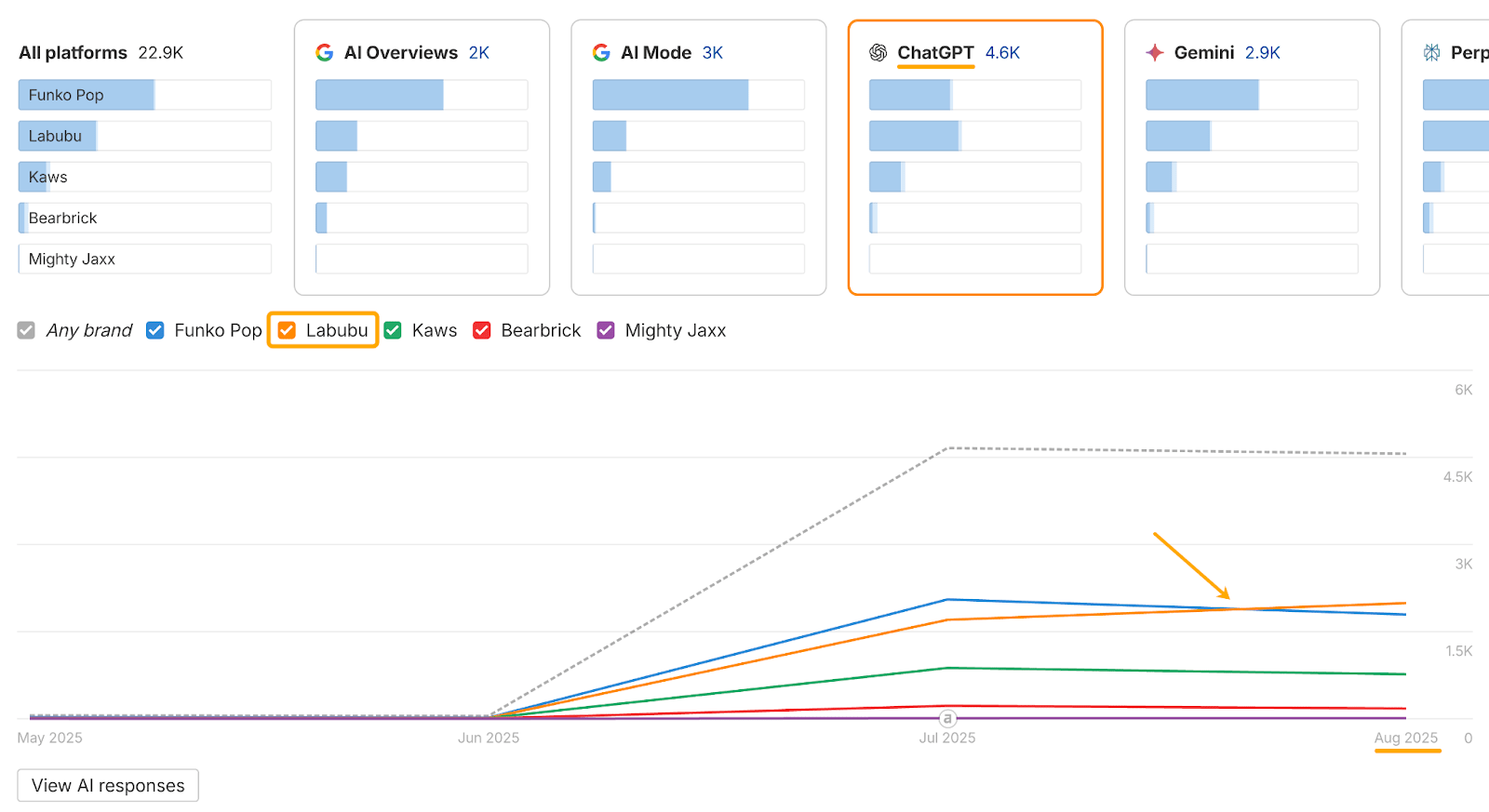

Here’s what that looks like in practice, using the example of Labubu (those creepy doll things that everyone has recently become obsessed with).

By combining TikTok data with Ahrefs Brand Radar, I traced how “Labubu” showed up across AI, social, search, and the broader web.

It made for an interesting timeline of events.

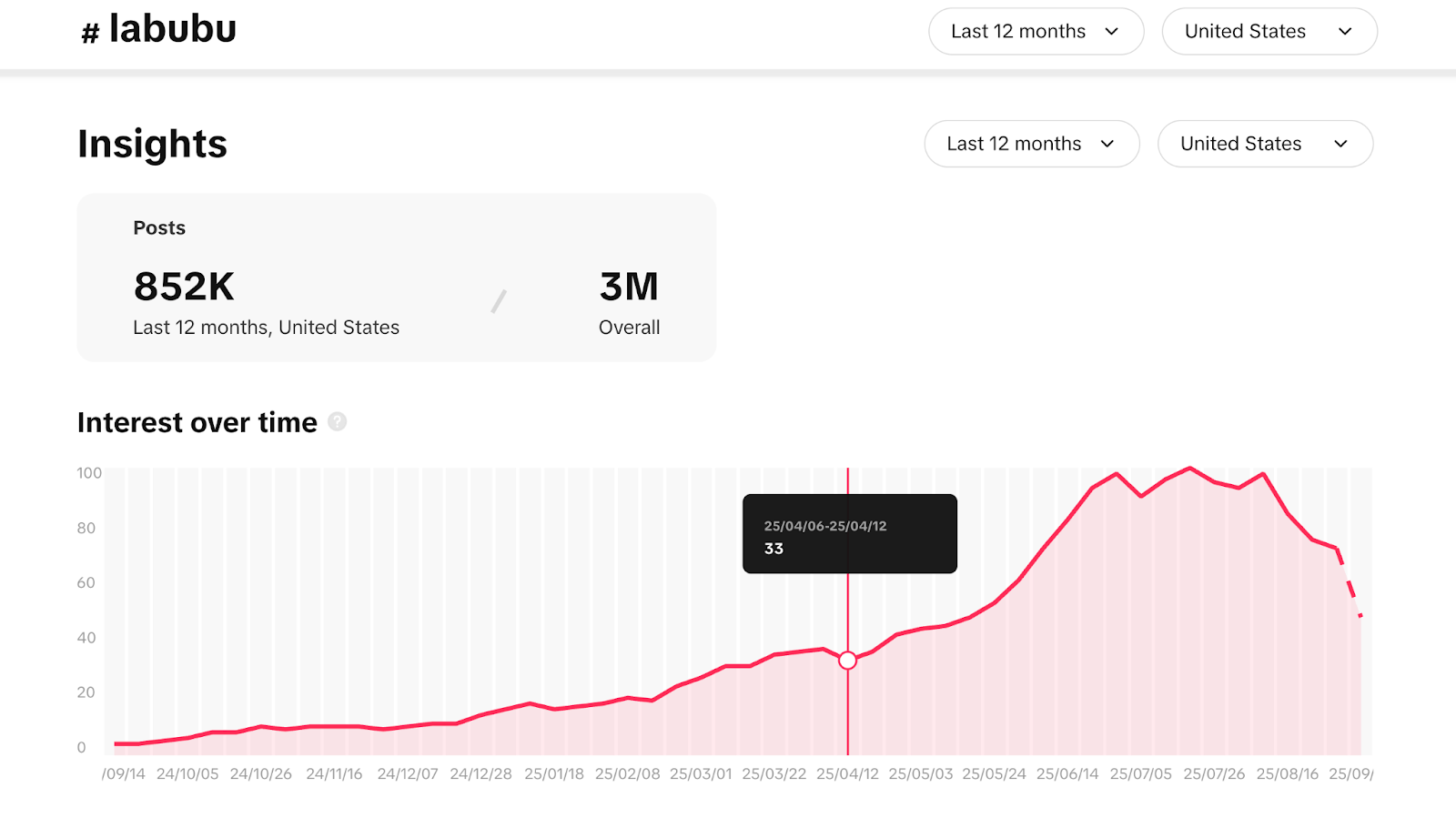

April: According to TikTok’s Creative Center, which lets you track trending keywords and hashtags, Labubu went viral on TikTok after unboxing videos took off in April.

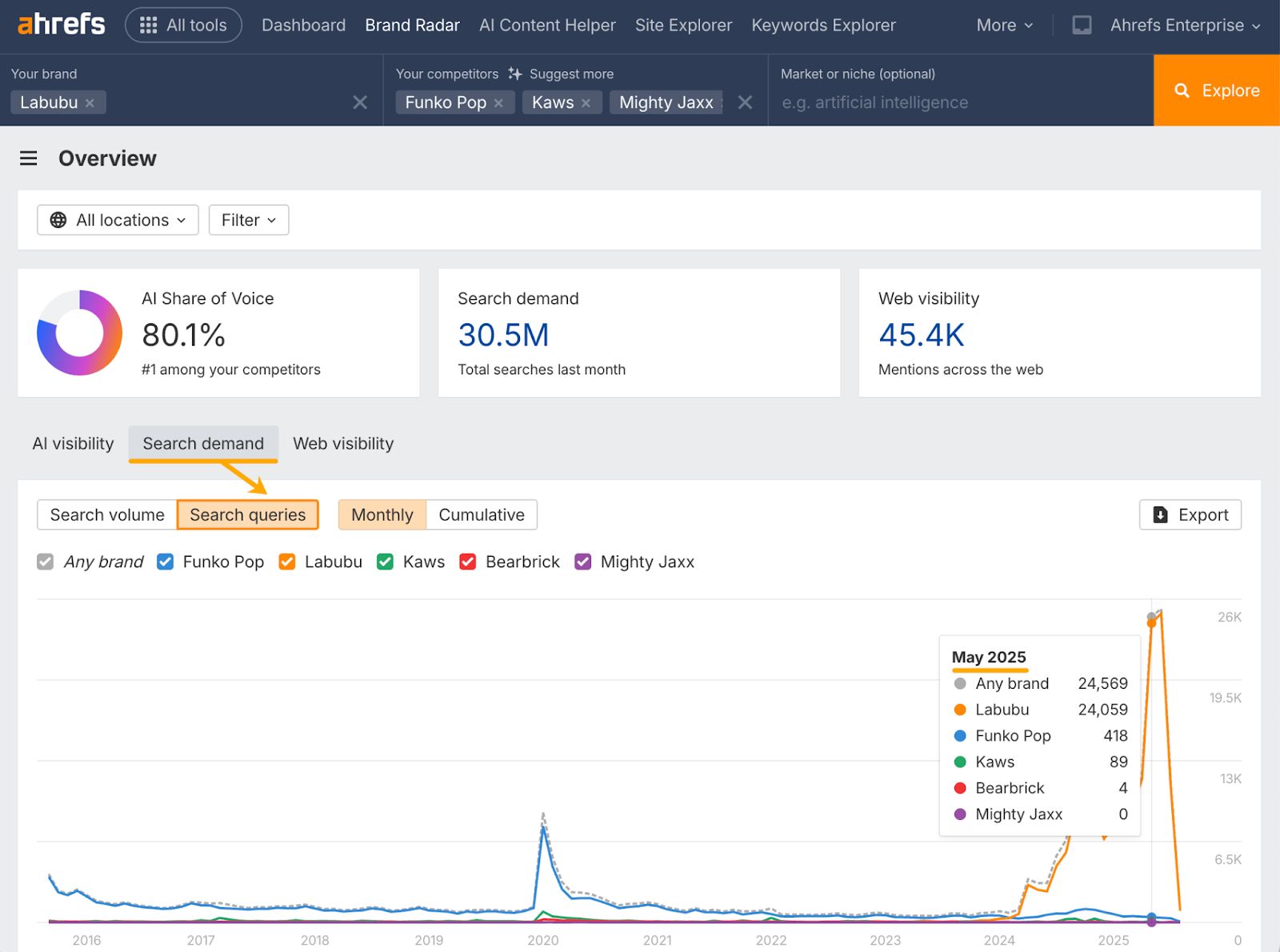

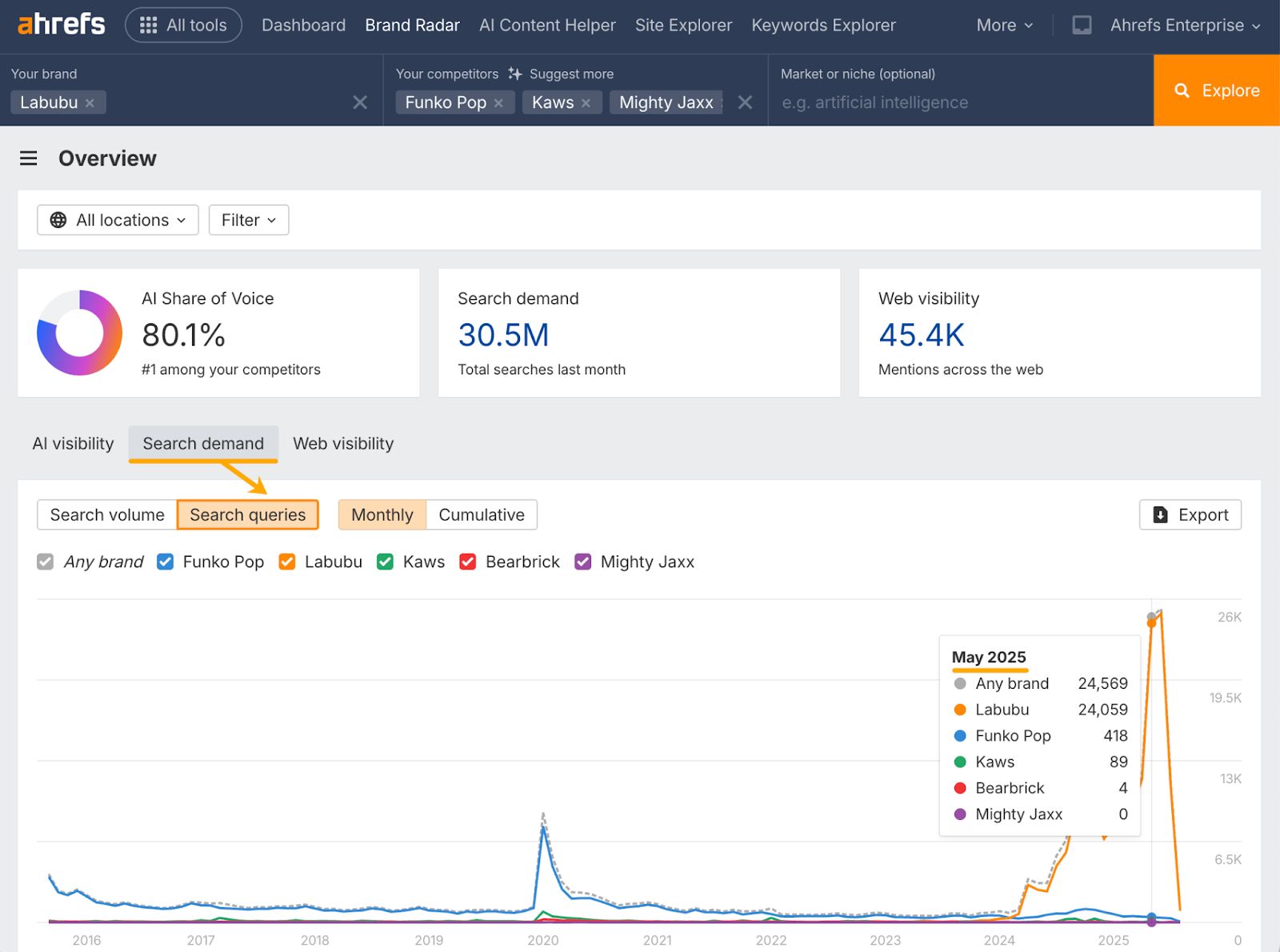

May: Thousands of “Labubu”-related search queries start showing up in the SERPs.

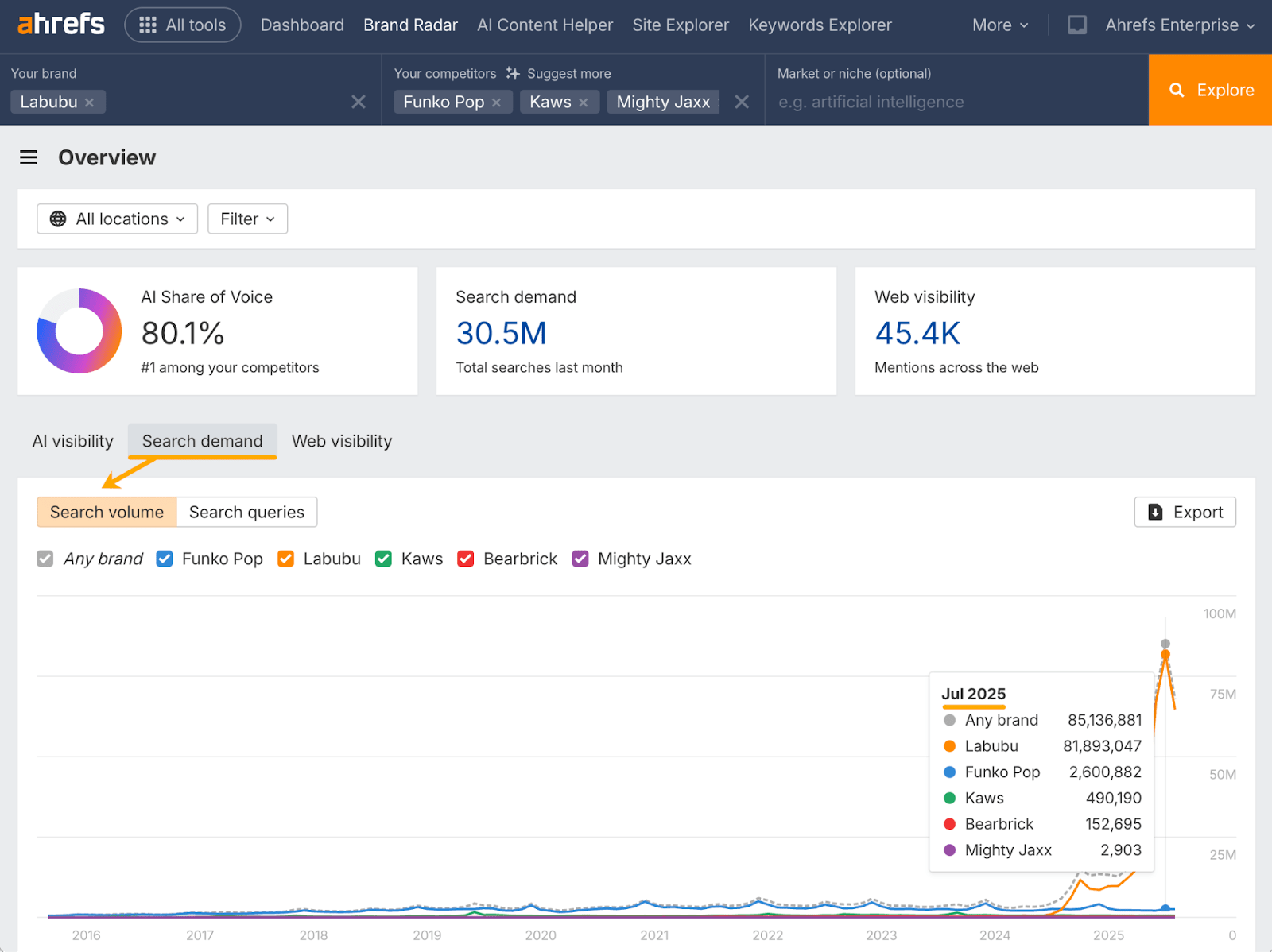

July: Search volume spikes for those same “Labubu” queries.

Also in July, web mentions for “Labubu” surge, overtaking market-leading toy Funko Pop.

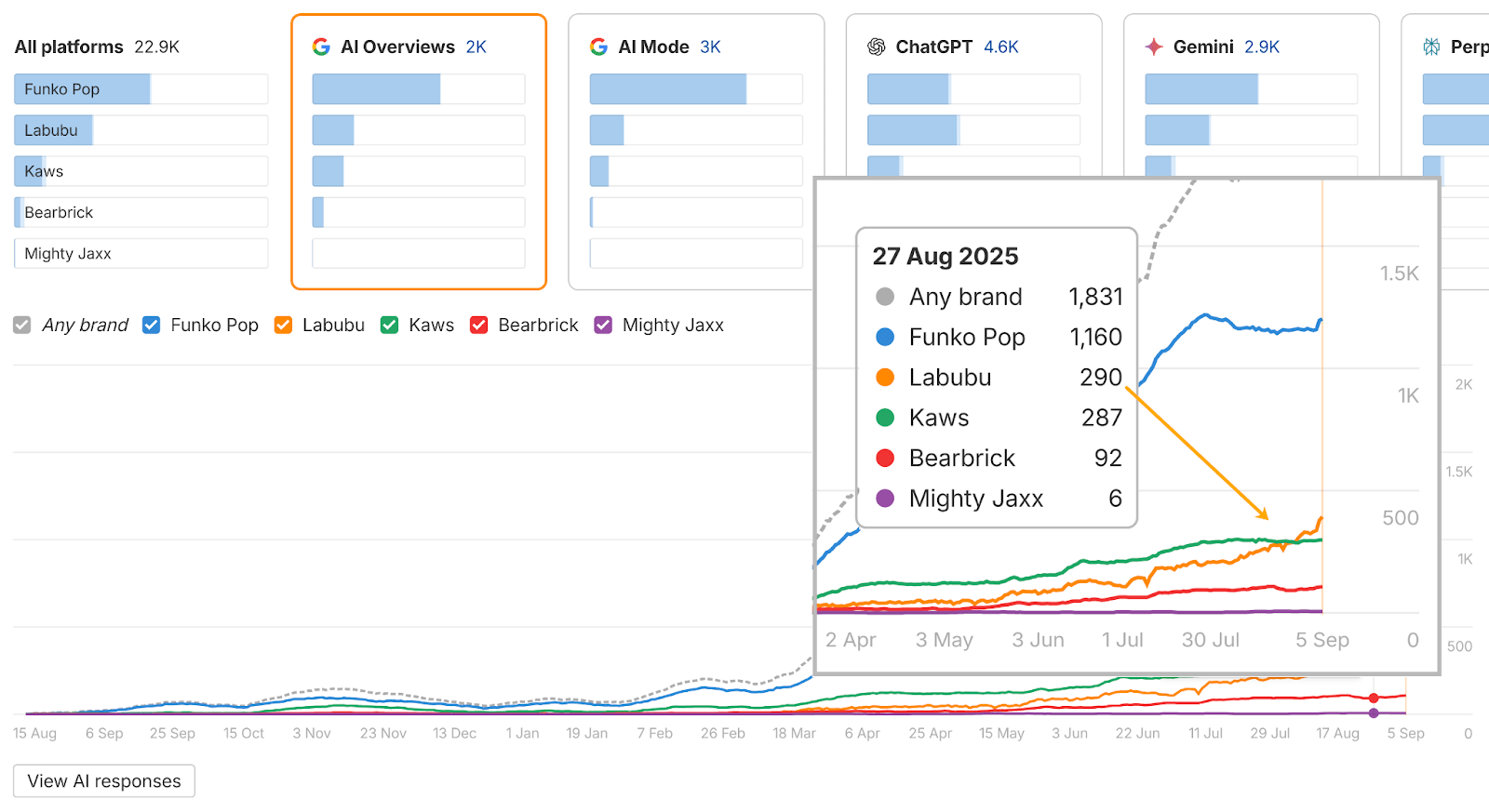

August: Labubu crosses over into AI visibility, gaining mentions in Google’s AI Overviews in late August and overtaking another major toy brand: Kaws.

Also in August, Labubu overtakes all other competitors in ChatGPT conversations.

This example shows that AI is part of a wider discovery ecosystem.

By tracking it directionally, you can see when and how a brand (or trend) breaks through into AI.

In all, it took four months for the Labubu brand to surface in AI conversations.

By running the same analysis on competitors, you can evaluate different scenarios, replicate what works, and set realistic expectations for your own AI visibility timeline.
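Running that comparison comes down to finding when each channel first crosses a chosen threshold and measuring the gap between channels. A minimal sketch: the monthly index values are made up for illustration (they are not Labubu’s real data), and the threshold of 50 is an arbitrary assumption you would tune to your own metrics.

```python
from typing import Optional

MONTHS = ["Mar", "Apr", "May", "Jun", "Jul", "Aug"]

# Hypothetical monthly signal per channel on a 0-100 index (illustrative numbers only).
signals = {
    "social": [5, 60, 80, 85, 90, 95],
    "search": [2, 10, 40, 55, 90, 92],
    "ai": [0, 1, 3, 8, 20, 70],
}

def first_breakout(series: list[int], threshold: int) -> Optional[int]:
    """Index of the first month where the signal reaches the threshold, or None."""
    for i, value in enumerate(series):
        if value >= threshold:
            return i
    return None

def lag_in_months(leader: list[int], follower: list[int], threshold: int = 50) -> Optional[int]:
    """Months between the leader channel breaking out and the follower breaking out."""
    a = first_breakout(leader, threshold)
    b = first_breakout(follower, threshold)
    if a is None or b is None:
        return None
    return b - a

# Social first reaches 50 in Apr, AI in Aug, so this prints 4.
print(lag_in_months(signals["social"], signals["ai"]))
```

Computing the same lags for several competitors gives you a rough range of how long breakthroughs into AI tend to take in your category.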

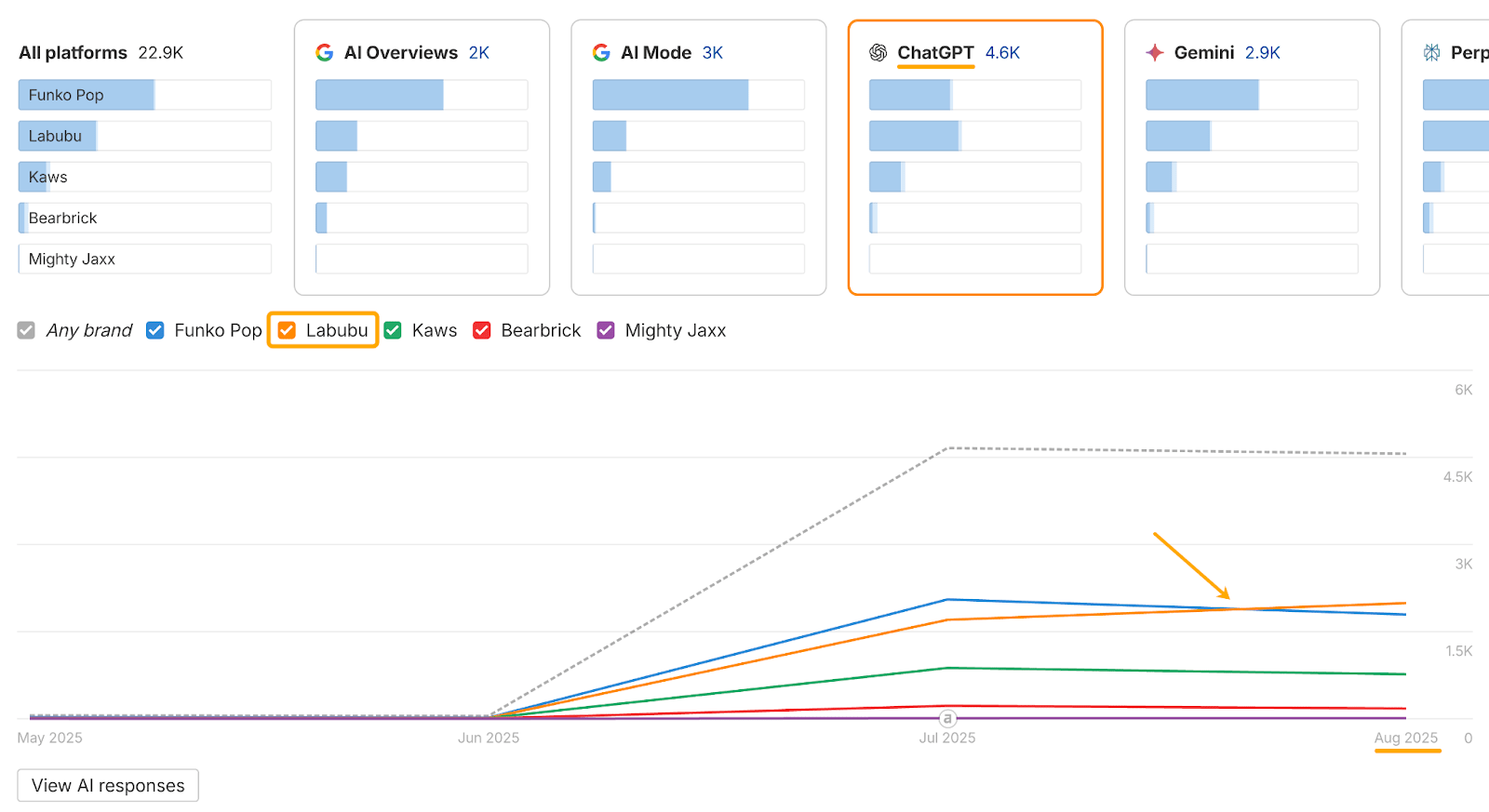

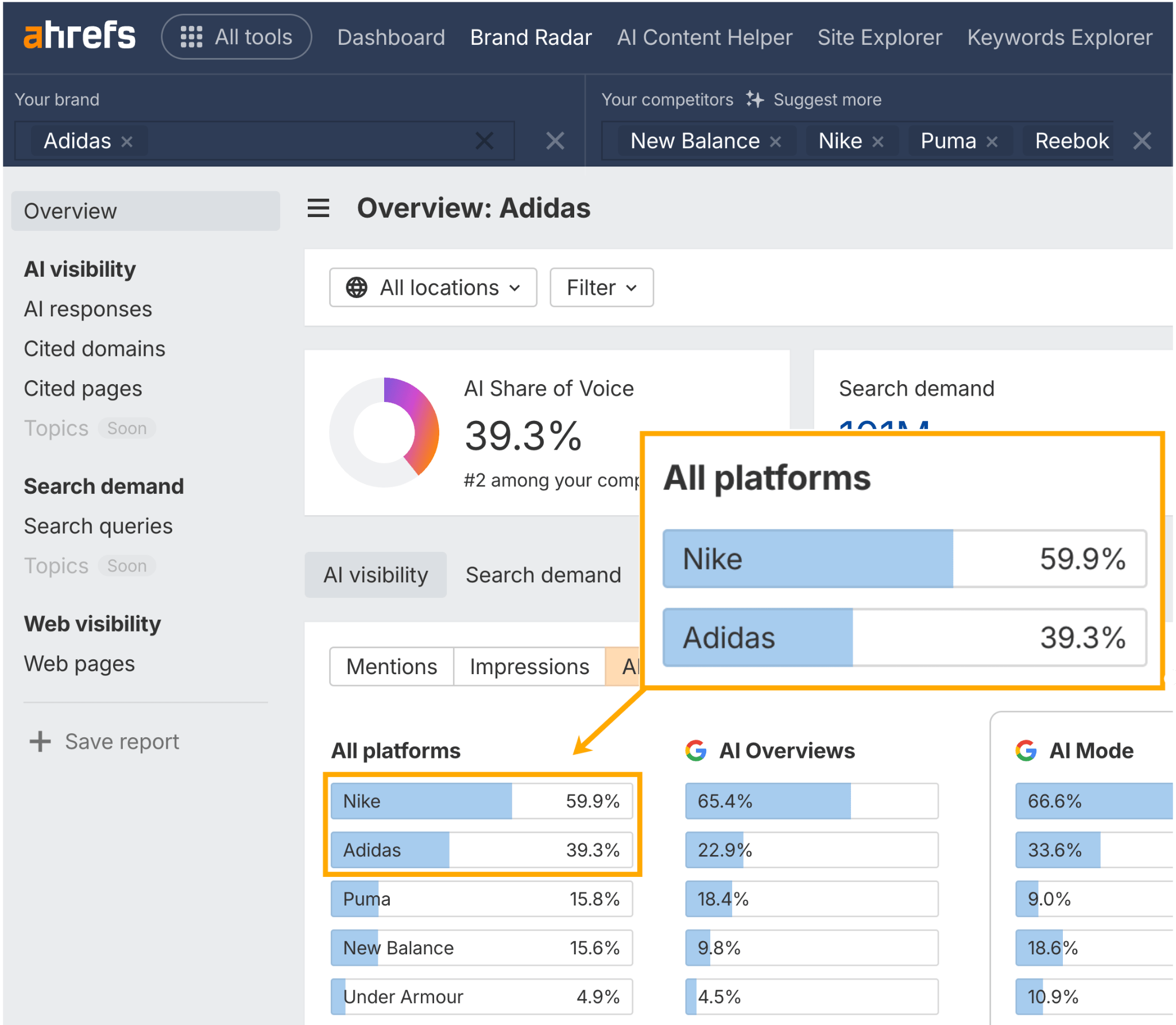

AI variance shouldn’t stop you from comparing your AI visibility to competitors.

The key is to track your brand’s AI Share of Voice across thousands of prompts, against the same competitors, on a consistent basis, to gauge your relative ownership of the market.
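Brand Radar’s exact Share of Voice formula isn’t spelled out here, so the following shows just one common definition, as an assumption: each brand’s mentions divided by all mentions of the tracked brands across the same prompt set. Unlike a simple “appears in X% of prompts” rate, these shares sum to 100%. Brands and responses are hypothetical.

```python
from collections import Counter

def share_of_voice(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all tracked-brand mentions (counted at most once per response)."""
    counts: Counter = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    return {b: (counts[b] / total if total else 0.0) for b in brands}

# Hypothetical AI answers to running-shoe prompts.
responses = [
    "Nike and Adidas both make good running shoes.",
    "Nike is the usual pick for sprinters.",
    "Adidas, Nike, or New Balance all work.",
    "New Balance is great for wide feet.",
]
print(share_of_voice(responses, ["Nike", "Adidas", "New Balance"]))
```

Keeping the prompt set and competitor list fixed between runs is what makes these shares comparable over time.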

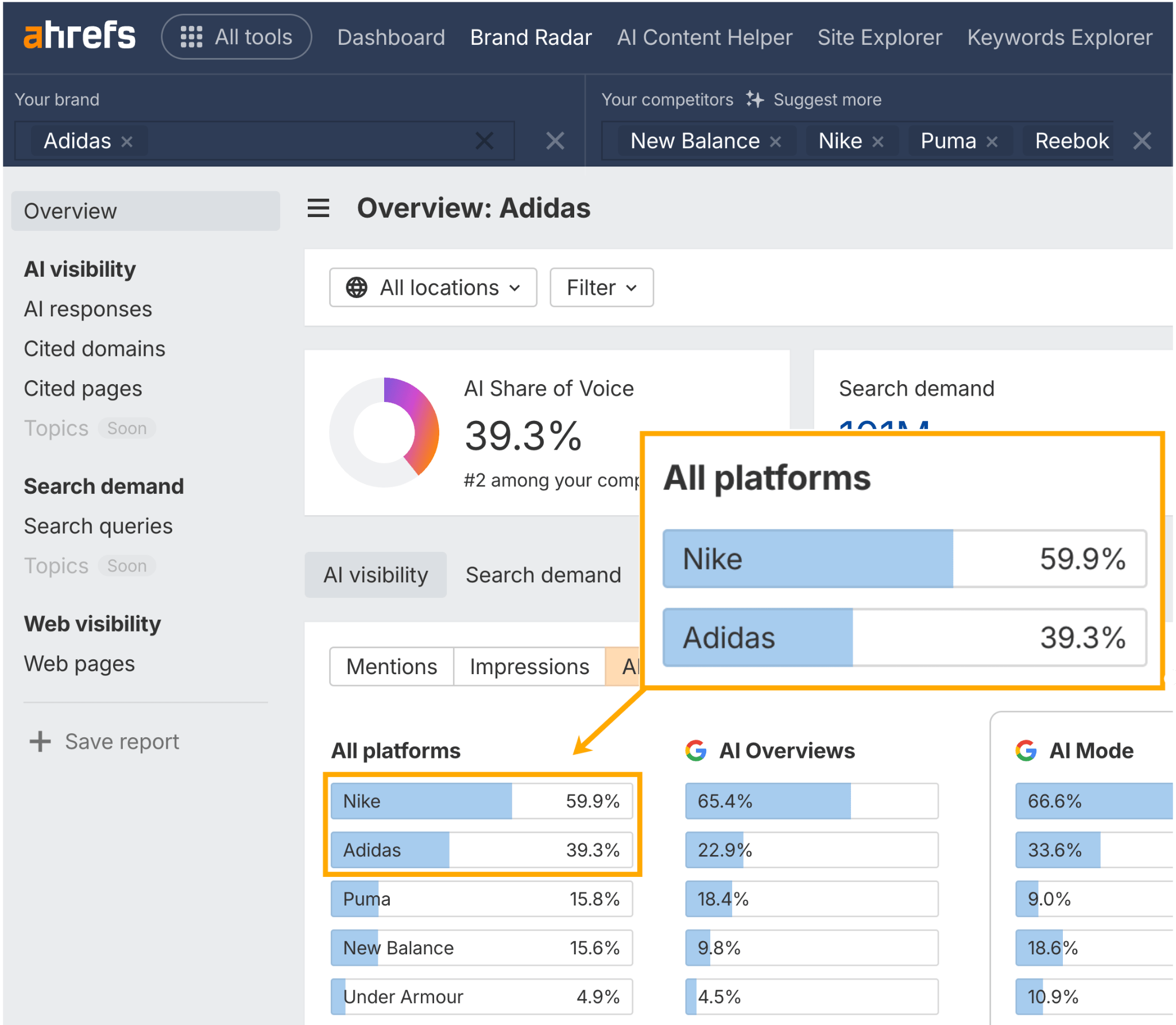

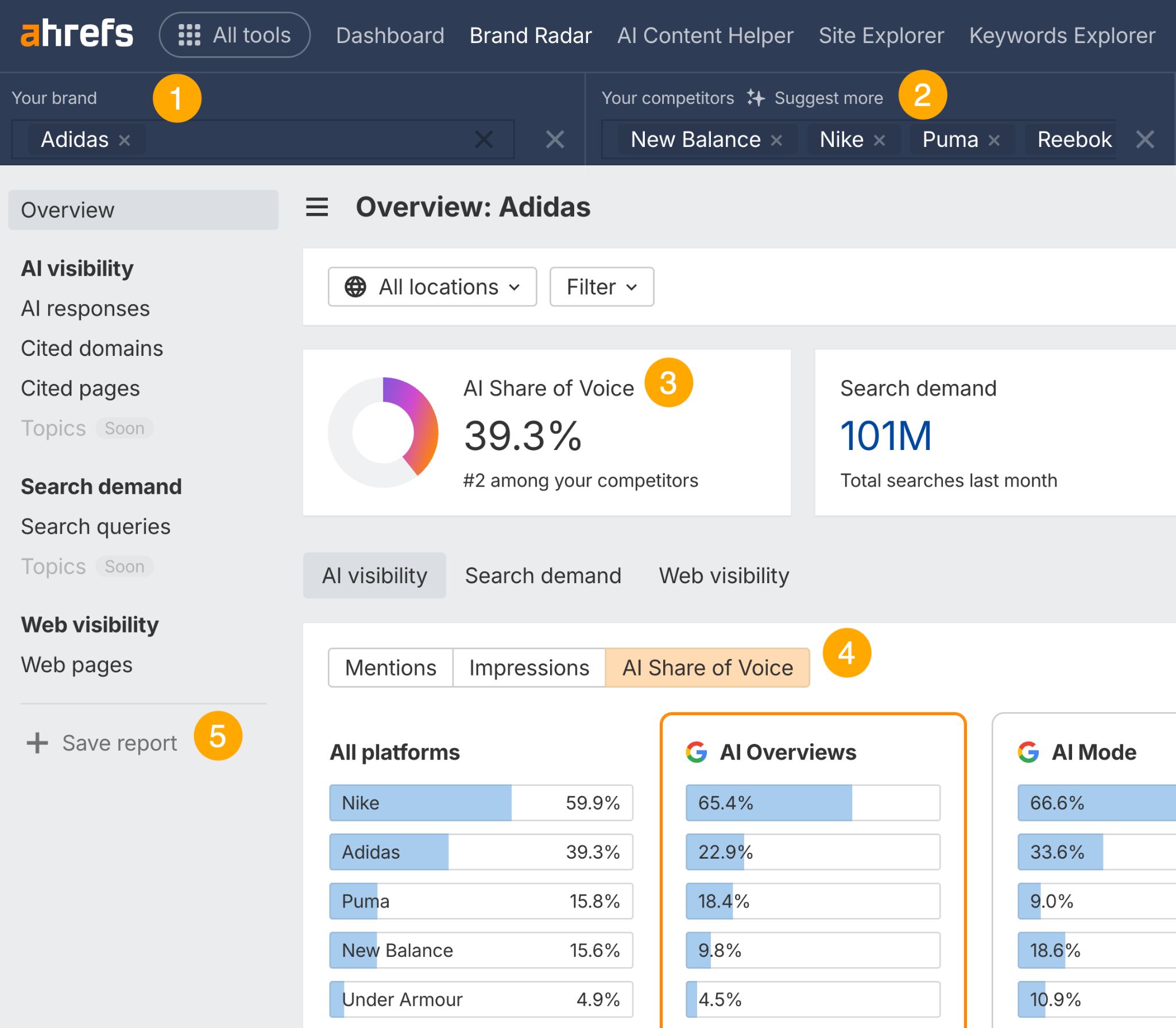

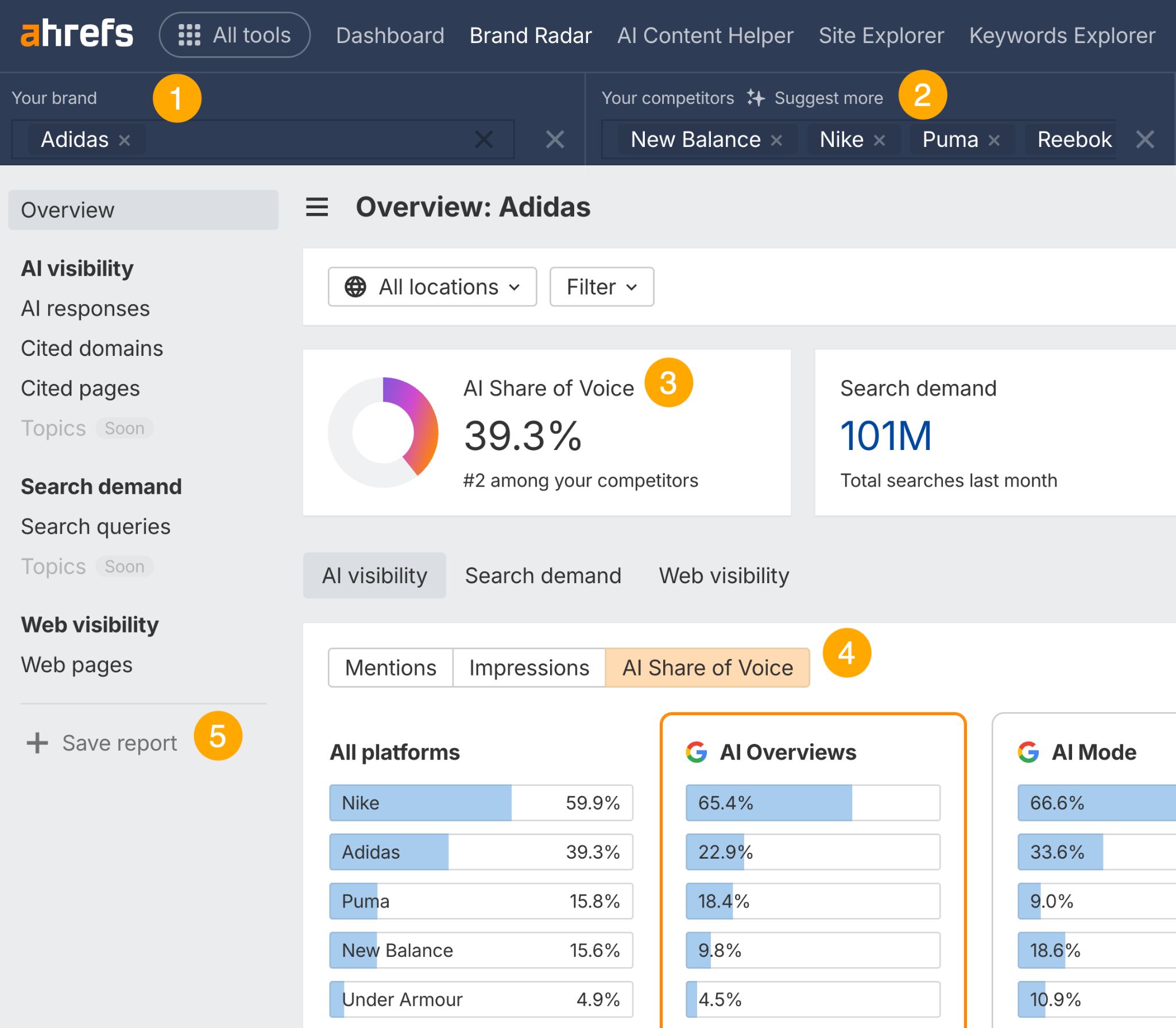

If a brand (e.g. Adidas) appears in ~40% of prompts, but a competitor (e.g. Nike) shows up in ~60%, that’s a clear gap, even if the numbers bounce around slightly from run to run.

Tracking AI search can show you how your AI visibility is trending.

For example, if Adidas moves from 40% to 45% coverage, that’s a clear directional win.
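Whether a move like 40% to 45% clears run-to-run noise depends on how many prompts sit behind each number. A two-proportion z-test is one standard way to check; this sketch assumes the two snapshots are independent samples of equal size, which is a simplification for AI responses.

```python
import math

def two_proportion_z(p1: float, p2: float, n1: int, n2: int) -> float:
    """z statistic for the change from proportion p1 (n1 prompts) to p2 (n2 prompts), pooled variance."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p2 - p1) / se

for n in (100, 1_000, 10_000):
    z = two_proportion_z(0.40, 0.45, n, n)
    verdict = "beyond noise" if abs(z) > 1.96 else "within noise"
    print(f"{n:>6} prompts per snapshot: z = {z:.2f} ({verdict})")
```

With 100 prompts per snapshot, z is roughly 0.7, so the five-point gain is indistinguishable from noise; with 1,000 prompts it is about 2.3, past the conventional 1.96 cutoff for 95% confidence.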

Brand Radar supports this kind of longitudinal AI Share of Voice tracking.

Here’s how it works in five simple steps:

- Search your brand

- Enter your competitors

- Check your overall AI Share of Voice percentage

- Hit the “AI Share of Voice” tab to benchmark against your competitors

- Save the same prompt report and return to it to track your progress

Over time, these benchmarks show whether you’re gaining or losing ground in AI conversations.

A handful of prompts won’t tell you much, even if they’re real.

But when you look at hundreds of variations, you can figure out whether AI really ties your brand to its key topics.

Instead of asking “Do we appear for [insert query]?”, we should be asking “Across all the variations of prompts about this topic, how often do we appear?”
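Answering the topic-level question means grouping prompt variations by topic and computing a mention rate per group instead of per prompt. A minimal sketch; the records and topic labels are hypothetical, and in practice they would come from whatever prompt dataset you export.

```python
from collections import defaultdict

# Hypothetical records: (topic, prompt variation, whether the brand was mentioned in the answer)
records = [
    ("crm for startups", "best CRM for startups", True),
    ("crm for startups", "top CRM tools for early-stage companies", True),
    ("crm for startups", "cheap CRM for a new business", False),
    ("enterprise crm", "best enterprise CRM", False),
    ("enterprise crm", "CRM for large sales teams", False),
]

def topic_visibility(rows: list[tuple[str, str, bool]]) -> dict[str, float]:
    """Share of prompt variations per topic in which the brand appeared."""
    seen: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for topic, _prompt, mentioned in rows:
        seen[topic] += 1
        hits[topic] += int(mentioned)
    return {topic: hits[topic] / seen[topic] for topic in seen}

print(topic_visibility(records))
```

Widening the grouping (for example, from one subtopic to the whole category) changes the denominator, which is why a brand can look dominant in a subtopic yet small across the full market.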

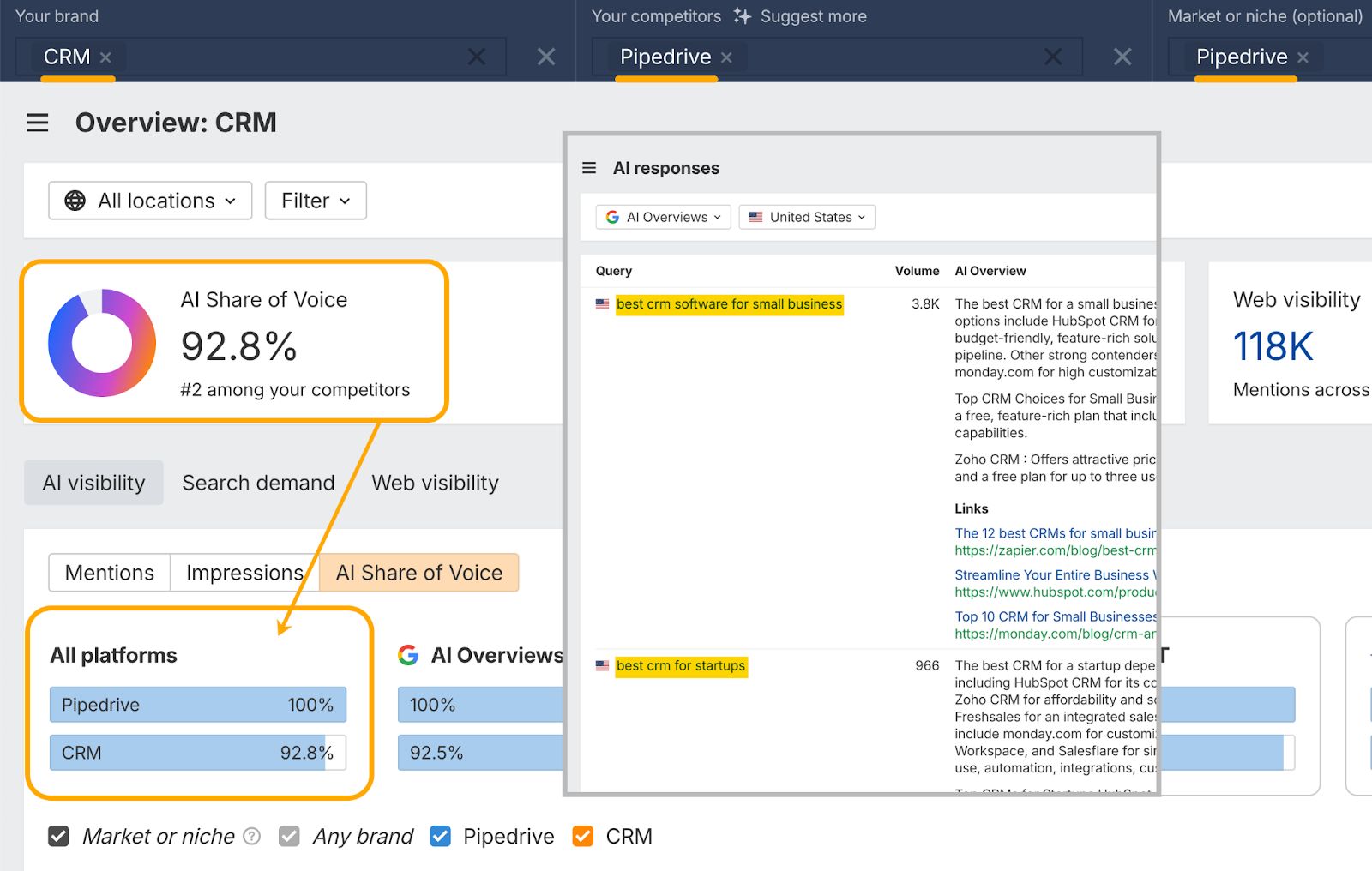

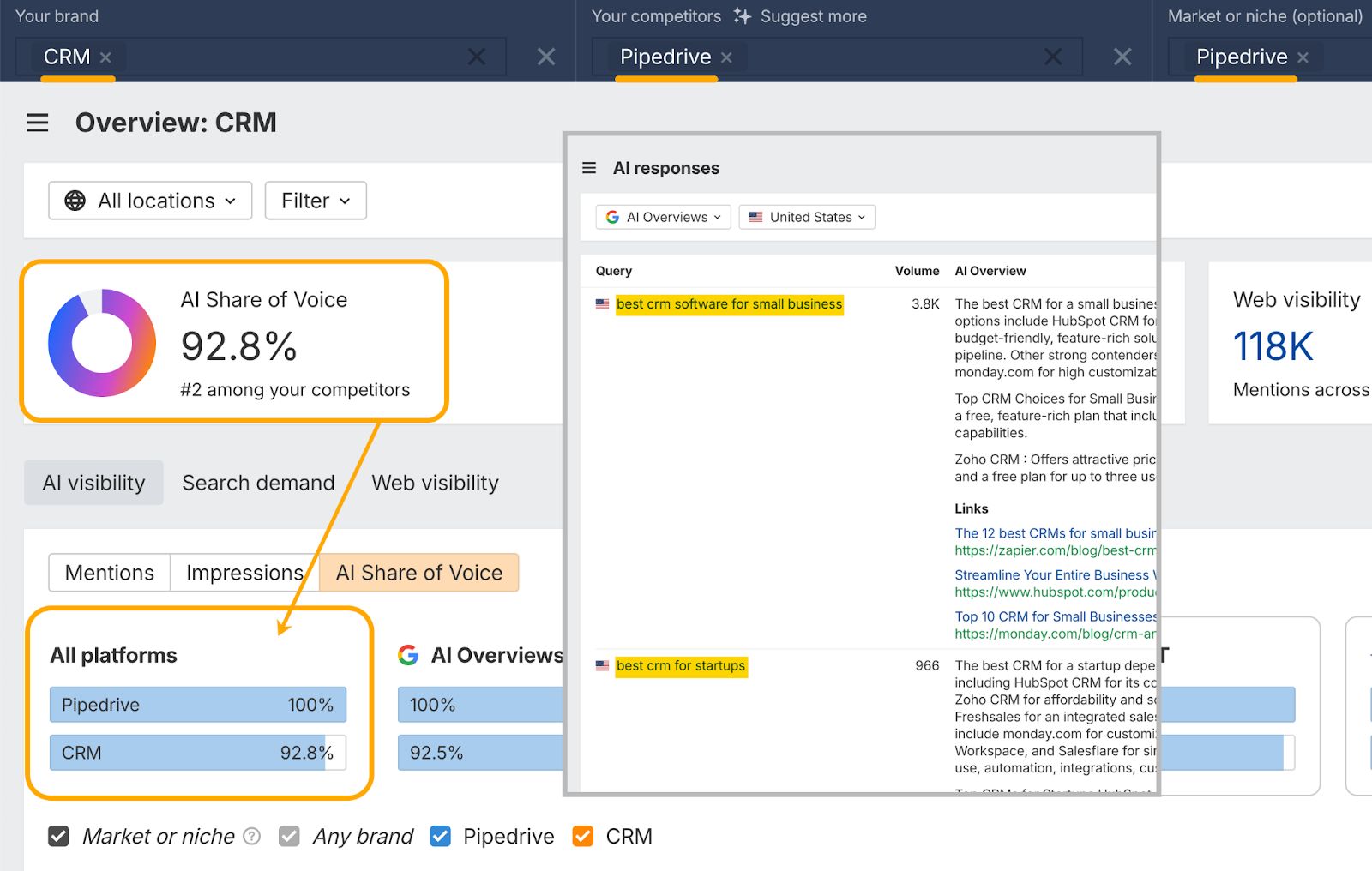

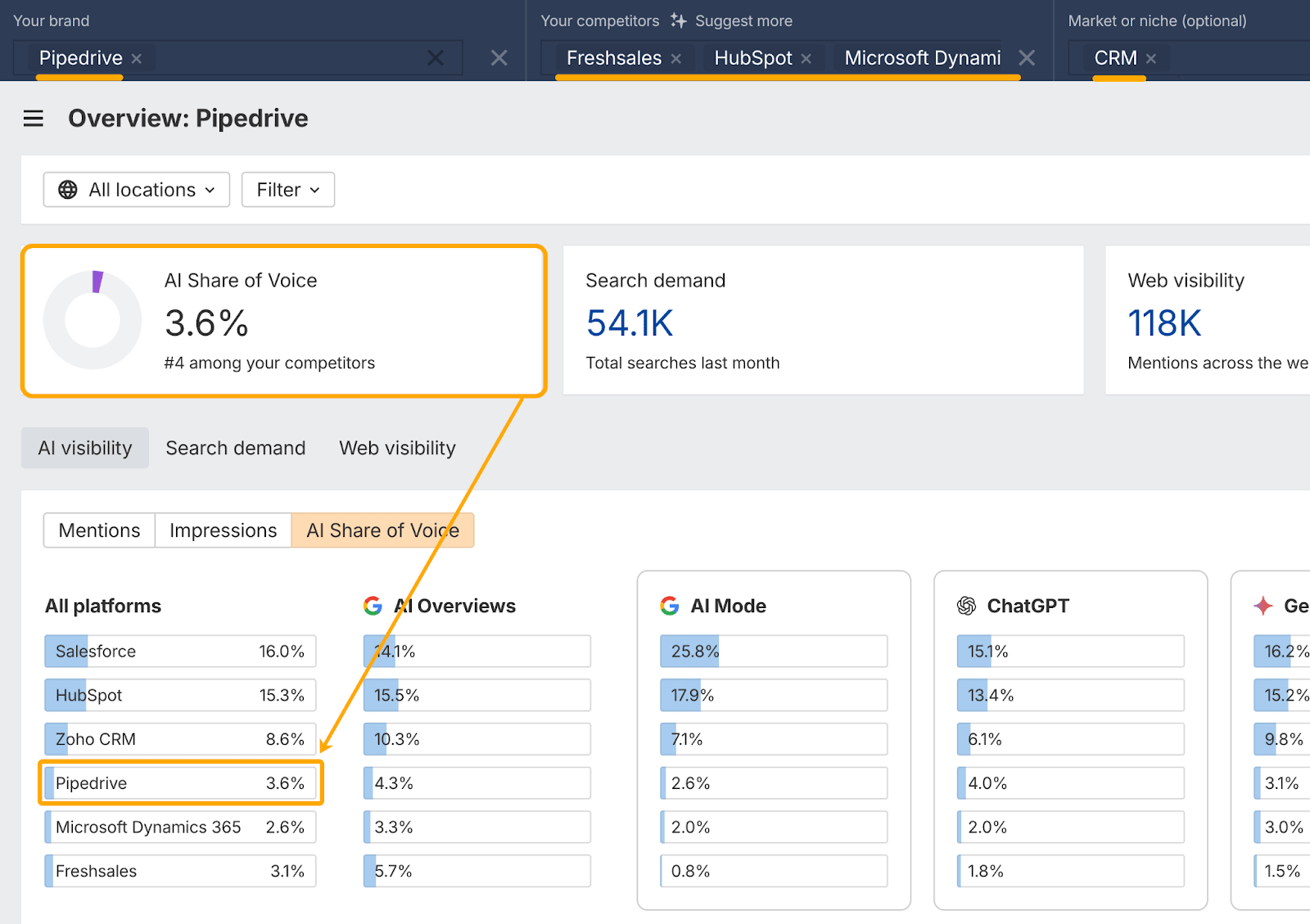

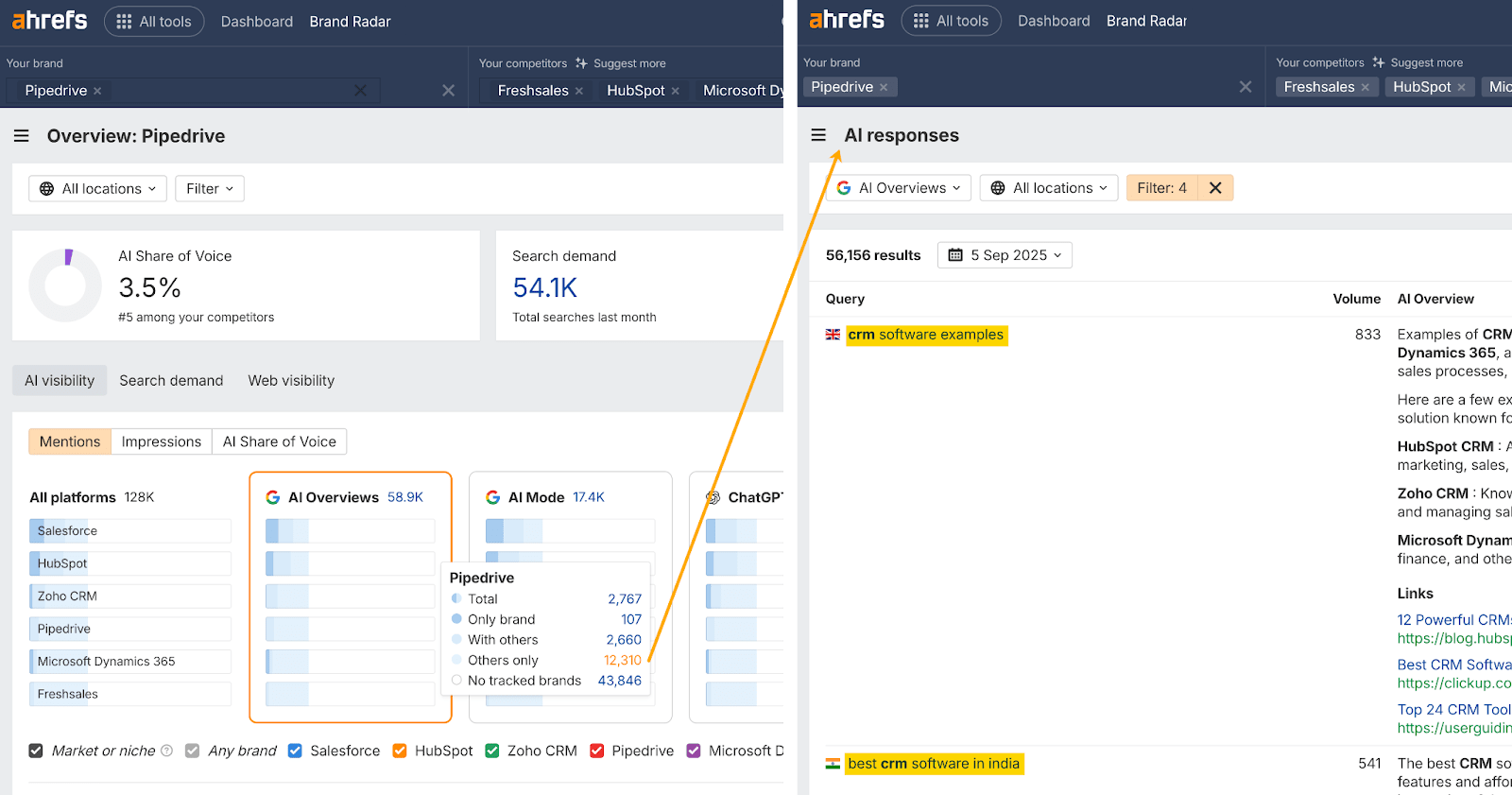

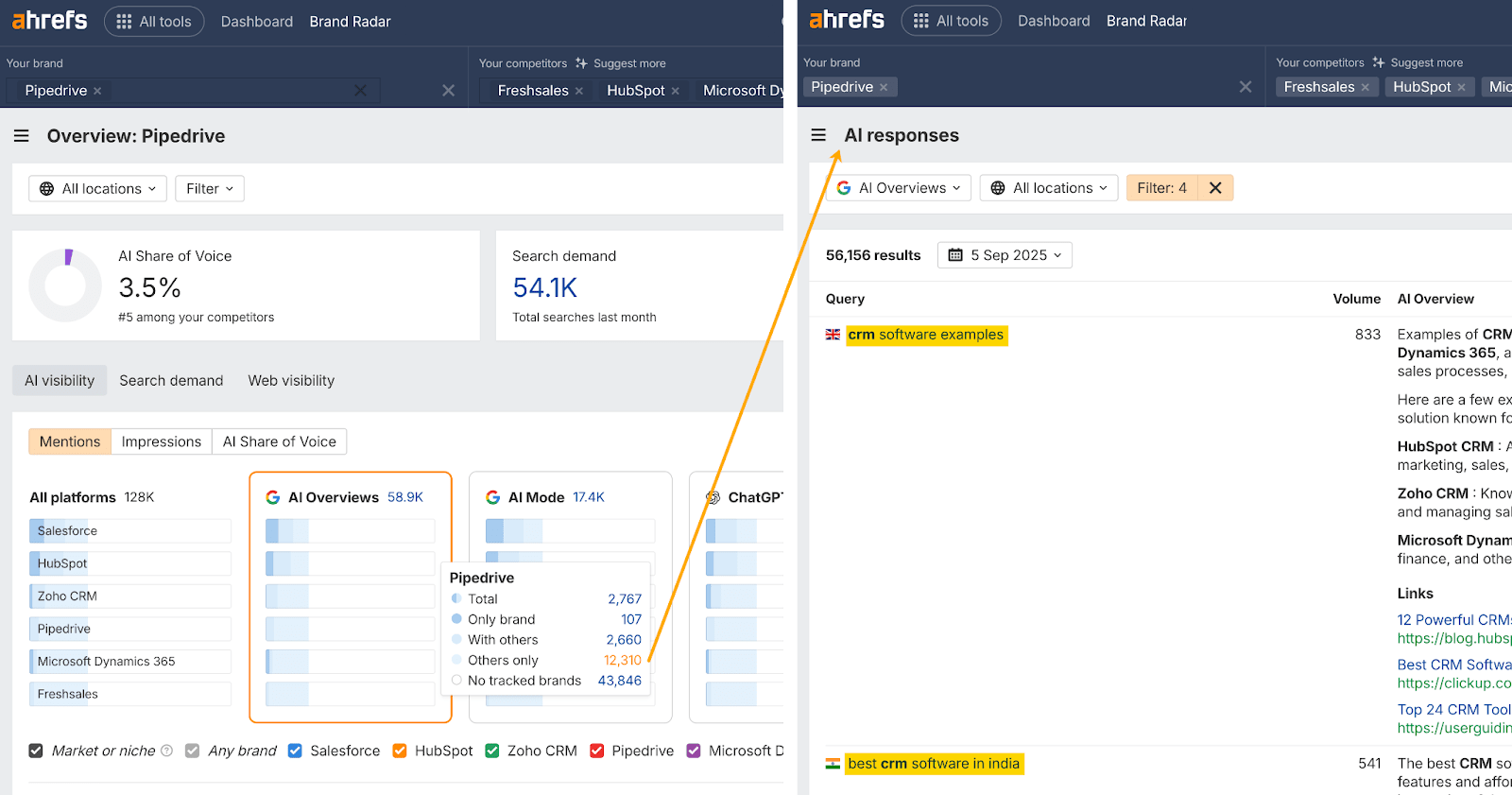

Take Pipedrive, for example.

CRM-related prompts like “best CRM for startups” and “best CRM software for small business” account for 92.8% of Pipedrive’s AI visibility (~7K prompts).

But when you benchmark against the entire CRM market (~128K prompts), their overall share of voice drops to just 3.6%.

So, Pipedrive clearly “owns” certain CRM subtopics, but not the whole category.

This style of AI tracking gives you perspective.

It shows you how often you appear across subtopics and the broader market, but just as importantly, it reveals where you’re missing.

These gaps (the “unknown unknowns”) are opportunities and risks you wouldn’t have thought to check for.

They give you a roadmap of what to prioritize next.

To find these opportunities, Pipedrive can do a competitor gap analysis in three steps:

- Click “Others only”

- Study the prompt topics they’re missing in the AI Responses report

- Create or optimize content to claim more of that visibility
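Conceptually, an “Others only” view is a set difference: prompts where at least one competitor is mentioned but your brand is not. Here is a minimal sketch of that logic on hypothetical data; it illustrates the idea and makes no claim about how Brand Radar implements the filter.

```python
from collections import Counter

# Hypothetical prompt -> (topic, brands mentioned in the AI answer)
answers = {
    "best CRM for startups": ("startups", {"Pipedrive", "HubSpot"}),
    "CRM with built-in marketing automation": ("marketing", {"HubSpot", "Salesforce"}),
    "enterprise CRM with custom objects": ("enterprise", {"Salesforce"}),
    "free CRM for freelancers": ("freelancers", {"HubSpot", "Zoho"}),
    "simple sales pipeline tool": ("startups", {"Pipedrive"}),
}

def others_only(
    data: dict[str, tuple[str, set[str]]], brand: str, competitors: set[str]
) -> dict[str, str]:
    """Map each prompt where a competitor appears but `brand` does not to its topic."""
    return {
        prompt: topic
        for prompt, (topic, mentioned) in data.items()
        if brand not in mentioned and mentioned & competitors
    }

gaps = others_only(answers, "Pipedrive", {"HubSpot", "Salesforce", "Zoho"})
# Count gap prompts per topic to see which topics to prioritize.
print(Counter(gaps.values()).most_common())
```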

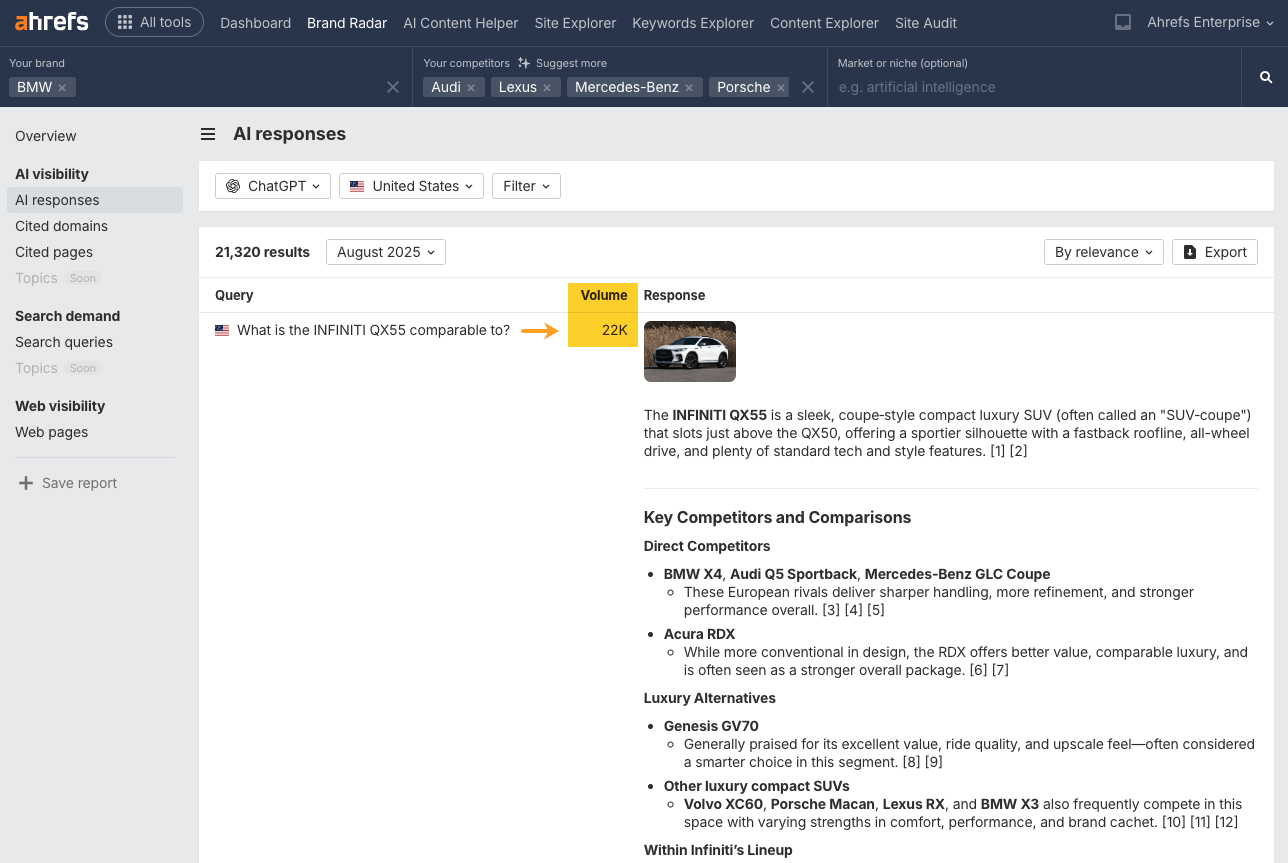

AI results are noisy and synthetic prompts aren’t perfect, but that doesn’t stop them from revealing something important: how your brand is framed in the answers that do appear.

You don’t need flawless data to learn useful things.

The way AIs describe your brand (the adjectives they use, the sites they group you with) can tell you a lot about your positioning, even if the prompts are proxies and the answers fluctuate.

- Are you labeled the “budget-friendly” option while competitors are framed as “enterprise-ready”?

- Do you consistently get recommended for “ease of use” while another brand is praised for “advanced features”?

- Are you mentioned alongside market leaders, or lumped in with niche alternatives?

These patterns reveal the narrative that AI assistants attach to your brand.

And while individual answers may vary, these recurring themes add up to a clear signal.
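Spotting those recurring themes can start with counting which descriptors show up in answers that mention your brand. The descriptor list and responses below are hypothetical, and the approach is deliberately crude: it credits every descriptor in a matching answer to your brand, so a real analysis would want a larger vocabulary and sentence-level attribution.

```python
import re
from collections import Counter

# Hypothetical framing vocabulary to look for.
DESCRIPTORS = ["budget-friendly", "enterprise-ready", "ease of use", "advanced features", "seo", "backlinks"]

def descriptor_counts(responses: list[str], brand: str) -> Counter:
    """Count descriptors (whole-word, case-insensitive) in answers that mention the brand."""
    counts: Counter = Counter()
    for text in responses:
        lowered = text.lower()
        if brand.lower() not in lowered:
            continue  # only answers that mention the brand count toward its framing
        for term in DESCRIPTORS:
            if re.search(rf"\b{re.escape(term)}\b", lowered):
                counts[term] += 1
    return counts

# Hypothetical AI answers.
responses = [
    "Acme is best known as an SEO and backlinks tool.",
    "For backlinks, Acme and Globex are the usual picks.",
    "Globex is enterprise-ready and budget-friendly.",
]
print(descriptor_counts(responses, "Acme"))
```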

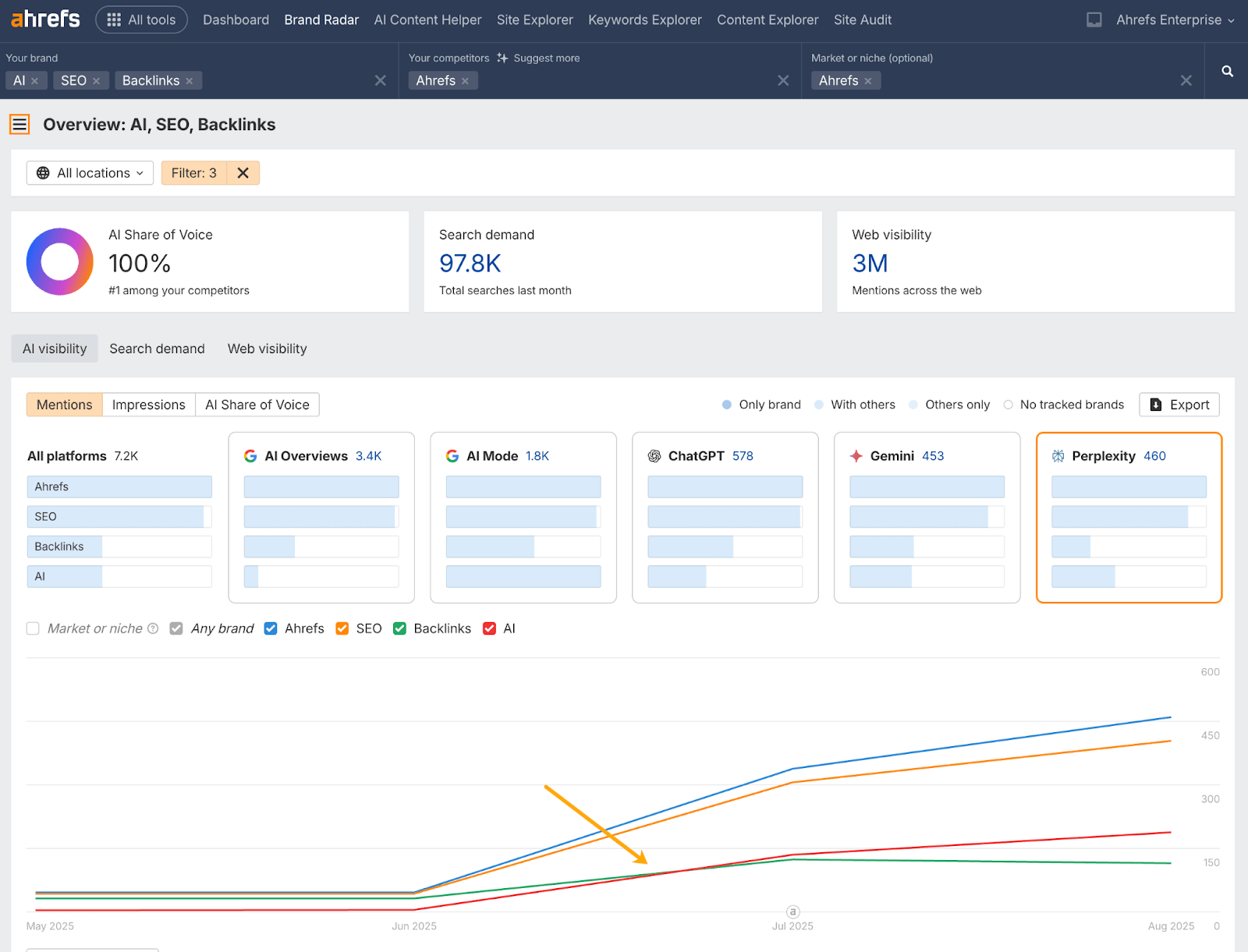

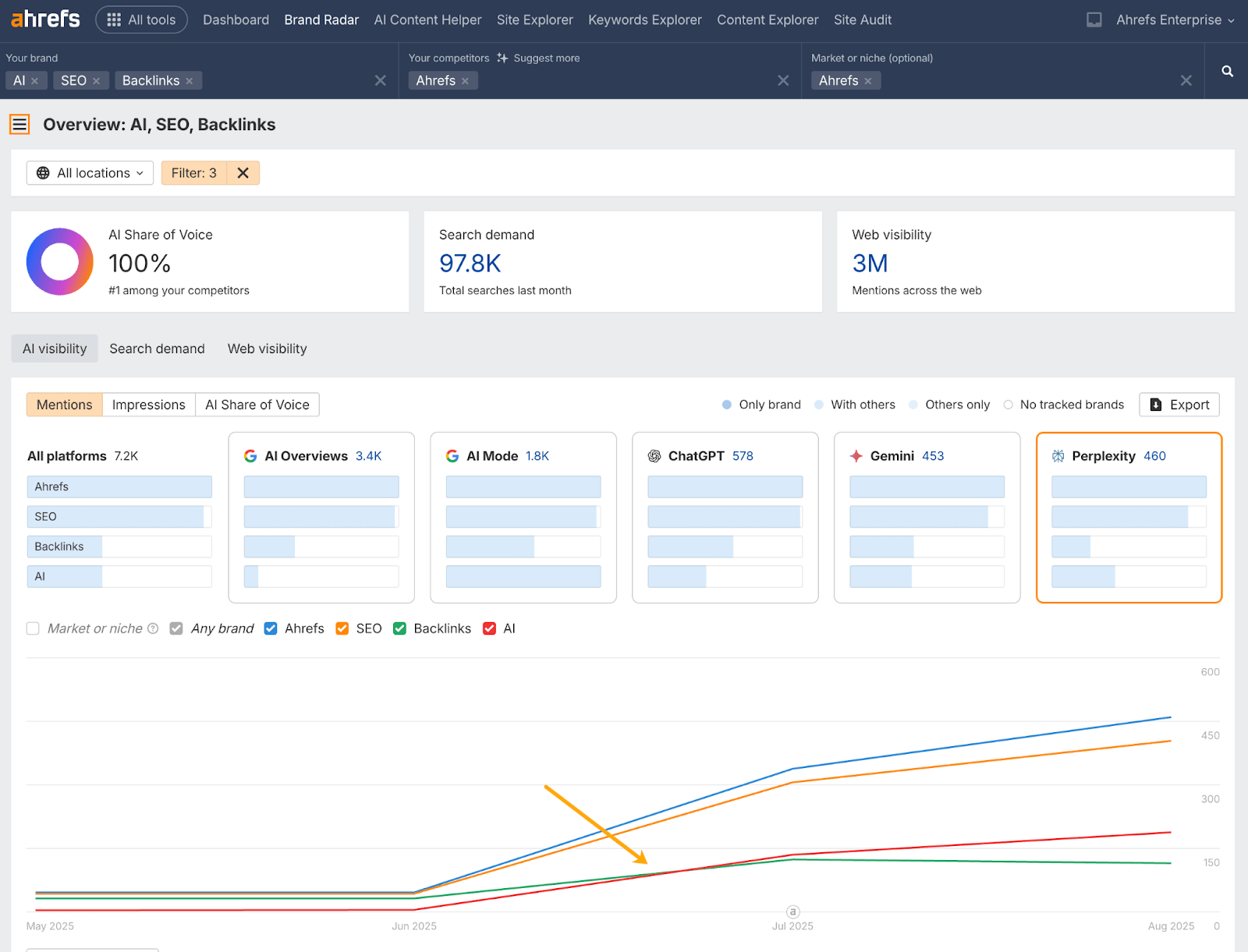

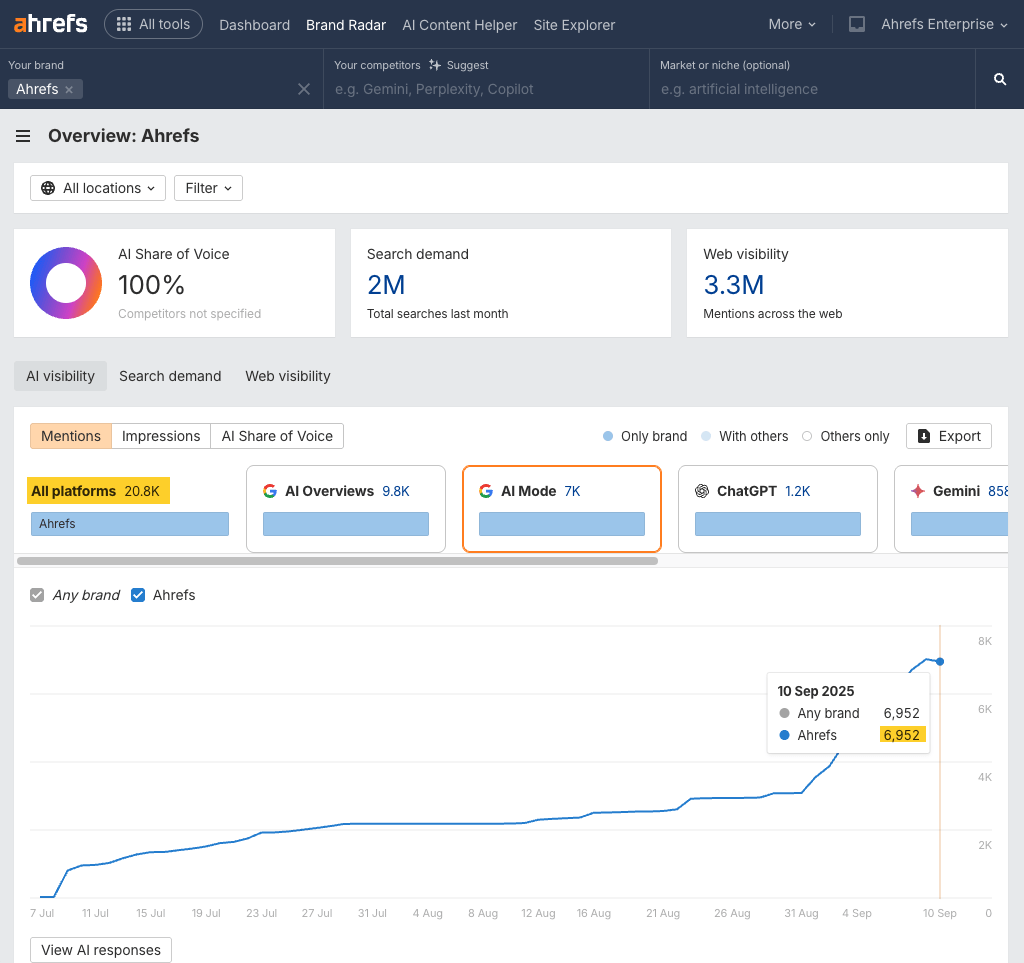

For example, right now we have an issue with our own AI visibility.

Ahrefs’ positioning has shifted in the past year as we’ve added new features and evolved into a marketing platform.

But AI responses still describe us primarily as an ‘SEO’ or ‘Backlinks’ tool.

By putting out consistent AI features, products, content, and messaging, our positioning is now beginning to shift on some AI surfaces.

You can see this when the purple trend line (AI) overtakes the green (Backlinks) in the chart below.

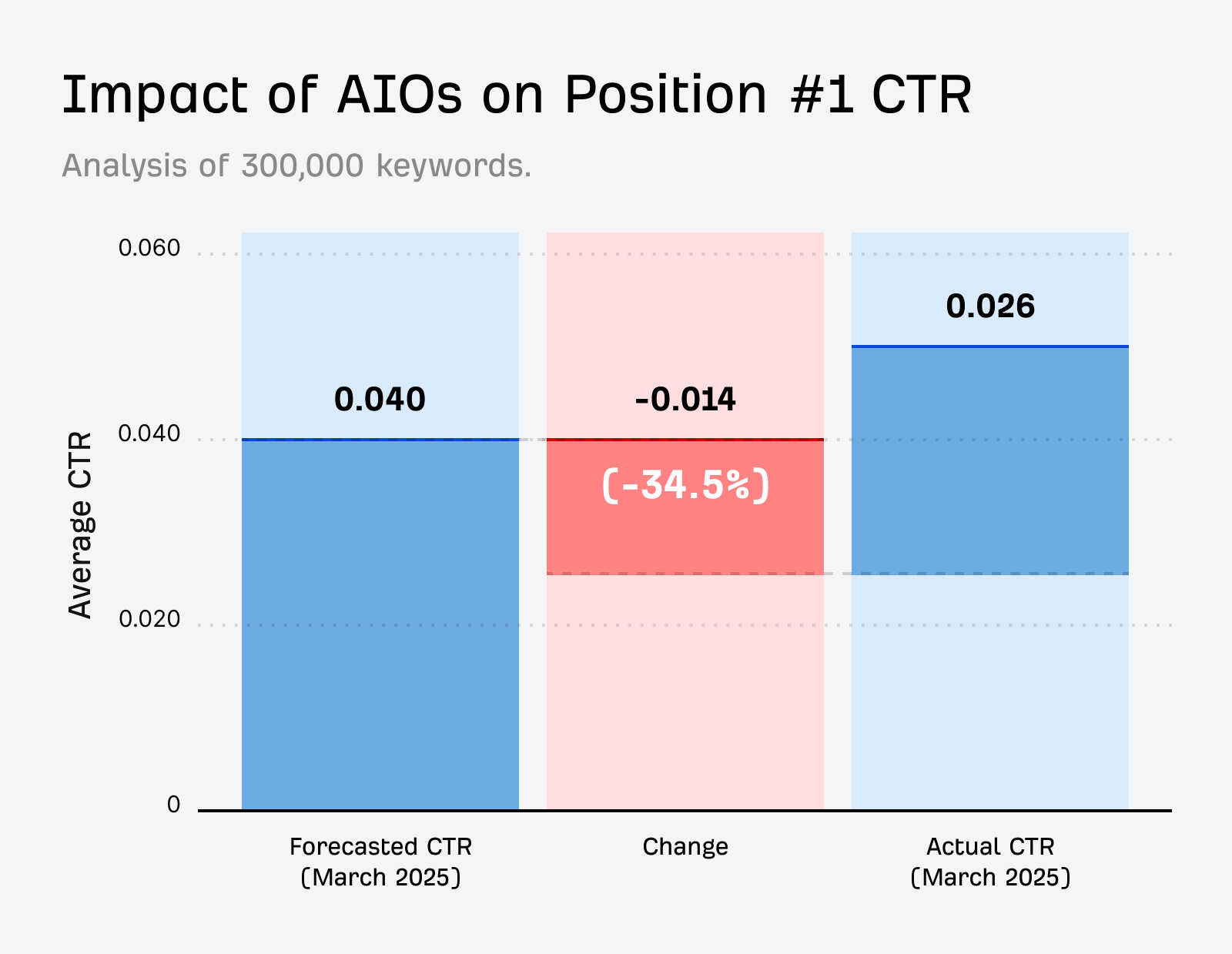

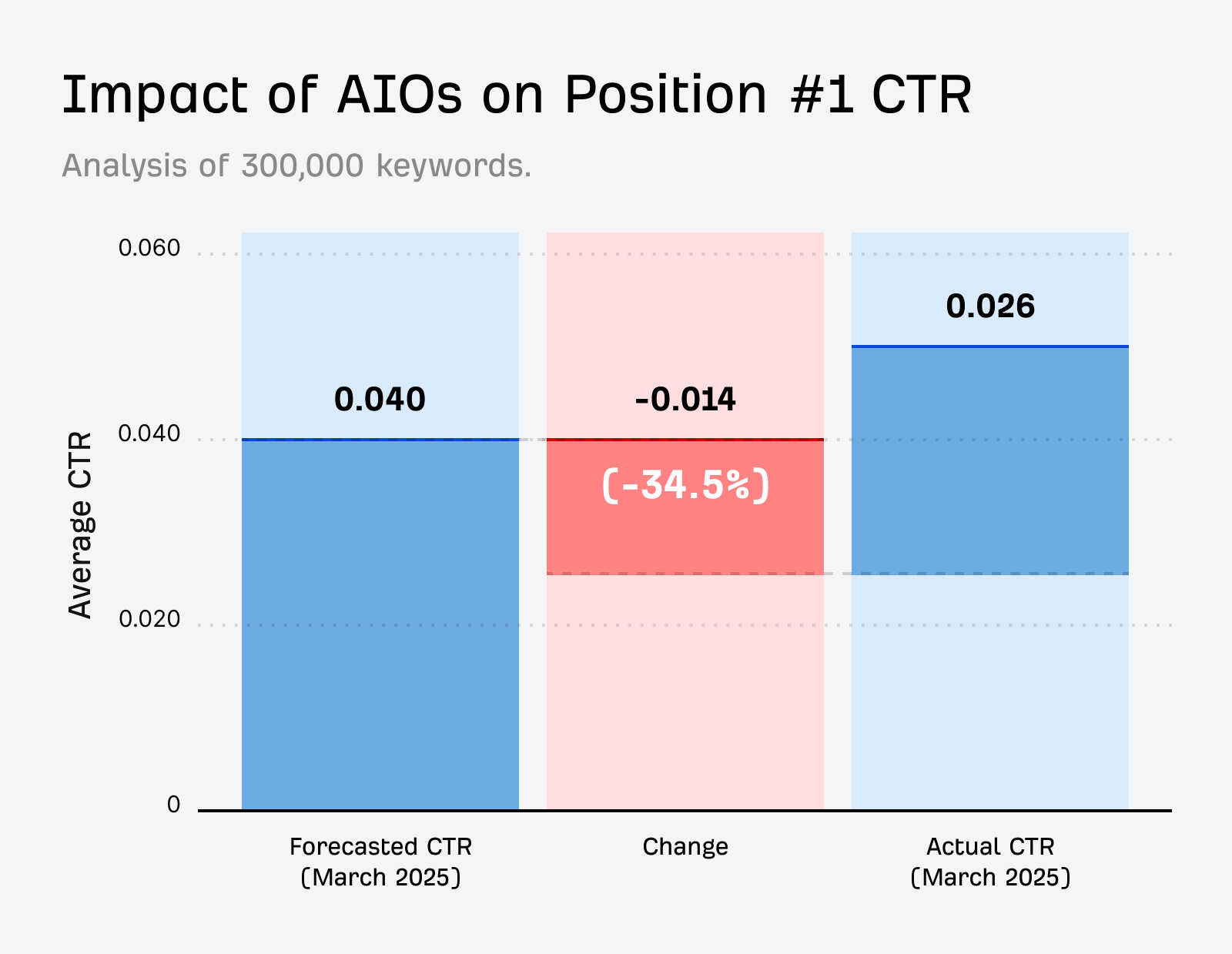

Organic traffic is shrinking fast.

When Google’s AI Overview appears, clickthroughs to the top search results drop by about a third.

That means being named in AI answers is no longer optional.

AI assistants are already part of the discovery journey.

People turn to ChatGPT, Gemini, and Copilot for product recommendations, not just quick facts.

If your brand isn’t in those answers, you’re invisible at the exact moment decisions are made.

That’s why tracking AI visibility matters.

Even if the data is noisy, it shows whether you’re part of the conversation, or whether competitors are taking the spotlight.

In a perfect world, tracking AI visibility at the micro and macro level isn’t an either-or choice.

Micro tracking for high-stakes AI prompts

Micro tracking is about zooming in on the handful of queries that really matter to your business.

These might include:

- Branded prompts: e.g. “What is [Brand] known for?”

- Competitor comparisons: e.g. “[Brand] vs [Competitor]”

- Bottom-of-funnel purchase queries: e.g. “best [product] for [audience]”

Even though AI responses are probabilistic, it’s still worth tracking these “make or break” queries where visibility or accuracy really matters.
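A micro-tracking list can be generated from the templates above by filling in your own brand, competitors, products, and audiences. A minimal sketch with hypothetical values; the resulting prompts would then be checked in whatever tracking tool you use.

```python
from itertools import product

BRAND = "Acme CRM"  # hypothetical brand
COMPETITORS = ["Globex", "Initech"]  # hypothetical competitors
PRODUCTS = ["CRM"]
AUDIENCES = ["startups", "real estate agents"]

def micro_prompts() -> list[str]:
    """Expand the branded, comparison, and bottom-of-funnel templates into concrete prompts."""
    prompts = [f"What is {BRAND} known for?"]
    prompts += [f"{BRAND} vs {c}" for c in COMPETITORS]
    prompts += [f"best {p} for {a}" for p, a in product(PRODUCTS, AUDIENCES)]
    return prompts

for prompt in micro_prompts():
    print(prompt)
```

Keeping this list short and stable makes it easy to re-check the same high-stakes prompts on a schedule.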

Macro tracking for overall AI visibility

Macro tracking is about zooming out to understand the bigger picture of how AI connects your brand to topics and markets.

This approach is about tracking thousands of variations to spot patterns, find new opportunities, and map the competitive landscape.

Most AI tools only focus on the first mode, but Ahrefs’ Brand Radar can help you with both.

It lets you keep tabs on business-critical prompts while also surfacing the unknown unknowns.

And soon it’ll support custom prompts, so you can get even more granular with your tracking.

Tracking both levels helps you answer two questions: are you present where it counts, and are you strong enough to dominate the market?

Final thoughts

No, you’ll never track AI interactions the same way you track traditional searches.

But that’s not the point.

AI search tracking is a compass: it can show you whether you’re headed in the right direction.

The real risk is ignoring your AI visibility while competitors build presence in the space.

Start now, treat the data as directional, and use it to shape your content, PR, and positioning.