Kong has open-sourced Volcano, a TypeScript SDK that composes multi-step agent workflows across multiple LLM providers with native Model Context Protocol (MCP) tool use. The release coincides with broader MCP capabilities in Kong AI Gateway and Konnect, positioning Volcano as the developer SDK in an MCP-governed control plane.

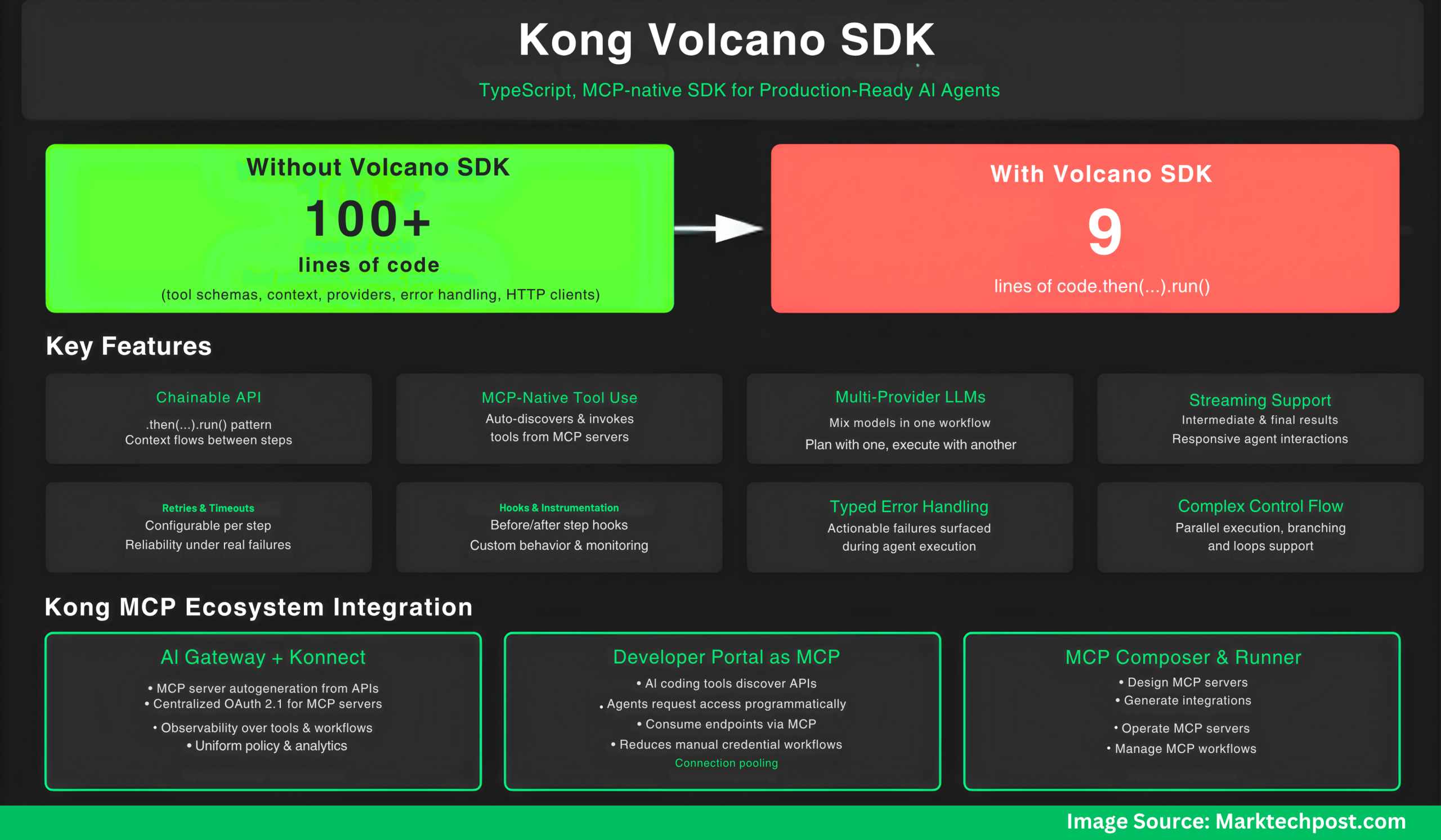

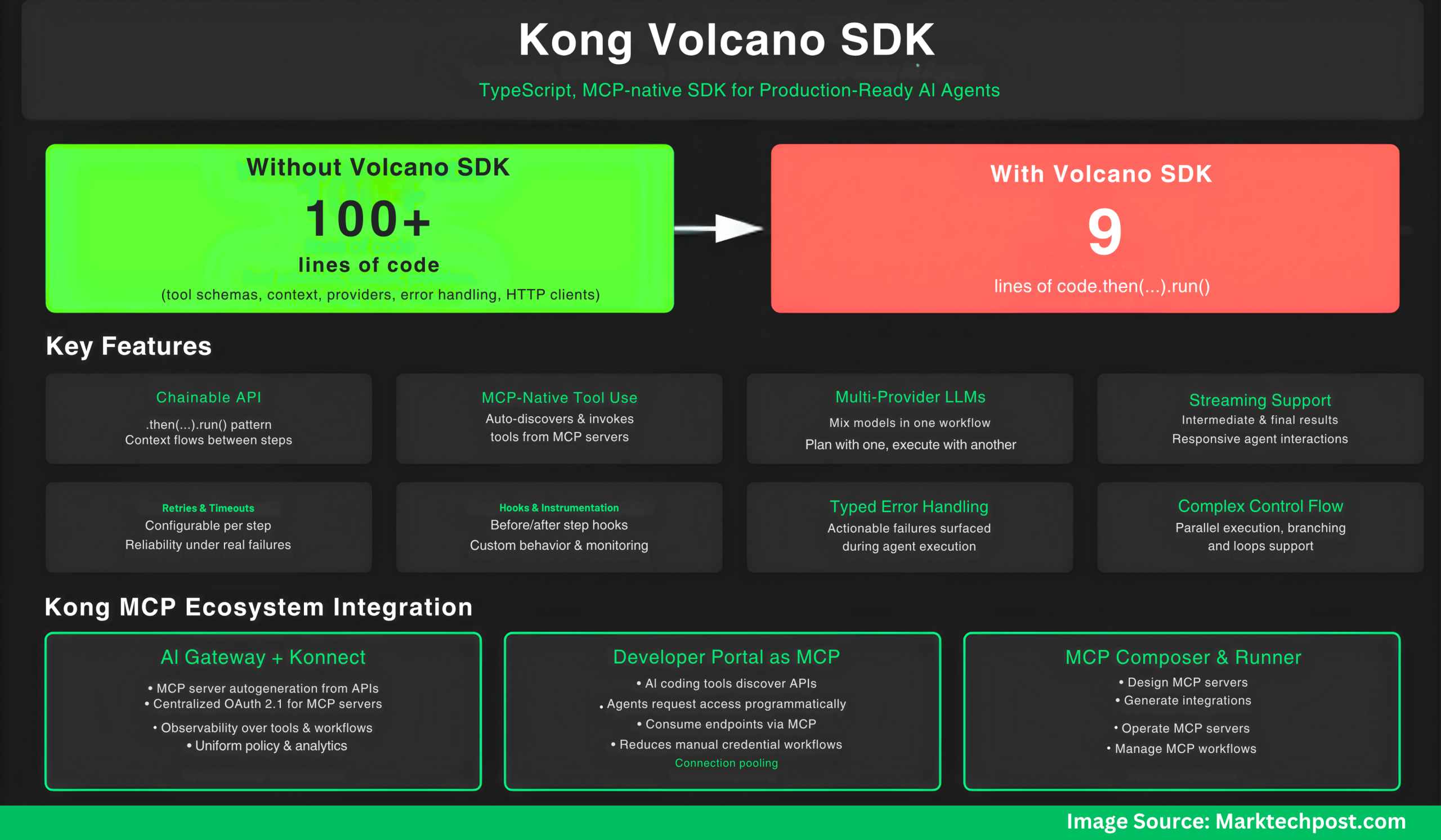

- Why Volcano SDK? Because 9 lines of code are faster to write and easier to manage than 100+.

- Without Volcano SDK? You’d need 100+ lines handling tool schemas, context management, provider switching, error handling, and HTTP clients.

- With Volcano SDK: 9 lines.

```typescript
import { agent, llmOpenAI, llmAnthropic, mcp } from "volcano-ai";

// Setup: two LLMs, two MCP servers
const planner = llmOpenAI({ model: "gpt-5-mini", apiKey: process.env.OPENAI_API_KEY! });
const executor = llmAnthropic({ model: "claude-4.5-sonnet", apiKey: process.env.ANTHROPIC_API_KEY! });
const database = mcp("https://api.company.com/database/mcp");
const slack = mcp("https://api.company.com/slack/mcp");

// One workflow
await agent({ llm: planner })
  .then({
    prompt: "Analyze last week's sales data",
    mcps: [database] // Auto-discovers and calls the right tools
  })
  .then({
    llm: executor, // Switch to Claude
    prompt: "Write an executive summary"
  })
  .then({
    prompt: "Post the summary to #executives",
    mcps: [slack]
  })
  .run();
```

What does Volcano provide?

Volcano exposes a compact, chainable API (.then(...).run()) that passes intermediate context between steps while switching LLMs per step (e.g., plan with one model, execute with another). It treats MCP as a first-class interface: developers hand Volcano a list of MCP servers, and the SDK performs tool discovery and invocation automatically. Production features include automatic retries, per-step timeouts, connection pooling for MCP servers, OAuth 2.1 authentication, and OpenTelemetry traces and metrics for distributed observability. The project is released under Apache-2.0.
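The sketch below shows how a .then(...).run() builder can carry context from one step to the next and swap models per step. It is a minimal, self-contained sketch and not Volcano's implementation. `Workflow`, `Model`, `StepConfig`, and the two stand-in models are hypothetical names. The "models" are plain async functions rather than real OpenAI or Anthropic clients.

```typescript
// Hypothetical sketch of a chainable multi-step workflow. None of these names
// come from the Volcano SDK; they only illustrate the pattern described above.

// A "model" here is just an async function from prompt text to reply text.
type Model = (input: string) => Promise<string>;

interface StepConfig {
  prompt: string;
  llm?: Model; // optional per-step override of the workflow's default model
}

interface StepResult {
  prompt: string; // the step's own prompt
  input: string;  // what was actually sent to the model (prompt + context)
  output: string;
}

class Workflow {
  private readonly steps: StepConfig[] = [];
  private readonly defaultLlm: Model;

  constructor(defaultLlm: Model) {
    this.defaultLlm = defaultLlm;
  }

  // Queue a step and return the builder so calls can be chained.
  then(step: StepConfig): this {
    this.steps.push(step);
    return this;
  }

  // Run steps in order; each step sees the previous step's output as context.
  async run(): Promise<StepResult[]> {
    const results: StepResult[] = [];
    let context = "";
    for (const step of this.steps) {
      const llm = step.llm ?? this.defaultLlm; // per-step model switching
      const input = context === ""
        ? step.prompt
        : `${step.prompt}\n\nContext from previous step:\n${context}`;
      const output = await llm(input);
      results.push({ prompt: step.prompt, input, output });
      context = output;
    }
    return results;
  }
}

// Stand-in models that tag the first line of their input, so the flow is easy to inspect.
const plannerModel: Model = async (input) => `[planner] ${input.split("\n")[0]}`;
const executorModel: Model = async (input) => `[executor] ${input.split("\n")[0]}`;

const demo = new Workflow(plannerModel)
  .then({ prompt: "Analyze last week's sales data" })
  .then({ prompt: "Write an executive summary", llm: executorModel });

demo.run().then((results) => {
  for (const r of results) console.log(r.output);
});
```

The builder exposes a method named `then`, so JavaScript treats it as a thenable. Awaiting the builder itself would call `then` with resolver callbacks. Only the promise returned by `run()` should be awaited, which is how the Volcano example above is written.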

Here are the key features of the Volcano SDK:

- Chainable API: Build multi-step workflows with a concise .then(...).run() pattern; context flows between steps.

- MCP-native tool use: Pass in MCP servers; the SDK auto-discovers and invokes the right tools in each step.

- Multi-provider LLM support: Mix models (e.g., planning with one, execution with another) within one workflow.

- Streaming of intermediate and final results for responsive agent interactions.

- Retries and timeouts configurable per step for reliability under real-world failures.

- Hooks (before/after each step) to customize behavior and instrumentation.

- Typed error handling to surface actionable failures during agent execution.

- Parallel execution, branching, and loops to express complex control flow.

- Observability via OpenTelemetry for tracing and metrics across steps and tool calls.

- OAuth support and connection pooling for secure, efficient access to MCP servers.
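Per-step retries and timeouts are the kind of reliability plumbing an agent SDK can hide from the developer. The sketch below shows one common way to build them in plain TypeScript. Each attempt races against a deadline, and failed attempts are retried with exponential backoff. `runStepWithRetry`, `withTimeout`, and the `RetryOptions` fields are hypothetical names and do not reflect Volcano's actual configuration keys.

```typescript
// Hypothetical per-step retry/timeout helper; not Volcano's API.

interface RetryOptions {
  retries: number;   // extra attempts allowed after the first failure
  timeoutMs: number; // deadline for each individual attempt
  backoffMs: number; // base delay, doubled after each failed attempt
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Reject if `work` does not settle within `ms` milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const deadline = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`step timed out after ${ms} ms`)), ms);
  });
  // Whichever settles first wins; always clear the timer afterwards.
  return Promise.race([work, deadline]).finally(() => clearTimeout(timer));
}

// Run `step`, retrying on failure or timeout until attempts are exhausted.
async function runStepWithRetry<T>(step: () => Promise<T>, opts: RetryOptions): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt <= opts.retries; attempt++) {
    try {
      return await withTimeout(step(), opts.timeoutMs);
    } catch (err) {
      lastError = err;
      if (attempt < opts.retries) {
        await sleep(opts.backoffMs * 2 ** attempt); // exponential backoff
      }
    }
  }
  throw lastError; // every attempt failed: surface the last error
}
```

`Promise.race` only stops waiting; it does not cancel the slow attempt. A production implementation would typically pass an `AbortSignal` into the step so that timed-out HTTP or MCP calls are actually aborted.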

Where does it fit in Kong’s MCP architecture?

Kong’s Konnect platform adds several MCP governance and access layers that complement Volcano’s SDK surface:

- AI Gateway gains MCP gateway features such as server autogeneration from Kong-managed APIs, centralized OAuth 2.1 for MCP servers, and observability over tools, workflows, and prompts in Konnect dashboards. These provide uniform policy and analytics for MCP traffic.

- The Konnect Developer Portal can be turned into an MCP server so AI coding tools and agents can discover APIs, request access, and consume endpoints programmatically. This reduces manual credential workflows and makes API catalogs accessible through MCP.

- Kong’s team also previewed MCP Composer and MCP Runner to design, generate, and operate MCP servers and integrations.
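Under the hood, MCP tool discovery and invocation are JSON-RPC 2.0 exchanges defined by the MCP specification. A client sends `tools/list` to learn which tools a server exposes; each tool has a name, a description, and a JSON Schema for its input. The client then sends `tools/call` with a tool `name` and `arguments` to run it. The sketch below builds those messages and checks required arguments before calling. It omits the transport and the `initialize` handshake that a real session begins with. The `query_sales` tool and the `planCall` helper are hypothetical.

```typescript
// Sketch of the JSON-RPC 2.0 messages MCP uses for tool discovery and invocation.
// Transport (HTTP/stdio) and the `initialize` handshake are omitted.
// The tool "query_sales" and helper names are hypothetical.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// One entry of a `tools/list` result (a subset of the fields in the spec).
interface ToolInfo {
  name: string;
  description?: string;
  inputSchema: { type: "object"; properties?: Record<string, unknown>; required?: string[] };
}

let nextId = 1; // JSON-RPC request ids must be unique within a session

function listToolsRequest(): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list" };
}

function callToolRequest(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

// Pick a discovered tool and build the call, checking required arguments first.
// In an agent framework, the LLM would choose the tool and fill in the arguments.
function planCall(tools: ToolInfo[], name: string, args: Record<string, unknown>): JsonRpcRequest {
  const tool = tools.find((t) => t.name === name);
  if (!tool) throw new Error(`server does not expose a tool named "${name}"`);
  const missing = (tool.inputSchema.required ?? []).filter((key) => !(key in args));
  if (missing.length > 0) throw new Error(`missing required arguments: ${missing.join(", ")}`);
  return callToolRequest(name, args);
}

// Example `tools/list` result, as a server might return it.
const discovered: ToolInfo[] = [
  {
    name: "query_sales",
    description: "Run a read-only sales query",
    inputSchema: { type: "object", properties: { week: { type: "string" } }, required: ["week"] },
  },
];

console.log(JSON.stringify(listToolsRequest()));
console.log(JSON.stringify(planCall(discovered, "query_sales", { week: "2025-W41" })));
```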

Key Takeaways

- Volcano is an open-source TypeScript SDK that builds multi-step AI agents with first-class MCP tool use.

- The SDK provides production features for MCP workflows: retries, timeouts, connection pooling, OAuth, and OpenTelemetry tracing and metrics.

- Volcano composes multi-LLM plans and executions and auto-discovers and invokes MCP servers and tools, minimizing custom glue code.

- Kong paired the SDK with platform controls: AI Gateway and Konnect add MCP server autogeneration, centralized OAuth 2.1, and observability.

Kong’s Volcano SDK is a pragmatic addition to the MCP ecosystem: a TypeScript-first agent framework that aligns developer workflow with enterprise controls (OAuth 2.1, OpenTelemetry) delivered via AI Gateway and Konnect. The pairing closes a common gap in agent stacks (tool discovery, auth, and observability) without inventing new interfaces beyond MCP. The design prioritizes protocol-native MCP integration over bespoke glue. This cuts operational drift and closes auditing gaps as internal agents scale.

Check out the GitHub Repo and Technical details.