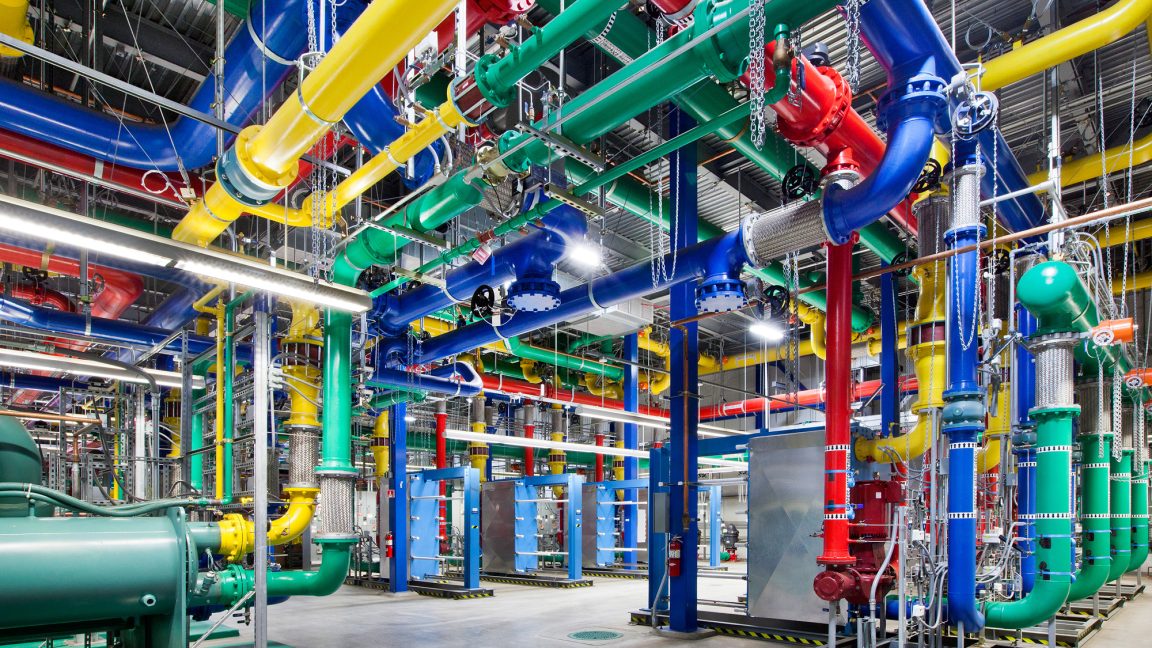

While AI bubble talk fills the air these days, with fears of overinvestment that could pop at any time, something of a contradiction is brewing on the ground: Companies like Google and OpenAI can barely build infrastructure fast enough to fill their AI needs.

During an all-hands meeting earlier this month, Google’s AI infrastructure head Amin Vahdat told employees that the company must double its serving capacity every six months to meet demand for artificial intelligence services, reports CNBC. The comments offer a rare look at what Google executives are telling the company’s own employees internally. Vahdat, a vice president at Google Cloud, presented slides showing the company needs to scale “the next 1000x in 4-5 years.”
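
The two figures line up arithmetically. A quick back-of-the-envelope sketch in Python, assuming capacity compounds smoothly at one doubling every six months (the function names here are illustrative and do not come from Google or CNBC):

```python
import math


def growth_factor(years: float, doubling_period_years: float = 0.5) -> float:
    """Capacity multiple after `years`, assuming one doubling per period."""
    return 2 ** (years / doubling_period_years)


def years_to_reach(target: float, doubling_period_years: float = 0.5) -> float:
    """Years needed to reach a `target` multiple at the same doubling rate."""
    return math.log2(target) * doubling_period_years


for years in (4, 4.5, 5):
    print(f"{years} years: {growth_factor(years):,.0f}x")

print(f"1000x takes about {years_to_reach(1000):.2f} years")
```

Under that assumption, four years of doubling yields 256x and five years yields 1,024x, so a thousandfold increase falls at the upper end of the 4-5 year range shown on the slides.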

While a thousandfold increase in compute capacity sounds ambitious on its own, Vahdat noted some key constraints: Google needs to be able to deliver this increase in capability, compute, and storage networking “for essentially the same cost and increasingly, the same power, the same energy level,” he told employees during the meeting. “It won’t be easy but through collaboration and co-design, we’re going to get there.”

It’s unclear how much of this “demand” Google mentioned represents organic user interest in AI capabilities versus the company integrating AI features into existing services like Search, Gmail, and Workspace. But whether or not users are using the features voluntarily, Google isn’t the only tech company struggling to keep up with a growing base of customers using AI services.

Major tech companies are in a race to build out data centers. Google competitor OpenAI is planning to build six massive data centers across the US through its Stargate partnership project with SoftBank and Oracle, committing over $400 billion in the next three years to reach nearly 7 gigawatts of capacity. The company faces similar constraints serving its 800 million weekly ChatGPT users, with even paid subscribers regularly hitting usage limits for features like video synthesis and simulated reasoning models.

“The competition in AI infrastructure is the most critical and also the most expensive part of the AI race,” Vahdat said at the meeting, according to CNBC’s viewing of the presentation. The infrastructure executive explained that Google’s challenge goes beyond simply outspending competitors. “We’re going to spend a lot,” he said, but noted the real objective is building infrastructure that’s “more reliable, more performant and more scalable than what’s available anywhere else.”