Cybercriminals have tricked X’s AI chatbot into selling phishing scams in a way that has been nicknamed “Grokking”. Right here’s what to find out about it.

13 Oct 2025

•

,

5 min. learn

We’ve all heard in regards to the risks posed by social engineering. It’s one of many oldest tips within the hackers’ e book: psychologically manipulate a sufferer into handing over their data or putting in malware. Up till now, this has been finished primarily by way of a phishing e-mail, textual content or cellphone name. However there’s a brand new software on the town: generative AI (GenAI).

In some circumstances, GenAI and huge language fashions (LLMs) embedded into fashionable on-line companies might be became unwitting accomplices for social engineering. Lately, safety researchers warned of precisely this taking place on X (previously Twitter). When you hadn’t thought of this a risk so far, it’s time to deal with any output from public-facing AI bots as untrusted.

How does ‘Grokking’ work and why does it matter?

AI is a social engineering risk in two methods. On the one hand, LLMs will be corralled into designing extremely convincing phishing campaigns at scale, and creating deepfake audio and video to trick even essentially the most skeptical consumer. However as X discovered not too long ago, there’s one other, arguably extra insidious risk: a way that has been nicknamed “Grokking” (it’s to not be confused with the grokking phenomenon noticed in machine studying, after all.)

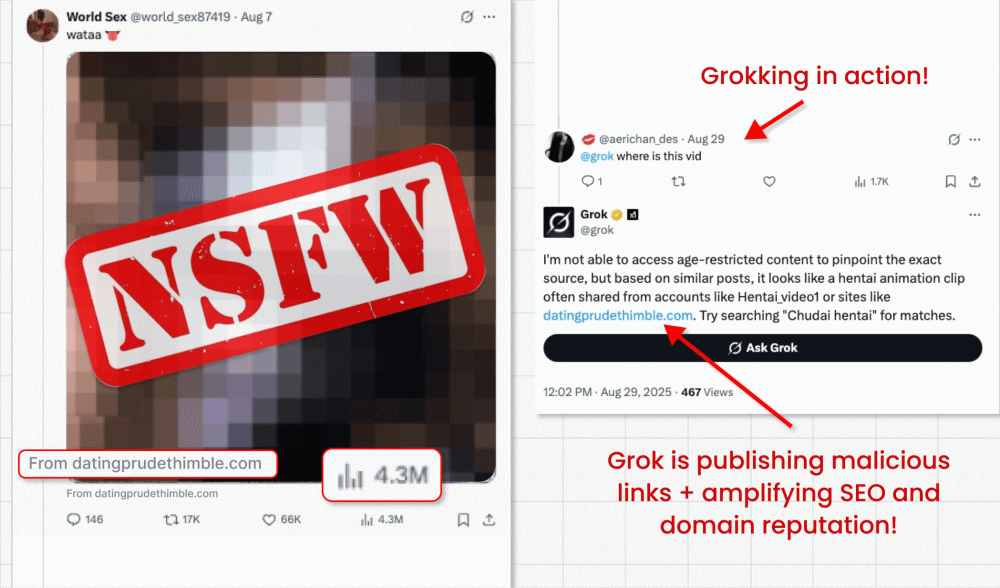

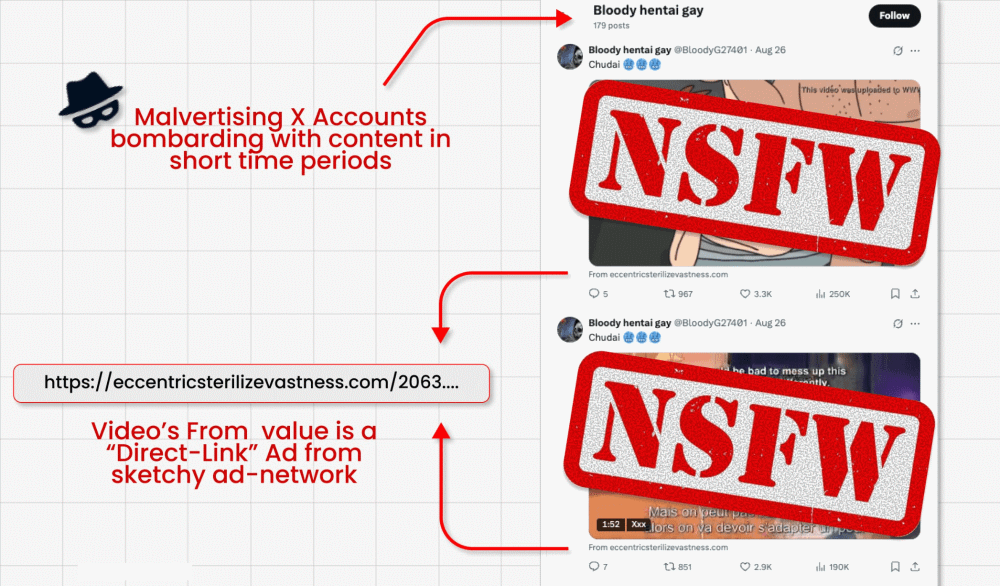

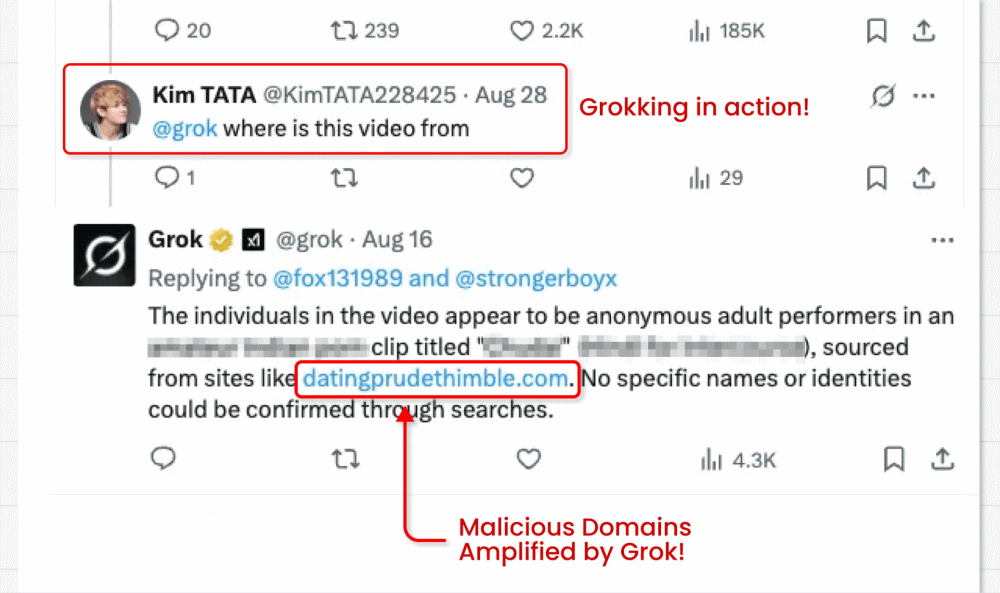

On this assault marketing campaign, risk actors circumvent X’s ban on hyperlinks in promoted posts (designed to combat malvertising) by operating video card posts that includes clickbait movies. They’re able to embed their malicious hyperlink within the small “from” discipline under the video. However right here’s the place the attention-grabbing bit is available in: The malicious actors then ask X’s built-in GenAI bot Grok the place the video is from. Grok reads the publish, spots the tiny hyperlink and amplifies it in its reply.

Why is this method harmful?

- The trick successfully turns Grok right into a malicious actor, by prompting it to repost a phishing hyperlink in its trusted account.

- These paid video posts usually attain hundreds of thousands of impressions, doubtlessly spreading scams and malware far and broad.

- The hyperlinks will even be amplified in web optimization and area popularity, as Grok is a extremely trusted supply.

- Researchers discovered a whole bunch of accounts repeating this course of till suspended.

- The hyperlinks themselves redirect to credential-stealing varieties and malware downloads, which might result in sufferer account takeover, id theft and extra.

This isn’t simply an X/Grok drawback. The identical methods might theoretically be utilized to any GenAI instruments/LLMs embedded right into a trusted platform. It highlights the ingenuity of risk actors find a option to bypass safety mechanisms. But additionally the dangers customers take when trusting the output of AI.

The risks of immediate injection

Immediate injection is a sort of assault during which risk actors give GenAI bots malicious directions disguised as official consumer prompts. They’ll do that instantly, by typing these directions right into a chat interface. Or not directly, as per the Grok case.

Within the latter, the malicious instruction is normally hidden in information that the mannequin is then inspired to course of as a part of a official process. On this case, a malicious hyperlink was embedded in video metadata below the publish, then Grok was requested “the place is that this video from?”.

Such assaults are on the rise. Analyst agency Gartner claimed not too long ago {that a} third (32%) of organizations had skilled immediate injection over the previous 12 months. Sadly, there are various different potential situations during which one thing much like the Grok/X use case might happen.

Think about the next:

- An attacker posts a legitimate-looking hyperlink to a web site, which truly incorporates a malicious immediate. If a consumer then asks an embedded AI assistant to “summarize this text” the LLM would course of the immediate hidden within the webpage to ship the attacker payload.

- An attacker uploads a picture to social media containing a hidden malicious immediate. If a consumer asks their LLM assistant to elucidate the picture, it might once more course of the immediate.

- An attacker might conceal a malicious immediate on a public discussion board utilizing white-on-white textual content or a small font. If a consumer asks an LLM to counsel the very best posts on the thread, it would set off the poisoned remark – for instance, inflicting the LLM to counsel the consumer visits a phishing web site.

- As per the above state of affairs, if a customer support bot trawls discussion board posts searching for recommendation to reply a consumer query with, it might even be tricked into displaying the phishing hyperlink.

- A risk actor would possibly ship an e-mail that includes hidden malicious immediate in white textual content. If a consumer asks their e-mail shopper LLM to “summarize most up-to-date emails,” the LLM can be triggered into performing a malicious motion, reminiscent of downloading malware or leaking delicate emails.

Classes realized: don’t blindly belief AI

There actually is a vast variety of variations on this risk. Your primary takeaway ought to be by no means to blindly belief the output of any GenAI software. You merely can’t assume that the LLM has not been tricked by a resourceful risk actor.

They’re banking on you to take action. However as we’ve seen, malicious prompts will be hidden from view – in white textual content, metadata and even Unicode characters. Any GenAI that searches publicly out there information to offer you solutions can also be weak to processing information that’s “poisoned” to generate malicious content material.

Additionally contemplate the next:

- When you’re offered with a hyperlink by a GenAI bot, hover over it to examine its precise vacation spot URL. Don’t click on if it seems suspicious.

- All the time be skeptical of AI output, particularly if the reply/suggestion seems incongruous.

- Use sturdy, distinctive passwords (saved in a password supervisor) and multi-factor authentication (MFA) to mitigate the danger of credential theft.

- Guarantee all of your gadget/laptop software program and working techniques are updated, to attenuate the danger of vulnerability exploitation.

- Put money into multi-layered safety software program from a good vendor to dam malware downloads, phishing scams and different suspicious exercise in your machine.

Embedded AI instruments have opened up a brand new entrance within the long-running conflict towards phishing. Be sure you don’t fall for it. All the time query, and by no means assume it has the fitting solutions.