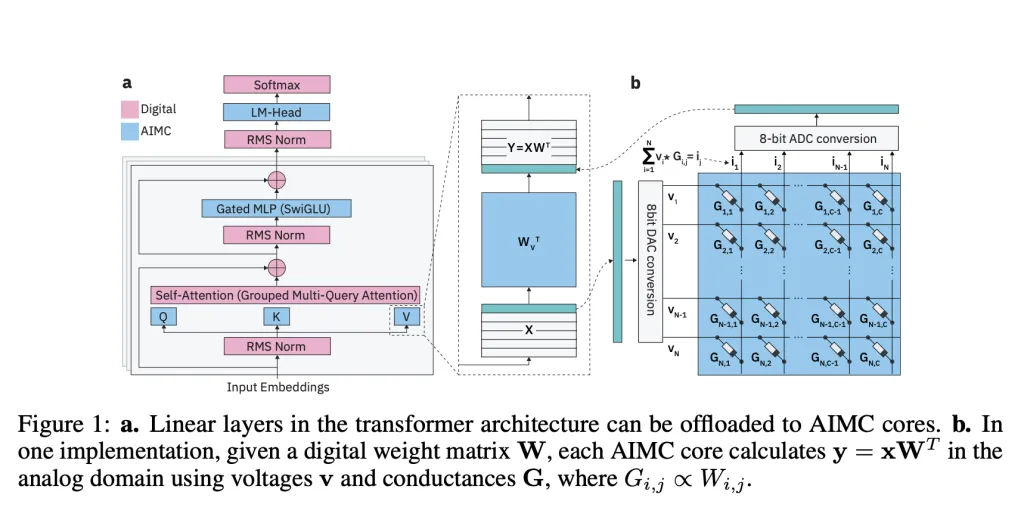

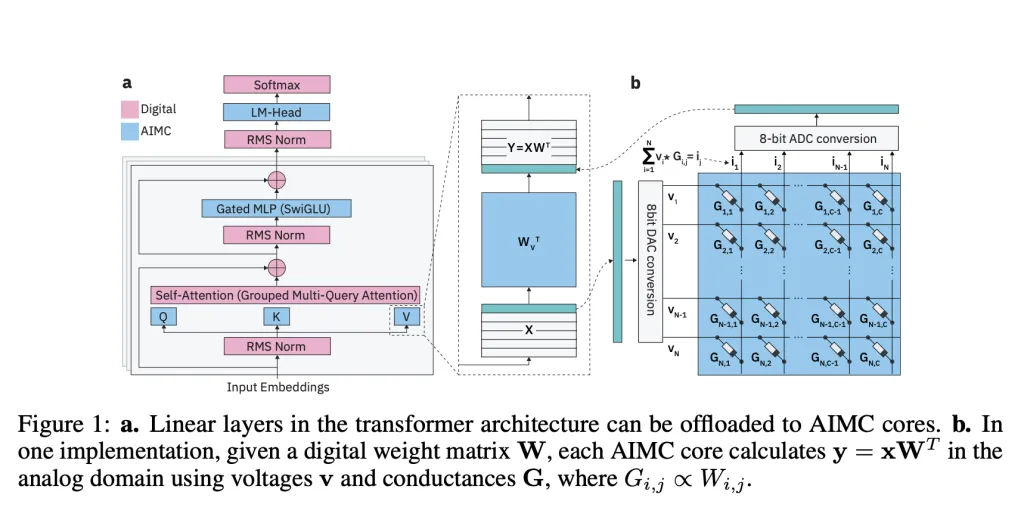

IBM researchers, together with ETH Zürich, have unveiled a new class of Analog Foundation Models (AFMs) designed to bridge the gap between large language models (LLMs) and Analog In-Memory Computing (AIMC) hardware. AIMC has long promised a radical leap in efficiency, running billion-parameter models in a footprint small enough for embedded or edge devices, thanks to dense non-volatile memory (NVM) that combines storage and computation. But the technology's Achilles' heel has been noise: performing matrix-vector multiplications directly inside NVM devices yields non-deterministic errors that cripple off-the-shelf models.

Why does analog computing matter for LLMs?

Unlike GPUs or TPUs, which shuttle data between memory and compute units, AIMC performs matrix-vector multiplications directly inside memory arrays. This design removes the von Neumann bottleneck and delivers large improvements in throughput and power efficiency. Prior studies showed that combining AIMC with 3D NVM and Mixture-of-Experts (MoE) architectures could, in principle, support trillion-parameter models on compact accelerators. That could make foundation-scale AI feasible on devices well beyond data centers.

What makes Analog In-Memory Computing (AIMC) so difficult to use in practice?

The biggest barrier is noise. AIMC computations suffer from device variability, DAC/ADC quantization, and runtime fluctuations that degrade model accuracy. Unlike quantization on GPUs, where errors are deterministic and manageable, analog noise is stochastic and unpredictable. Earlier research found ways to adapt small networks such as CNNs and RNNs (<100M parameters) to tolerate such noise, but LLMs with billions of parameters consistently broke down under AIMC constraints.
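The difference between deterministic quantization error and stochastic analog noise can be illustrated with a toy matrix-vector product. This is a minimal sketch, not a model of any real device: the additive Gaussian weight noise, the `noise_std` value, and the function names are all assumptions made for illustration.

```python
import random

def quantize(x, n_bits, x_max):
    """Deterministic uniform quantizer: the same input always gives the same output."""
    levels = 2 ** (n_bits - 1) - 1
    step = x_max / levels
    clipped = max(-x_max, min(x_max, x))
    return round(clipped / step) * step

def digital_mvm(weights, x, n_bits=4, w_max=1.0):
    """Matrix-vector product with quantized weights: the error is repeatable."""
    return [sum(quantize(w, n_bits, w_max) * xi for w, xi in zip(row, x))
            for row in weights]

def analog_mvm(weights, x, noise_std=0.05, rng=random):
    """Matrix-vector product with fresh Gaussian weight noise on every call.
    The additive Gaussian model is an assumption, not a measured device model."""
    return [sum((w + rng.gauss(0.0, noise_std)) * xi for w, xi in zip(row, x))
            for row in weights]

weights = [[0.31, -0.72, 0.05], [0.90, 0.12, -0.44]]
x = [1.0, 0.5, -2.0]

# Quantized results are identical on every run.
digital_a = digital_mvm(weights, x)
digital_b = digital_mvm(weights, x)

# Analog results differ from call to call, even with identical inputs.
rng = random.Random(0)
run_a = analog_mvm(weights, x, rng=rng)
run_b = analog_mvm(weights, x, rng=rng)
print(digital_a == digital_b, run_a == run_b)
```

Because the quantized error is a fixed function of the weights, it can be calibrated away or trained around once; the analog error is redrawn on every evaluation, so the model itself has to be robust to it.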

How do Analog Foundation Models address the noise problem?

The IBM team introduces Analog Foundation Models, which integrate hardware-aware training to prepare LLMs for analog execution. Their pipeline uses:

- Noise injection during training to simulate AIMC randomness.

- Iterative weight clipping to keep weight distributions within device limits.

- Learned static input/output quantization ranges aligned with real hardware constraints.

- Distillation from pre-trained LLMs using 20B tokens of synthetic data.
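The first three ingredients in the list above can be sketched as a single simulated forward pass through one linear layer. This is an illustrative sketch only: it is not the AIHWKIT-Lightning API, the function names are hypothetical, and the clipping rule (a multiple of the per-row standard deviation), the Gaussian noise model, and the fixed ranges are assumptions. Gradient computation and the distillation loss are omitted.

```python
import random

def clip_weights(weights, n_std=3.0):
    """Clip each row to +/- n_std standard deviations (assumed clipping rule)."""
    clipped = []
    for row in weights:
        mean = sum(row) / len(row)
        std = (sum((w - mean) ** 2 for w in row) / len(row)) ** 0.5
        bound = n_std * std
        clipped.append([max(-bound, min(bound, w)) for w in row])
    return clipped

def fake_quantize(values, value_range, n_bits=8):
    """Quantize to a fixed symmetric range; in training this range would be learned."""
    levels = 2 ** (n_bits - 1) - 1
    step = value_range / levels
    return [round(max(-value_range, min(value_range, v)) / step) * step
            for v in values]

def analog_linear(weights, x, in_range, out_range, noise_std, rng):
    """One simulated analog layer: quantize input, noisy product, quantize output."""
    x_q = fake_quantize(x, in_range)                      # input (DAC) side
    w_max = max(abs(w) for row in weights for w in row)   # noise scales with weight range
    y = [sum((w + rng.gauss(0.0, noise_std * w_max)) * xi
             for w, xi in zip(row, x_q))
         for row in weights]
    return fake_quantize(y, out_range)                    # output (ADC) side

rng = random.Random(0)
# The outlier 3.0 in the first row is pulled back inside the clipping bound.
weights = clip_weights([[0.2, -0.1, 0.05, 3.0], [0.4, 0.3, -0.2, 0.1]], n_std=1.5)
x = [0.5, -1.0, 2.5, 0.25]
y = analog_linear(weights, x, in_range=2.0, out_range=4.0, noise_std=0.02, rng=rng)
print(weights[0], y)
```

In a training loop built along these lines, the noise would be resampled on every forward pass and the clipping reapplied after each optimizer step, so the model never sees the same perturbation twice.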

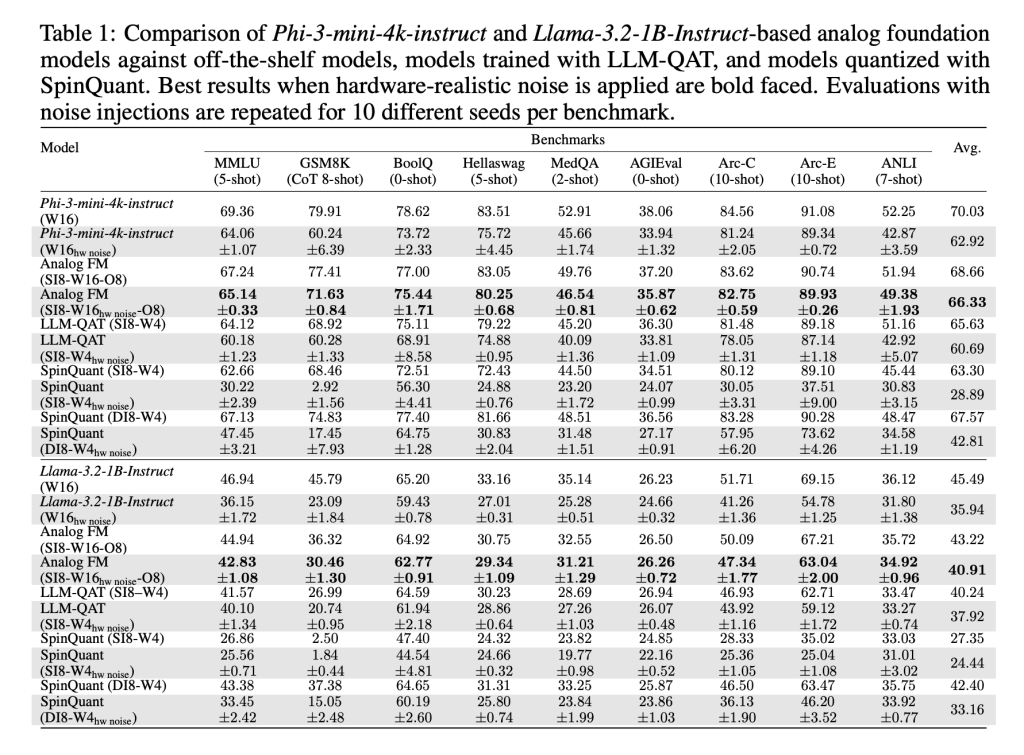

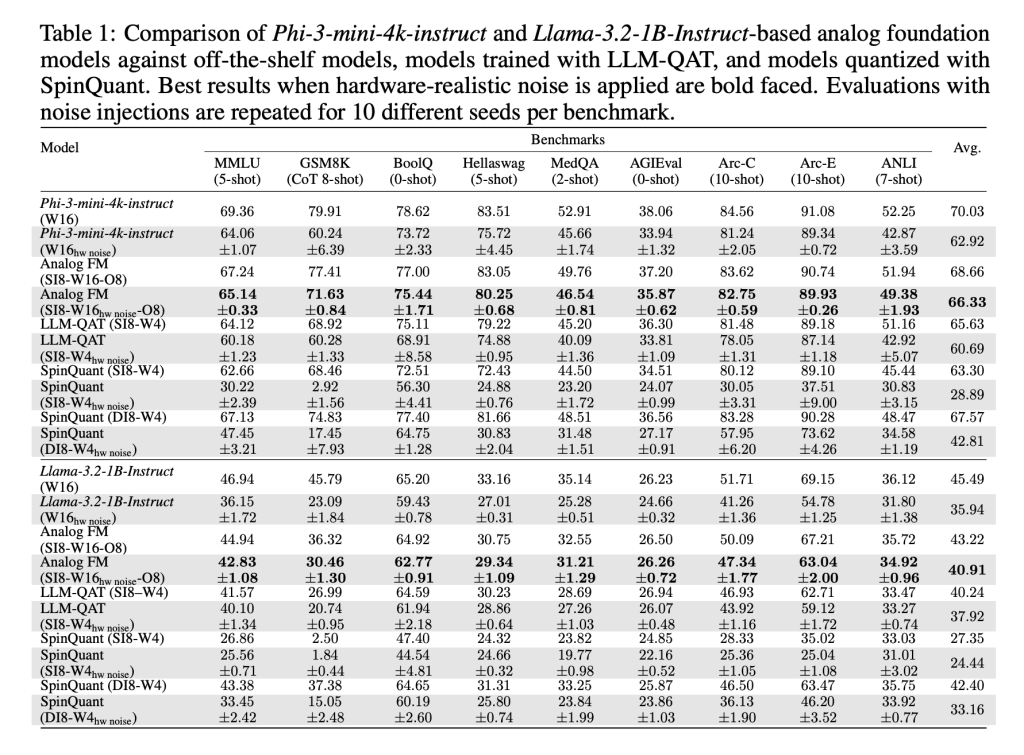

These techniques, implemented with AIHWKIT-Lightning, allow models like Phi-3-mini-4k-instruct and Llama-3.2-1B-Instruct to sustain performance comparable to 4-bit weight / 8-bit activation quantized baselines under analog noise. In evaluations across reasoning and factual benchmarks, AFMs outperformed both quantization-aware training (QAT) and post-training quantization (SpinQuant).

Do these models work only on analog hardware?

No. An unexpected result is that AFMs also perform strongly on low-precision digital hardware. Because AFMs are trained to tolerate noise and clipping, they handle simple post-training round-to-nearest (RTN) quantization better than existing methods. This makes them useful not only for AIMC accelerators but also for commodity digital inference hardware.
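For readers unfamiliar with RTN, the idea is simply to scale each group of weights onto an integer grid, round, and scale back. The sketch below assumes symmetric per-row 4-bit quantization; the function name and the grouping are illustrative choices, not details taken from the paper.

```python
def rtn_quantize_row(row, n_bits=4):
    """Round-to-nearest: scale by the row's max magnitude, round to integers, rescale."""
    q_max = 2 ** (n_bits - 1) - 1           # 7 for symmetric 4-bit
    scale = max(abs(w) for w in row) / q_max
    if scale == 0.0:
        return list(row)                     # all-zero row needs no quantization
    return [max(-q_max, min(q_max, round(w / scale))) * scale for w in row]

row = [0.70, -0.35, 0.10, 0.02]
quantized = rtn_quantize_row(row)

# The rounding error of each weight is at most half a quantization step.
max_err = max(abs(a - b) for a, b in zip(row, quantized))
print(quantized, max_err)
```

A model trained with noise of comparable magnitude has already seen perturbations of this size, which is one plausible reading of why AFMs hold up under RTN.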

Can performance scale with more compute at inference time?

Yes. The researchers tested test-time compute scaling on the MATH-500 benchmark, generating multiple answers per query and selecting the best one via a reward model. AFMs showed better scaling behavior than QAT models, with accuracy gaps shrinking as more inference compute was allocated. This is consistent with AIMC's strengths: low-power, high-throughput inference rather than training.
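The selection scheme described here is commonly called best-of-n sampling. The sketch below shows only the control flow; `toy_generate` and `toy_reward` are hypothetical stand-ins (a noisy number generator and a reward that happens to know the target), not the models or the reward model used in the study.

```python
import random

def best_of_n(generate, reward, query, n, rng):
    """Sample n candidate answers and return the one the reward function scores highest."""
    candidates = [generate(query, rng) for _ in range(n)]
    return max(candidates, key=lambda answer: reward(query, answer))

def toy_generate(query, rng):
    """Hypothetical noisy 'model': the true answer plus Gaussian error."""
    return query["truth"] + rng.gauss(0.0, 2.0)

def toy_reward(query, answer):
    """Hypothetical reward: higher when the answer is closer to the truth."""
    return -abs(answer - query["truth"])

query = {"truth": 42.0}
single = best_of_n(toy_generate, toy_reward, query, n=1, rng=random.Random(0))
best16 = best_of_n(toy_generate, toy_reward, query, n=16, rng=random.Random(0))

# With the same seed, the n=16 pool contains the n=1 sample, so it cannot do worse.
print(abs(single - 42.0), abs(best16 - 42.0))
```

Each extra sample costs one more inference pass, which is why cheap, high-throughput inference hardware pairs naturally with this kind of scaling.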

How does this impact the future of Analog In-Memory Computing (AIMC)?

The research team provides the first systematic demonstration that LLMs can be adapted to AIMC hardware without catastrophic accuracy loss. While training AFMs is resource-heavy and reasoning tasks like GSM8K still show accuracy gaps, the results are a milestone. The combination of energy efficiency, robustness to noise, and cross-compatibility with digital hardware makes AFMs a promising direction for scaling foundation models beyond GPU limits.

Summary

The introduction of Analog Foundation Models marks a critical milestone for scaling LLMs beyond the limits of digital accelerators. By making models robust to the unpredictable noise of analog in-memory computing, the research team shows that AIMC can move from a theoretical promise to a practical platform. While training costs remain high and reasoning benchmarks still show gaps, this work establishes a path toward energy-efficient large-scale models running on compact hardware, pushing foundation models closer to edge deployment.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.